The Chain of Thought Decentralized AI Canon is my running attempt to chart where AI and crypto collide, overlap, and fuse into something new.

Each Canon zooms in on a different layer of this economy (trust, data, cognition, coordination, robotics) and then pans out to show how they connect into a single system of intelligence and incentive.

The Canons shift as the terrain does. New essays, data, and debates reshape the map every time we learn something worth revising.

Subscribe if you want to follow this live experiment in mapping the intelligent economy.

TL;DR

Public text is almost tapped out while the highest-signal private streams stay locked away behind paywalls, APIs, and privacy barriers.

Crypto data networks address this using three primary approaches: decentralized web scraping (e.g., Grass), user-consented private data aggregation (e.g., Vana), and on-demand synthetic data generation (e.g., Dria).

These networks leverage crypto primitives like tokens for incentives, blockchains for verifiable provenance, and DAOs for community governance.

Sustainable business models require focusing on utility-driven applications and moving up the value chain beyond raw data sales.

Key challenges include bootstrapping early networks, ensuring data quality, and overcoming enterprise skepticism.

The ultimate vision is a living data economy. Networks that secure credible, high-fidelity data now will dictate training speed and model performance, and capture the largest share of future AI value.

There’s a growing tension in AI: we’re racing toward ever more powerful models, yet running low on what matters most, high-signal training data. The shortage is one of quality, not quantity.

According to Epoch AI, the largest training runs could deplete the world’s supply of public human-generated text—around 300 trillion tokens—by 2028. Some forecasts suggest we hit that wall as early as 2026, especially with overtraining.

And as Ethan Mollick notes, even vast amounts of niche text (like terabytes of amateur fiction) barely move the needle. The easy data is gone. We’ve scraped Wikipedia, drained Reddit, and mined Common Crawl. What’s left offers diminishing returns.

So we hit a paradox: while model capabilities continue to leap ahead, the availability of the right kind of data narrows. And as it does, the price and importance of quality, high-fidelity data skyrockets.

This is where things get interesting.

The Data We Need Now (And Why It's Locked Away)

People often say, “Data is the new oil”.

But this oversimplifies the challenge.

Oil is static and interchangeable. Data is dynamic, contextual, and deeply tied to how it’s sourced and used.

Here’s the new hierarchy of AI-critical data:

| Category | Sources | Typical Barrier |
|---|---|---|
| Private & niche | Hospital imaging archives, manufacturing telemetry | Institutional silos, privacy law |
| Net-new domains | Robot teleoperation, agent interaction logs | Needs bespoke collection pipelines |
| Real-time streams | Market order books, social firehoses, supply-chain IoT | Latency and licensing costs |
| Expert-annotated | Radiology scans with specialist labels | Expensive, slow, hard to scale |

Private and Niche Datasets: The highest-signal data is locked behind institutional walls: health records, genomics, financial histories, factory telemetry, proprietary R&D. They’re fragmented and often siloed.

Net New Data for Emerging Domains: You can’t train a household robot on Reddit. Robotics needs teleoperation, sensor data, and real-world context. None of this exists in volume yet, so it must be actively generated through purpose-built pipelines.

Another key area for advancing agentic AI is capturing real action sequences: user clicks, navigation paths, and interaction logs. One example is the Wikipedia clickstream, an anonymized dataset that traces how users move from one article to the next.

Fresh, Real-Time Data: Intelligence needs a feed, not a snapshot. For models to adapt to live markets, we need real-time crawling and streaming.

High-Quality, Expert-Annotated Data: In fields like radiology, law, and advanced science, accuracy depends on expert labeling. Crowd-sourced annotation won’t cut it. This kind of data is expensive, slow, and hard to scale, but critical for domain competence.

The era of just scraping the internet is ending.

Web2 Knows This

As AI valuations soared, platforms realized their most valuable asset was user data.

Reddit signed a training deal with Google reportedly worth about $60M a year. X charges enterprises steep fees for API access. OpenAI is striking licensing agreements with publishers like The Atlantic and Vox Media, offering $1M–$5M per archive.

And the people, like you and me, who created that data? We get nothing.

Users generate the content. Platforms monetize it. The rewards accrue to a few centralized players, while the real contributors are left out. It’s a deeply extractive dynamic.

What if this changed?

Crypto x Data = Rebuilding Data Ownership From First Principles

We see three major aggregation strategies take shape around data:

Scraping and labeling public web data

Aggregating user-owned, private data

Generating synthetic data on demand

1. Scrape Public Data, Repackage at Scale

This focuses on harvesting the open web (forums, social platforms, public websites) and turning that raw stream into structured, machine-readable data for AI developers.

The indexed internet holds roughly 10 petabytes of usable data (10,000 TB). When broader public databases are factored in, that figure swells to around 3 exabytes (3,000,000 TB). Add multimedia platforms like YouTube, and the total exceeds 10 exabytes.

So there’s a lot of data out there.

| Source | Estimated Size | Notes |
|---|---|---|
| Indexed Web Pages | ~10 petabytes | Estimated based on 4.57 billion pages at 2.2 MB each |
| Deep Web Pages | ~100 petabytes | Estimated as 10 times larger than the indexed web |
| Public Databases and APIs | ~1-10 exabytes | Genomics, astronomy, climate data, open government portals |
| Public File Sharing and Storage | ~1 exabyte | Data from platforms like GitHub, Dropbox, and public repositories |
| Public Multimedia Platforms | ~10 exabytes+ | YouTube. Requires significant processing for AI use beyond transcripts |
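The first two rows can be sanity-checked with quick arithmetic. This is a back-of-envelope sketch that assumes decimal units (1 MB = 10^6 bytes, 1 PB = 10^15 bytes); the variable names are just for illustration.

```python
# Back-of-envelope check of the indexed-web and deep-web estimates.
# Assumes decimal units: 1 MB = 10**6 bytes, 1 PB = 10**15 bytes.
PAGES = 4.57e9        # indexed pages (figure from the table)
MB_PER_PAGE = 2.2     # average page weight in MB (figure from the table)

total_bytes = PAGES * MB_PER_PAGE * 10**6
indexed_pb = total_bytes / 10**15
deep_web_pb = indexed_pb * 10   # table's assumption: deep web is ~10x larger

print(f"Indexed web: ~{indexed_pb:.1f} PB")
print(f"Deep web:    ~{deep_web_pb:.0f} PB")
```

The result lands at roughly 10 PB and 100 PB, matching the table. The estimates are only as good as the page count and average page weight behind them.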

Data is sourced through distributed scraping infrastructure: often, networks of user-run nodes. Once collected, the data is cleaned, lightly annotated, and formatted into structured datasets. These are then sold to model developers looking for affordable data at a fraction of what centralized providers like Scale AI charge.

The competitive edge comes from decentralization, which reduces scraping costs. Projects like Grass and Masa are turning public web data into a permissionless, commoditized resource.

Grass launched in 2024 as a decentralized scraping network built on Solana. Within a year, it grew to over 2 million active nodes. Users install a lightweight desktop app that transforms their device into a Grass node, contributing idle bandwidth to crawl the web.

Each node handles a small chunk of the scraping workload, and together they pull in over 1,300 TB of data daily and growing (see chart above). That data is bundled and sold as a continuous feed to AI companies.

By late 2024, Grass was reportedly generating ~$33 million in annualized revenue from AI clients, which we hear include some of the big AI research labs we’re all familiar with (speculation, not confirmed).

Over time, it plans to distribute revenue back to node operators and token stakers, essentially treating data monetization as a shared revenue stream.

The vision is bigger than scraping: Grass is aiming to become a decentralized API for real-time data. It plans to launch Live Context Retrieval, which would let clients query real-time web data from across the network. Getting to this stage will require many more nodes.

Masa is taking a different route through the Bittensor ecosystem, running a dedicated data-scraping subnet (Subnet 42). Its “data miners” collect and annotate real-time web content, delivering data feeds to AI agents. Developers tap Masa to retrieve X/Twitter content to feed directly into LLM pipelines, bypassing costly APIs.

To scale, both Grass and Masa depend on a steady base of reliable node operators and contributors. That makes incentive design a core challenge. Other key challenges:

Very noisy data, prone to bias

Regulatory grey area

Lack of a real competitive moat since data is non-exclusive

2. Private Data, User-Controlled and Monetized

This focuses on unlocking high-value data that lives behind walls: personal, proprietary, and unavailable through public scraping. Think DMs, health records, financial transactions, codebases, app usage, smart device logs.

The core hypothesis: Private data contains deep, high-signal context that can dramatically improve AI performance, if it can be accessed securely with user consent.

Crypto AI startups turn private data into an on-chain asset: trackable, composable, and monetizable.

Startups like Vana, OpenLedger, and ORO are building this ecosystem.

Vana has developed a full Layer 1 blockchain for user-owned data. Users can link or upload their data into Data DAOs, which pool similar types of data (e.g., social media, wearable device logs) for specific AI use cases. Over 1.3 million users have contributed to Vana pools, uploading more than 6.5 million data points.

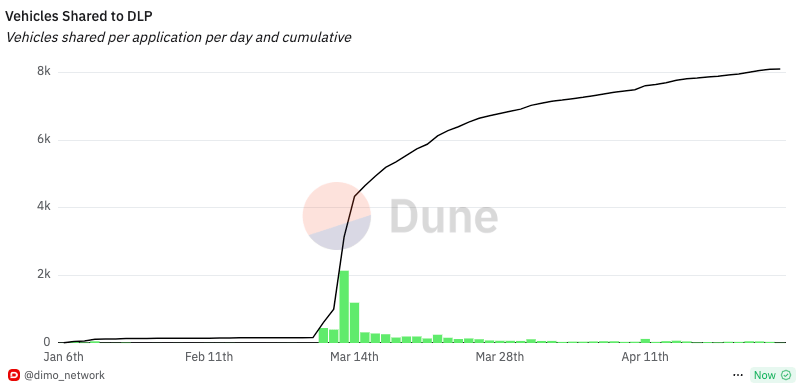

One particular standout: DLP Labs, building within the Vana ecosystem. In less than two months, it onboarded over 8,000 vehicles using Dimo devices to share data like mileage, speed, and telemetry. The team is now developing multiple monetization channels around this growing dataset.

ORO takes a more interactive approach. It offers a Data Sharing App where users connect accounts and complete “missions” to contribute specific types of data. For example, a model developer might request e-commerce receipts to train a recommendation system. Users can securely share past Amazon orders, complete surveys, or grant access to shopping patterns, and get paid when their data is used. Everything runs on privacy-preserving infrastructure, including zkTLS (zero-knowledge TLS) and encrypted data vaults.

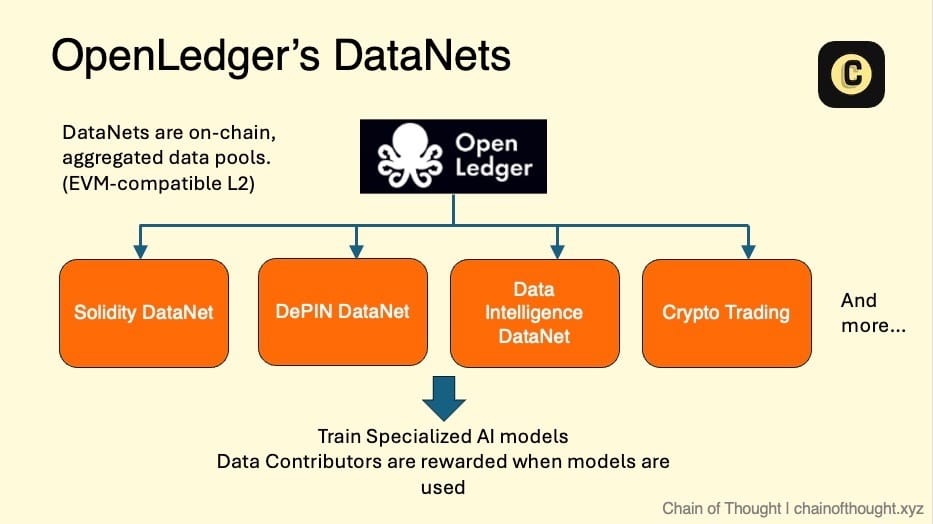

OpenLedger, a protocol for verifiable and attributable AI, runs DataNets where users contribute private data to an on-chain aggregated data pool and earn tokens when it’s used.

Key challenges:

Bootstrapping early users and private datasets takes a lot of time

Incentive design is complex and still evolving

Privacy must be rock-solid to earn trust

Still, if the infrastructure holds, this model offers a compelling answer to one of AI’s core bottlenecks: access to real, contextual, high-quality private data, without compromising ownership or privacy.

3. Synthetic Data, Engineered on Demand

When real-world data is scarce, messy, or expensive, the best move might be to generate it from scratch.

Synthetic data refers to artificially created data (text, images, structured records) that mimic real-world patterns without copying real-world content.

Synthetic data solves several bottlenecks:

Privacy: No personal data involved, reducing regulatory risk.

Scalability: Datasets can be generated overnight, on demand.

Coverage: Synthetic pipelines can fill blind spots, simulate rare edge cases, or balance demographic skew.

Customization: Output can be tuned precisely to model needs.

For high-velocity domains like robotics or agent training, synthetic data is often more useful than real-world data. You can simulate edge cases that haven’t even happened yet.

Platforms like Dria assemble decentralized networks of AI agents to create datasets tailored to specific use cases.

A user might request something like “generate one million biology questions.”

Dria splits the task across a swarm of specialized agents, each handling a part of the workflow: generation, evaluation, formatting, and quality control. The system operates in parallel, often running over 50 models at once, and can produce more than 10,000 tokens per second.

To ensure quality, other nodes serve as validators, verifying accuracy and consistency. Contributors are rewarded in tokens, and blockchain infrastructure tracks execution and payout transparently.

This architecture creates a new kind of data supply chain: cheap, fast, private, and composable.

The natural monetization model is data-as-a-service:

Job-based pricing: Users specify a dataset (e.g., “100K legal dialogue samples”), receive a quote, and the network generates and delivers the data.

Pay-per-output: Developers pay per sample or token.

Marketplaces: Curated prompt or dataset configs can be listed and sold, with Dria taking a cut.

While drafting this, we noticed an interesting development: Dria appears to be quietly moving into building acceleration hardware for latency-sensitive AI workloads.

Synthetic data isn’t a silver bullet, though. Overuse can lead to model collapse, where systems lose grounding in the real world. Generative pipelines can also inherit and amplify bias from the models they rely on. And synthetic datasets can sometimes lack edge-case nuance that only shows up in lived data.

Other key challenges:

Requires robust evaluation and feedback loops

Can amplify bias and needs grounding in real-world data

We see the future of AI training as a blend of real-world and synthetic data, a pattern already emerging in many recent models.

Real-world data offers edge cases, context, and ground truth. Synthetic data fills in the blanks and adds scale. The art is knowing when to anchor, when to augment, and how to keep that balance from tipping too far in either direction.

💡 One quick note:

These 3 strategies are not mutually exclusive.

A well-designed DataDAO, for instance, might use public data as a foundation, enrich it with opt-in user data, and generate synthetic data to fill in the gaps.

Grass is reportedly building what may become the “largest multimodal synthetic dataset in the world.”

The Role of Crypto in Data

The point with Crypto x Data isn’t to naively shove all data “on-chain”. That’s neither scalable nor privacy-friendly.

And frankly, would you want your personal data etched into the blockchain? I wouldn’t.

Instead, crypto offers primitives for constructing new types of data systems that are incentive-aligned and permissionless by design.

So what does that actually look like?

Tokens as Programmable Incentives

High-quality data needs to be gathered, annotated, validated, and maintained. These tasks are often undercompensated. Tokens offer a programmable way to coordinate and reward those efforts across a network.

They turn fragmented workflows into economic systems: contributors earn for uploading data, validators get paid for quality checks, and compute providers can monetize idle capacity.

The promise of future revenue (via the token) lowers the upfront cost of building robust datasets.

Vana provides a working example. Its DataDAOs use token incentives to attract participants while relying on smart economic design to keep things stable. Emissions taper over time, and its “burn-on-access” model sinks token supply, helping manage inflation and maintain long-term value for contributors.
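To see how tapering emissions and burn-on-access interact, here is a toy simulation. It is not Vana's actual token model: the starting supply, emission schedule, decay rate, and burn amount are all hypothetical numbers picked for illustration.

```python
def simulate_supply(initial_supply, first_year_emission, decay, yearly_burn, years):
    """Toy model: emissions shrink geometrically while access fees burn tokens.

    All parameters are hypothetical; this is not any network's real schedule.
    """
    supply = initial_supply
    emission = first_year_emission
    history = []
    for year in range(1, years + 1):
        supply += emission                   # new tokens paid to contributors
        supply -= min(yearly_burn, supply)   # tokens burned when data is accessed
        history.append((year, emission, supply))
        emission *= decay                    # emissions taper each year
    return history


history = simulate_supply(
    initial_supply=100_000_000,
    first_year_emission=10_000_000,
    decay=0.5,
    yearly_burn=2_000_000,
    years=5,
)
for year, emission, supply in history:
    print(f"year {year}: emitted {emission:,.0f}, supply {supply:,.0f}")
```

With these made-up inputs, supply grows while emissions exceed the burn, then starts shrinking once yearly emissions fall below it. In a real network the burn would depend on actual data demand rather than a fixed number.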

On-Chain Provenance and Smart Licensing

Blockchains provide trust rails for data ecosystems.

While the raw data itself typically lives off-chain, the chain records who contributed what, when, and under what terms. This creates verifiable provenance, a necessary foundation for downstream attribution.
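A minimal sketch of what such a record could contain, assuming the chain stores only a SHA-256 fingerprint of the data plus metadata. The `ProvenanceRecord` fields and helper names are hypothetical and not taken from any specific protocol.

```python
import hashlib
import json
from dataclasses import dataclass, asdict


@dataclass(frozen=True)
class ProvenanceRecord:
    contributor: str     # who
    content_hash: str    # what (a fingerprint, not the data itself)
    timestamp: int       # when (Unix seconds)
    license_terms: str   # under what terms


def fingerprint(data: bytes) -> str:
    return hashlib.sha256(data).hexdigest()


def make_record(contributor, data, timestamp, license_terms):
    return ProvenanceRecord(contributor, fingerprint(data), timestamp, license_terms)


def matches(record, data):
    """Check that off-chain data is the data the record commits to."""
    return record.content_hash == fingerprint(data)


raw = b"heart_rate,72\nheart_rate,75\n"   # hypothetical data; stays off-chain
record = make_record("0xContributor", raw, 1_700_000_000, "research-only")
print(json.dumps(asdict(record), indent=2))
print(matches(record, raw), matches(record, raw + b"tampered"))
```

Anyone holding the raw file can recompute the hash and confirm it matches the public record, while the record itself reveals nothing about the contents.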

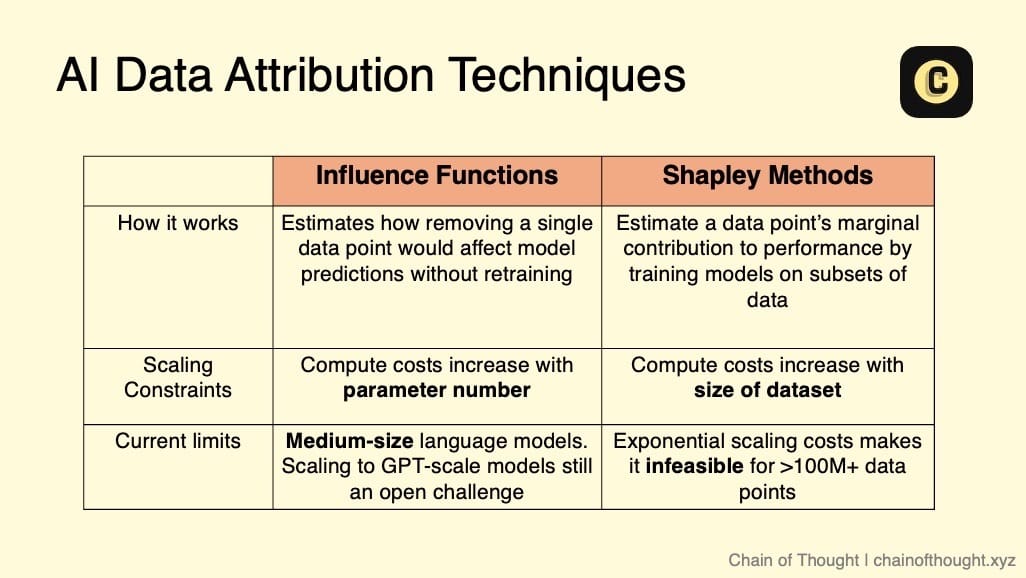

Next, how do you technically prove that a specific dataset improved a model’s performance? The short answer: it’s still a tough problem. Tracing the impact of data inputs on model behavior requires fine-grained tracking systems that are either in early research stages or limited to narrow domains. Techniques like influence functions or Shapley methods (see table above) have scaling constraints and are not suitable for large models.
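To get a feel for why Shapley-style attribution struggles at scale, here is an exact computation for three hypothetical datasets. The accuracy numbers are invented for illustration; in practice each entry would require training and evaluating a model on that subset.

```python
from itertools import permutations


def shapley_values(players, value):
    """Exact Shapley values: average marginal contribution over all orderings.

    Cost grows factorially with the number of players, which is why this
    approach breaks down with many contributors.
    """
    totals = {p: 0.0 for p in players}
    orderings = list(permutations(players))
    for order in orderings:
        coalition = frozenset()
        for p in order:
            with_p = coalition | {p}
            totals[p] += value(with_p) - value(coalition)
            coalition = with_p
    return {p: t / len(orderings) for p, t in totals.items()}


# Hypothetical accuracy of a model trained on each subset of datasets.
ACCURACY = {
    frozenset(): 0.50,
    frozenset({"A"}): 0.70,
    frozenset({"B"}): 0.60,
    frozenset({"C"}): 0.60,
    frozenset({"A", "B"}): 0.80,
    frozenset({"A", "C"}): 0.80,
    frozenset({"B", "C"}): 0.60,   # B and C are redundant with each other
    frozenset({"A", "B", "C"}): 0.80,
}

scores = shapley_values(["A", "B", "C"], lambda s: ACCURACY[s])
print(scores)
```

Dataset A earns most of the credit because B and C overlap. Even this toy case needs eight subset evaluations and six orderings; with thousands of contributors and a large model, exact computation is out of reach, so real systems would need approximations.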

The challenge runs deeper than infrastructure. Not all data holds equal value. A single, well-documented case study on a rare disease might contribute more than thousands of generic images. But how do you quantify and reward that kind of asymmetry?

Flat, volume-based payouts are easy to implement, but also easy to game. Meaningful attribution demands more sophisticated metrics: how unique is the data? How contextually relevant? What’s its real-world impact? Building systems that can evaluate these factors transparently is still an open frontier.

Where provenance can be enforced, smart contracts close the loop. Licensing terms are embedded directly into code. Royalties can be automatically routed to contributors whenever a model is deployed, queried, or monetized.
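The routing logic itself can be simple. Below is a sketch of a pro-rata royalty split, written in Python rather than a smart-contract language; the contributor names and attribution weights are hypothetical, and how those weights get set is the hard part discussed above.

```python
def split_royalty(payment_units, weights):
    """Split an integer payment pro rata by contributor weight.

    Uses integer math (as on-chain code typically would) and gives any
    rounding remainder to the highest-weight contributor so nothing is lost.
    """
    total_weight = sum(weights.values())
    if total_weight <= 0:
        raise ValueError("weights must sum to a positive number")
    payouts = {who: payment_units * w // total_weight for who, w in weights.items()}
    remainder = payment_units - sum(payouts.values())
    top = max(weights, key=weights.get)
    payouts[top] += remainder
    return payouts


# Hypothetical contributors and attribution weights.
payouts = split_royalty(1_000, {"alice": 5, "bob": 3, "carol": 1})
print(payouts)
```

Every unit paid in is paid out, and the split is fully determined by the recorded weights, which is what makes it auditable.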

In this way, crypto transforms data from a static asset into a programmable economic resource.

DAO-Governed Data Commons

Finally, tokenized data systems also unlock collective governance. Contributors become stewards. Token holders vote on who gets access, under what terms, at what price, and how quality is maintained.

Here, the data is not just a personal asset, but a community resource.

Business Model: Raw Data Isn’t Enough

Now the fun part: let’s talk business.

We see a common pattern across the space today: many Web3 teams are obsessed with data collection, but very few know how to turn that data into a real business.

They struggle to articulate how those datasets translate into revenue. The result is often a vague pitch built on speculation.

This mirrors the “build it and they will come” mindset. It rarely works.

To monetize data, you need commercial instincts. What use case is the data powering? Who pays for it? How often? And what else do they need that only you can provide?

You don’t need to train a foundation model to win. You don’t even need to touch the AI stack. You just need to position the data where it matters.

DLP Labs licenses structured vehicle telemetry (battery health, mileage, GPS trails) to insurers and EV ecosystem partners. The value is immediate: better pricing, better policies, better programs. That’s a data business.

The Cost That Kills Most Projects: DAC

One way to think about this is through Data Acquisition Cost (DAC):

What’s the cost of collecting enough high-quality, targeted data to make it valuable?

Apps that purely exist to collect user-generated data often face steep DACs, as they have to offer heavy incentives to bootstrap data contribution.

Two brutal questions arise:

Incentive Sustainability: Will the token economy survive beyond the airdrop era?

Value-to-Cost: Does the lifetime value of the data justify what you paid to get it?

In many cases, no.
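A rough way to run the value-to-cost check, using made-up numbers. The function and its inputs (incentive spend, usable fraction, revenue per contributor) are illustrative assumptions, not a standard formula.

```python
def data_unit_economics(incentive_spend, contributors, usable_fraction,
                        revenue_per_contributor_per_year, retention_years):
    """Compare the cost of acquiring a usable contributor with their lifetime value."""
    usable = contributors * usable_fraction
    dac = incentive_spend / usable                             # cost per usable contributor
    ltv = revenue_per_contributor_per_year * retention_years   # lifetime data revenue
    return dac, ltv, ltv / dac


# Hypothetical campaign: $500K of incentives, 100K sign-ups, 25% usable data.
dac, ltv, ratio = data_unit_economics(
    incentive_spend=500_000,
    contributors=100_000,
    usable_fraction=0.25,
    revenue_per_contributor_per_year=4.0,
    retention_years=2,
)
print(f"DAC ${dac:.2f}, LTV ${ltv:.2f}, LTV/DAC {ratio:.2f}")
```

In this example each usable contributor costs $20 to acquire and returns $8, a ratio well below 1. The campaign loses money unless data quality, pricing, or retention improves.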

The best data businesses don’t ask users to contribute. They build products people want to use, and collect data as a side effect. This radically improves the unit economics, driving DAC toward zero.

Tesla’s Full Self-Driving (FSD) is the canonical case. Users pay for the car. The car generates data. That data loops back to improve FSD, which sells more cars. DAC approaches zero. The data flywheel spins.

In Web3, the strongest data networks will follow a similar logic: lead with utility, not raw incentives. If the product stands on its own, data generation becomes an organic outcome. I like the analogy of a Trojan horse.

Move Up the Stack: Where the Margins Are

Selling raw data is a lower-margin business and quickly becomes commoditized.

The real leverage comes from building on top of it:

Data Pipelines for Specialized Model Training: AI companies need curated data ready to plug into specific AI training workflows. Edge-case radiology scans, regional dialects, rare contractual disputes—these are the kinds of datasets that can move the needle. Networks that deliver this type of structured, provenance-rich data can become essential infrastructure in the AI development loop.

Proprietary AI Applications Built on Exclusive Data: If your network collects unique data, you’re in a position to build applications others simply can’t. Think of intelligent agents tailored to specific industries, trained on interaction patterns you alone can access.

Building Open Data Platforms: Long-term, the biggest opportunity lies in enabling others. Open, composable data ecosystems where developers can tap into shared datasets with baked-in attribution and revenue splits create surface area for emergent use cases. You don’t need to anticipate every use case. You just have to make them possible.

We’re already seeing this materialize. Skyfire is building AI agents powered by real-time vertical data. Others are aggregating biometric streams from wearables. Still more are curating expert datasets in fields where generic models consistently underperform: medicine, law, and scientific research.

💡 One interesting observation:

Data buyers are often enterprises and remain wary of working with Crypto companies. We’ve heard of teams having to scrub out all references to crypto in their materials/socials to get through procurement.

Open Challenges

Scaling decentralized data networks to millions of participants means grappling with a series of hard, unresolved challenges.

1. The Value Paradox: Who Goes First?

Early data networks face a catch-22: contributors want rewards, but data buyers want scale before they commit and sign commercial agreements. You can’t sell access to a dataset that doesn’t exist, and you can’t build one without your contributors. Escaping this cold start requires clear narratives and inventive bootstrapping. Most projects underestimate just how long that takes.

2. Sybil Resistance and Data Quality

When incentives appear, so do exploiters—spam, fake users, low-effort uploads. If volume is rewarded over quality, networks degrade fast. Projects like OpenLedger are experimenting with stake-based validation, where contributors risk losing tokens if their data is flagged. Others rely on reputation scoring. There’s no universal fix, but strong verification is essential to maintain trust.
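The stake-based idea can be sketched as a small ledger. This is a toy model under assumed rules (a minimum stake to submit, a fixed slash fraction when flagged); it does not describe OpenLedger's or any other project's actual mechanism.

```python
class StakeRegistry:
    """Toy stake-and-slash ledger; all rules here are illustrative assumptions."""

    def __init__(self, min_stake=100, slash_fraction=0.5):
        self.min_stake = min_stake
        self.slash_fraction = slash_fraction
        self.stakes = {}

    def deposit(self, contributor, amount):
        self.stakes[contributor] = self.stakes.get(contributor, 0) + amount

    def can_submit(self, contributor):
        # Only contributors with enough at risk may upload data.
        return self.stakes.get(contributor, 0) >= self.min_stake

    def resolve(self, contributor, flagged):
        """Slash part of the stake if validators flagged the submission."""
        if not flagged:
            return 0
        current = self.stakes.get(contributor, 0)
        penalty = int(current * self.slash_fraction)
        self.stakes[contributor] = current - penalty
        return penalty


registry = StakeRegistry()
registry.deposit("honest", 100)
registry.deposit("spammer", 100)
registry.resolve("honest", flagged=False)
slashed = registry.resolve("spammer", flagged=True)
print(registry.can_submit("honest"), registry.can_submit("spammer"), slashed)
```

After one flagged upload the spammer drops below the minimum stake and has to put up more capital to continue, which raises the cost of Sybil attacks. The open question is who does the flagging and how reliably.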

3. Privacy

Without privacy, trust breaks down. Storing raw data on-chain isn’t viable. The solution lies in off-chain encrypted storage, permissioned access via smart contracts, and cryptographic verification.

A growing stack of Privacy-Enhancing Technologies, like TEEs, ZKPs, FHE, and federated learning, makes this architecture feasible.

The core idea: private custody, public verifiability.

4. Discovery

As DataDAOs multiply, discovery becomes a bottleneck. Developers need tools to search, compare, and remix datasets easily. The missing layer is data-native middleware—a kind of GitHub for datasets, with semantic search, APIs, and composability baked in.

Zooming out: The most effective networks won’t try to do everything. They’ll zero in on domains where centralization falls short and users have clear reasons to opt into something better, such as open science, medical research, and education.

What could the future look like?

Now this is my favorite part, because I get to sit back and speculate about the future.

Composable Data Networks

As data becomes tokenized, entirely new markets start to form. Data tokens can be held, traded, or used as collateral.

A hedge fund might take a position on a “Synthetic human genomics” dataset, anticipating demand from biotech labs.

An AI startup might stake its DataDAO tokens to borrow compute.

With shared standards and interoperability, we could see open data exchanges where public, private, and synthetic datasets interact seamlessly. And priced in real time.

Real-Time Data and Feedback Loops

I get frustrated when an AI insists that my dates are wrong simply because it is stuck in the past.

That’s about to change. The next wave of AI systems will learn continuously, adapting in real time. Grass is already integrating live data feeds. Masa pulls updates directly from the web. Dria is building infrastructure to generate synthetic data on demand.

Together, they’re laying the foundation for closed-loop AI systems: models that pull in fresh context as needed, retrain instantly, and evolve continuously, without waiting for the next big update cycle.

AI-Manages-AI

As networks mature, AI agents will start managing data flows directly.

A personal AI derived from ORO might handle your data permissions, deciding what to sell, to whom, and at what price. On the backend, AI validators will score incoming contributions, filter noise, and train models on high-quality synthetic inputs.

Eventually, the network won’t just be human. AI agents will enter the loop. An agent could request data, pay for it, train on it, and sell its outputs. All on-chain, without human intervention.

Further out, we may see autonomous DAOs of AI agents negotiating with each other on-chain, buying and selling data.

Public Infrastructure & Strategic Alliances

Governments and institutions will likely get involved.

Imagine a national research DataDAO where citizens voluntarily contribute health data in exchange for rewards, with strong privacy guarantees. Even cross-network alliances are possible: Grass data integrated into Vana’s user pools, filtered and routed with user consent, blending public and private signals into personalized data layers.

To sum it up: data networks are a step towards a living data economy, where ownership, intelligence, and value flow continuously across humans and machines. Can we manage the risks as agents gain autonomy in this system?

Honestly, I’m still wrestling with that.

Cheers,

Teng Yan

Share your take or a quick summary on X. Tag @cot_research (or me) and we’ll repost it.

P.S. Liked this? Hit Subscribe to get next week’s “Big Idea for 2025” before anyone else.

Bonus: 12 data networks we’re keeping a close eye on

Vana is a decentralized platform that lets users contribute data to AI models while retaining ownership and privacy. Backed by $25 million from investors like Coinbase Ventures, it addresses AI’s data shortage by incentivizing contributions through data DAOs.

OpenLedger is a blockchain network that enables decentralized sourcing, fine-tuning, and monetization of models. It uses Proof of Attribution to ensure fair rewards and offers tools like Model Factory and OpenLoRA to improve transparency and reduce costs in AI development.

DLP Labs focuses on monetizing vehicle data, such as battery health, mileage, and GPS location, through a blockchain platform, rewarding users with the $DLP utility token. It aims to create a marketplace for real-world driving insights.

Tensorplex Labs is a Web3 and AI startup building infrastructure for decentralized AI networks, including liquid staking solutions like stTAO for Bittensor and platforms like Dojo for crowdsourcing human-generated datasets.

Dria is a decentralized network that allows millions of AI agents to collaborate on generating synthetic data to improve AI/ML models, offering scalable tools for dataset creation without extensive compute resources.

Masa operates as a decentralized AI data network, enabling real-time data access for AI applications through the MASA token. It has raised $18 million across funding rounds, integrating with Bittensor subnets for fair AI, and focuses heavily on X data.

Fraction AI decentralizes data labeling for AI models by creating a platform where AI agents compete to generate high-quality labeled data, incentivized through crypto rewards. It raised $6 million in pre-seed funding from Spartan and Symbolic.

Grass is a decentralized network where users contribute unused internet bandwidth to support AI data collection, such as web scraping, earning rewards in Grass Points convertible to tokens. It has over 3 million users.

ORO is a decentralized platform that allows users to contribute data to AI development while maintaining privacy through cryptography like zkTLS and secure compute environments. It uses blockchain for transparent validation and compensation.

Pundi AI is decentralizing AI’s most critical layer: training data. Using on-chain incentives, it transforms data labeling and verification into a global, crowd-sourced effort that ensures high-quality data while rewarding contributors.

Reborn is building an open ecosystem for AGI robots, integrating AI and blockchain to manage data, models, and physical agents. It fosters collaboration in robotics with over 50 million high-quality robotic data points and 12,000+ creators.

Frodobots leverages gaming to crowdsource robotics data for embodied AI research and plans to launch BitRobot, a “Bittensor for Robotics” with $8 million in funding, including a $6 million seed round led by Protocol VC.

This essay is intended solely for educational purposes and does not constitute financial advice. It is not an endorsement to buy or sell assets or make financial decisions. Always conduct your own research and exercise caution when making investments.