The Chain of Thought Decentralized AI Canon is my running attempt to chart where AI and crypto collide, overlap, and fuse into something new.

Each Canon zooms in on a different layer of this economy (trust, data, cognition, coordination, robotics) and then pans out to show how they connect into a single system of intelligence and incentive.

The Canons shift as the terrain does. New essays, data, and debates reshape the map every time we learn something worth revising.


TL;DR

Compute is emerging as crypto’s next primitive: scarce, verifiable, and accessible without permission.

Decentralized compute networks (DCNs) will fill the gap as AI demand outpaces centralized supply, especially for marginal and cost-sensitive workloads.

DCNs source GPUs from small data centers, crypto miners, and individuals. Competitive pricing and token incentives make them well-suited for inference workloads.

Inference-first platforms like Inference.net and Chutes are gaining ground by offering better performance and usability. General-purpose networks serve broader use cases.

The opportunity is real, but demand is still early.

Most DCNs face five structural hurdles: coordination, developer experience, technical reliability, compliance, and economic sustainability. Fixing these is NOT optional.

Critical use cases: hosting open-source AI models and running truly sovereign autonomous AI agents.

Developers still care about two things: cost and reliability. The networks that can abstract away the mess underneath—and prove they work—will win.

Crypto’s first act was money.

Bitcoin made it uncensorable. Ethereum made it programmable. These were breakthroughs, but they also defined the shape of what followed: finance-first systems with logic layered on top.

But what if the next core primitive isn’t a new currency?

This is our next big idea in AI & Crypto:

Compute becomes a new primitive: Scarce, Verifiable, and Liquid.

When we say “primitive”, we mean something raw and enabling. Like land. Or storage. For compute to qualify, it has to break free of centralized coordination and become a resource anyone can access, use, and build on without permission.

Decentralized compute networks will be the place where that shift takes root.

The pressure is coming from AI. Demand is compounding faster than the supply chains can adapt.

Surging Demand for Compute

We’re not just in a GPU cycle. We’re in a global reallocation of compute.

In 2024, NVIDIA shipped more than 3.7 million datacenter GPUs. GB200s & H100s are moving faster than fabs can produce. The company is planning to triple output this year. Demand is still way ahead of supply.

Cloud infrastructure spending hit $330 billion last year. AI is now the primary growth driver for AWS, Azure, and Google Cloud. The economics are shifting upstream. Gartner expects generative AI spending to reach $644 billion in 2025, with the majority going toward infrastructure and hardware.

The physics of scaling is breaking. McKinsey estimates that by 2030, generative AI will require 2.5 × 10³¹ FLOPs per year. That’s an order-of-magnitude event. All of this has to be powered and cooled.

Goldman Sachs thinks global data center power consumption will grow 160% by 2030. The IEA forecasts 945 TWh, nearly Japan’s total electricity usage. And it’s not just watts: it’s land, latency, heat, supply chains, compliance.

Even as unit costs fall—$ per PFLOP (training) or $ per million tokens (inference) are dropping fast—the total demand for compute is ballooning.

Jevons’ paradox shows up again: greater efficiency leads to more use. Better models mean more apps, which means more usage, which means more strain on infrastructure.
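A quick back-of-the-envelope sketch makes the mechanism concrete. The numbers below are purely illustrative assumptions (a 10x drop in unit price paired with a 30x rise in usage), not figures from any of the sources cited above.

```python
# Illustrative only: both the price drop and the usage growth are made-up
# assumptions chosen to show the mechanism, not measured data.

def total_spend(price_per_million_tokens: float, tokens_millions: float) -> float:
    """Total spend in dollars = unit price x volume."""
    return price_per_million_tokens * tokens_millions

# Before: $10 per million tokens, 1,000 million tokens served.
before = total_spend(price_per_million_tokens=10.0, tokens_millions=1_000)
# After: price falls 10x, usage grows 30x.
after = total_spend(price_per_million_tokens=1.0, tokens_millions=30_000)

print(f"before: ${before:,.0f}")
print(f"after:  ${after:,.0f}")
print(f"spend multiple: {after / before:.1f}x")
```

Under these assumed numbers, total spend triples even though each token is ten times cheaper. Whether real usage grows faster than prices fall is an empirical question; the sketch only shows why falling unit costs and rising total demand are not in conflict.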

Compute is becoming more strategic. And more unevenly distributed. That’s where crypto-native systems start to matter.

Here’s how we see the compute demand curve evolving:

Right now, demand for decentralized compute is small. But we don’t think it stays that way. As pressure builds, the curve shifts. At some point, DCNs start taking a real share of global workloads.

The rest of this piece sketches out why we think that shift is coming.

The Old Model: Compute as a Managed Service

Today you don’t own your compute. You lease it. On someone else’s terms.

If you’re training or running AI models today, where do you go?

Hyperscalers (AWS, Azure, GCP) still control the high ground. They offer scale and tight integration. Large enterprises lean on them for their security, reliability, and deep integrations. For better or worse, they remain the default for most teams.

Neoclouds offer a tactical alternative. Newer companies like CoreWeave and Lambda promise lower costs and faster access, optimized for AI workflows. They run their own hardware, cut out middlemen, and pass the savings along. An H100 that costs $4–$5 per hour on AWS might run at half that on a neocloud. But they are centralized.

Then there’s decentralized compute. I hesitate to mention this in the same list because very few people use this today. Unlike the others, this model lets anyone contribute compute power into a global, permissionless pool. You might end up training your model using resources from a gamer’s rig in São Paulo or a researcher’s workstation in Berlin.

This is where compute starts looking less like a utility bill and more like a primitive.

Decentralized Compute Networks (DCN)

If compute is to become a true primitive, it needs a mechanism that lets it be pooled, priced, and accessed without permission.

Decentralized Compute Networks (DCNs) are this mechanism.

The idea is simple enough: a marketplace where anyone with spare computing power can rent it out to others, without the markups of traditional cloud providers.

Supply comes from three primary sources:

Small and mid-sized data centers equipped with enterprise-grade GPUs (H100s+) but limited customer reach

Former crypto miners with warehouses of idle GPU rigs.

Individuals offering up spare capacity from gaming setups or professional workstations.

The core advantage is price. Unlike fixed-rate hyperscalers, decentralized networks rely on competitive bidding. Pricing adjusts in real time. Providers compete for jobs. That alone drives costs down. Add token incentives, and the economics shift even further.

The result:

GPU time can be 20 to 80 percent cheaper than AWS

Token rewards help subsidize usage and bootstrap liquidity on both sides

For startups and independent teams, the delta is more than just savings. It’s access, scalability, and a much-needed escape valve from hyperscaler constraints.
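To make the bidding mechanism concrete, here is a minimal sketch of a reverse auction, assuming a simple lowest-price-wins rule among providers that offer the GPU a job requires. The `Bid` type, the `pick_winner` function, the provider names, and the prices are all hypothetical; real networks layer reputation, staking, and latency on top of price.

```python
from dataclasses import dataclass
from typing import Optional

@dataclass(frozen=True)
class Bid:
    provider: str          # hypothetical provider name
    gpu_model: str         # hardware the provider is offering
    price_per_hour: float  # asking price in USD per GPU-hour

def pick_winner(bids: list[Bid], required_gpu: str) -> Optional[Bid]:
    """Return the cheapest bid offering the required GPU, or None if no bid qualifies."""
    eligible = [b for b in bids if b.gpu_model == required_gpu]
    return min(eligible, key=lambda b: b.price_per_hour, default=None)

# Made-up bids, for illustration only.
bids = [
    Bid("datacenter-a", "H100", 2.40),
    Bid("miner-b", "RTX 4090", 0.45),
    Bid("datacenter-c", "H100", 2.10),
]

winner = pick_winner(bids, required_gpu="H100")
print(winner)
```

The point of the sketch is that price is discovered per job rather than set by a rate card: a provider that wants the work has to undercut the others that can serve it.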

The model is already gaining traction:

On the lower-end GPU side, Spheron has aggregated 500K CPU threads and 7K GPUs.

Render Network is supplying compute for Stability AI’s training runs

Inference.net (formerly Kuzco) and Atoma are targeting inference workloads as lower-cost, decentralized alternatives to OpenAI.

General-purpose DCNs like Io.net, Akash, and Spheron go broad. They offer compute for everything from web hosting to gaming to AI. Versatile, but not specialized.

Inference-focused DCNs like Inference.net (doh!) and Chutes take a narrower path. Their entire stack is optimized for a single type of workload: running AI models with low latency and predictable cost.

| | General Marketplaces | Inference Marketplaces |
| --- | --- | --- |
| Focus | Provide compute for various applications (AI, gaming, hosting, etc.) | Specialized for AI inference |
| Target Use Cases | Serve businesses needing diverse compute | Deploying and running AI models |
| Optimizations | Offer generic hardware/software configurations; may require setup for AI | Provide APIs, tools for AI frameworks, and optimizations for low-latency inference |
| Cost & Efficiency | Cost-effective for general tasks; may not optimize for AI inference specifically | Likely offers better cost-performance for AI inference |

Inference, not training, is the beachhead

If compute is going to establish itself as a true primitive, it needs a practical entry point. Inference is that entry point.

Training still depends on the tightly coupled, high-bandwidth environment of a data center, which is a hard constraint for anything aiming to be decentralized.

Inference is a different story.

It’s lighter. More forgiving. Less sensitive to hardware differences. Once a model is trained, the challenge becomes throughput, not coordination. That opens the door to a more flexible architecture.

You don’t need NVLink. You don’t need custom fabrics. Inference can run on a workstation, a few older GPUs, or even a high-end laptop. Especially as smaller, distilled models become more capable.

Three factors are fast converging:

Consumer hardware is good. Optimized models can run inference on gaming rigs or even mobile devices, and Apple’s latest Macs with the M4 Pro chip handle local models well.

AI models are getting smaller yet better.

Edge inference is growing. For real-time applications like AR, gaming and personal assistants, local compute reduces latency and keeps data close.

New reasoning models are pushing this shift even faster.

OpenAI’s o1 and o3 systems generate reasoning traces alongside outputs, inflating both token counts and GPU time. A single answer now consumes orders of magnitude more compute. Early estimates suggest o1 costs 25 times more to run than GPT-4.

That changes the economics. As demand scales, so do costs. Centralized inference starts to show strain, while decentralized networks are showing promise:

Exo Labs runs Llama 3.1 405B, a 405-billion-parameter model, across MacBooks and Mac Minis.

Inference.net serves inference at up to 90 percent lower cost than traditional providers like Together.ai.

Inference workloads are frequent, distributed, and expensive. That combination makes them the perfect wedge.

The DCN market is already adapting to this strategic focus.

🦄 Not all compute is equal!

We throw around terms like GPUs and FLOPs as if they’re interchangeable. But the context matters.

A model might run the same way on AWS and on a decentralized network. The output looks identical. But the trust model underneath is completely different.

Decentralized compute offers guarantees that hyperscalers can’t: resistance to censorship, user-controlled data, and infrastructure that operates without a single point of control.

That should come with a premium. Today, it doesn’t. Most developers still optimize for price, not control.

That will change. As regulatory pressure grows, and as more workloads need guarantees that central providers can’t offer, the value shifts. Slowly, then structurally. And eventually, so does the pricing.

But: Demand is Lacklustre Today

Supply isn’t the issue. Token incentives do a decent job attracting providers. The harder part is getting serious AI teams to trust these networks with production workloads.

Here’s a snapshot of how a few compute networks are performing, using actual spend (demand) as the baseline.

Akash

Akash has a daily USD spend on compute jobs of $11K (or ~$4M annualized). Akash charges a fee for every action on the network.

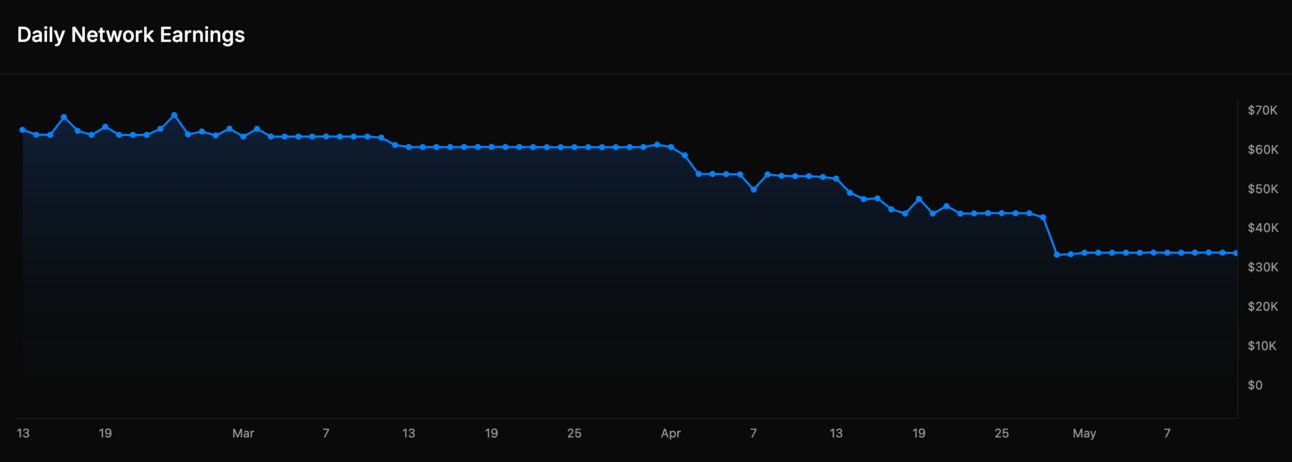

Io.net

Io.net has daily network earnings of $33K for compute providers (or ~$12M annualized). Io.net charges a 2% fee on USDC payments.

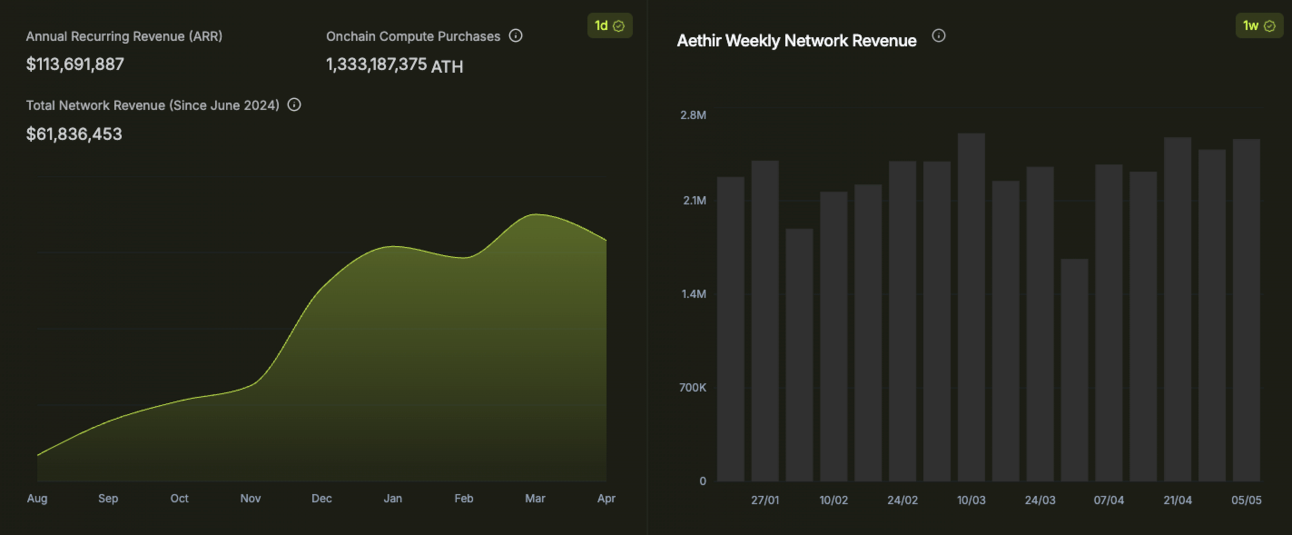

Aethir

Aethir appears to show strong demand for compute with ~$2M in weekly revenue (~$110M annualized). None of this is verifiable on-chain, though. From what we understand, Aethir focuses on matching supply to enterprise clients.

Spheron

We want to commend Spheron for putting its protocol revenue metrics on-chain and verifiable. Every DCN should be following their lead.

From 28 April to 19 May, the protocol earned a cumulative $18K (~$300K annualized).

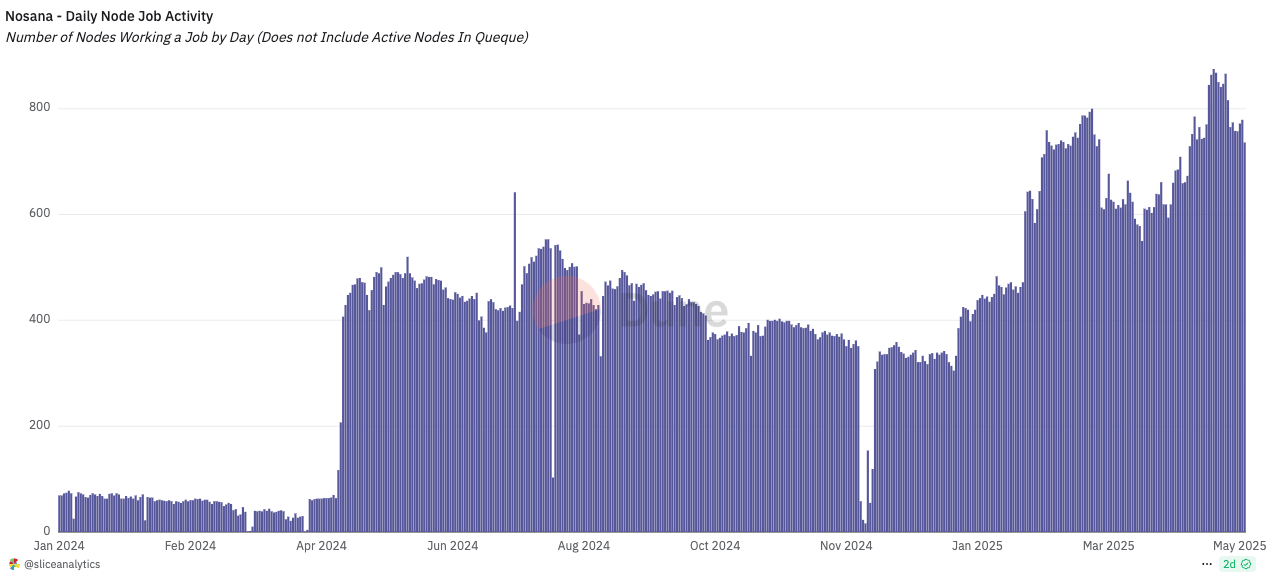

Nosana

Nosana shows a growing number of daily nodes with a working job, although the base is still small (<1,000 nodes).

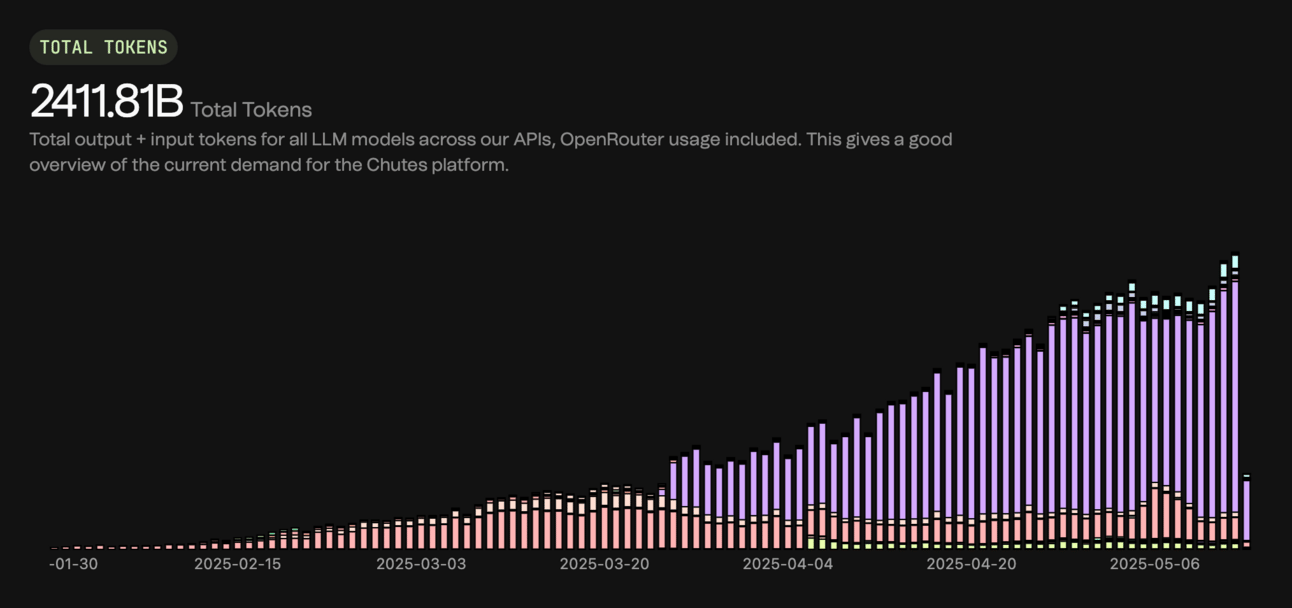

Chutes

Chutes, an inference network on Bittensor, serves ~67B tokens per day. Assuming an average price of $0.30 per million tokens, that’s roughly $20K/day (~$7M annualized).

Current revenue for decentralized compute networks is a drop in the ocean relative to global compute demand.
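The annualized figures in this snapshot are straight-line extrapolations. Here is a minimal sketch of that arithmetic, using only the numbers quoted in this section. The helper name is ours, and the Spheron window is assumed to be 21 days (28 April to 19 May).

```python
DAYS_PER_YEAR = 365

def annualize(amount_usd: float, period_days: float) -> float:
    """Straight-line extrapolation of revenue over a period to a full year."""
    return amount_usd / period_days * DAYS_PER_YEAR

# Chutes: ~67B tokens/day at an assumed average of $0.30 per million tokens.
chutes_daily = 67e9 / 1e6 * 0.30

snapshot = {
    "Akash": annualize(11_000, 1),     # $11K per day
    "Io.net": annualize(33_000, 1),    # $33K per day
    "Spheron": annualize(18_000, 21),  # $18K over an assumed 21-day window
    "Chutes": annualize(chutes_daily, 1),
}

for name, usd in snapshot.items():
    print(f"{name:8s} ~${usd / 1e6:.1f}M per year")
```

Straight-line annualization ignores growth, seasonality, and token-price swings, so these are order-of-magnitude markers rather than forecasts.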

We can infer two things from this gap:

There are real barriers to adoption, even though DCNs are cost-competitive.

Even a modest gain in share of global compute demand (say, a few percent) would multiply these networks’ revenue many times over, since they’re starting from such a low base.

Despite this, tokens for some of these networks already trade at nine-figure valuations. Investors are pricing in future capture, expecting these networks to expand significantly in the coming months.

Where DCNs Sorely Need to Improve

Adoption remains slow, especially among enterprises, which account for most of the demand for compute.

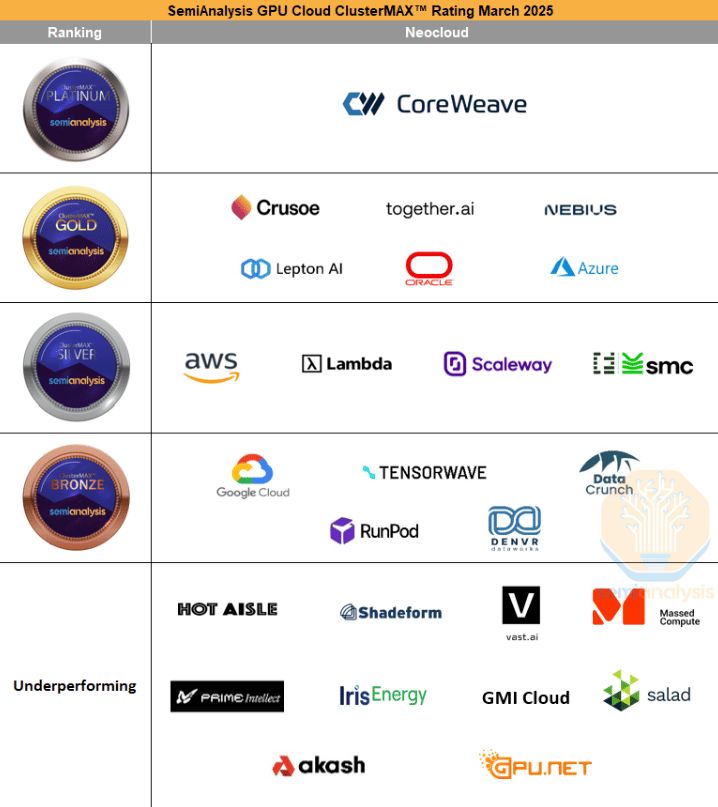

In SemiAnalysis’ recent GPU Cloud rankings (March 2025), only two decentralized platforms, Akash and Prime Intellect, appeared at all. Both were listed at the bottom, marked as underperforming. The rest didn’t even make the cut.

That’s a useful reality check. It also highlights how early the sector still is, and how much room there is to improve and grow.

Five key challenges limit demand for DCNs today:

Coordination/Orchestration

Subpar Developer Experience

Poor Security & Technical reliability

Trust & Compliance

Economic sustainability

1. Coordination Is the Constraint

According to SemiAnalysis, ~90% of enterprise teams prefer Kubernetes for inference workloads. Half use Slurm for training. The top GPU providers know this, and they’re winning by offering fully managed, production-ready environments for both.

DCNs need to do the same.

Hyperscalers and neoclouds have an advantage here. They control their data centers. They manage the hardware and the networking. Decentralized compute networks don’t have that luxury.

On paper, the model looks simple: plug into idle supply, route jobs, reward contributors. But in practice, it’s less about liquidity and more about orchestration.

Having a few thousand GPUs in the network looks good on a dashboard. But turning that into usable infrastructure requires much more than availability. You need those machines to behave like a coherent system, not a collection of parts. At scale, that’s where things break.

Three things need to work in sync:

Discovery and matchmaking between job requests and compatible hardware

Payment and incentives, often tokens or stablecoins routed through smart contracts.

Workload orchestration, including data transfer, error handling, and node reliability

Most infrastructure is built on the assumption that machines are known, trusted, and consistent. That falls apart when every node is an edge case.

Even in tightly controlled environments, this is hard. Kubernetes came out of over a decade of internal work at Google. Running it at scale still requires active engineering. Now imagine trying to coordinate AI inference across a fleet of machines you don’t own, run by people you’ve never met.

The cracks show quickly. Sync issues slow everything down. Faulty nodes silently fail. And verifying that a node actually did the work it claims? That’s still unresolved.

That’s why many teams are quietly reintroducing some degree of centralization. A designated scheduler. A curated node pool. A fallback system for when things break. It’s not a philosophical win, but it keeps the system running. And most users would rather have stability than ideology.

If a decentralized network ever does build a Slurm-compatible orchestration layer (with node staking, workload replication, and fault-tolerance baked in), it would be a breakthrough.

Solve orchestration, and decentralized compute becomes a viable substrate.

2. Security & Technical Reliability

When multiple customers use the same GPU hardware, it’s crucial to keep them isolated from one another so no one can spy or hack into someone else’s environment.

Only a few DCNs hold basic SOC 2 or ISO 27001 security certifications. The lack of certification alone makes most of them a non-starter for many enterprises.

Centralized providers benefit from fast interconnects, handpicked hardware, and full control over every layer. Decentralized systems operate without those advantages.

Networking and storage are common pain points. Many DCNs fall short on speed and lack optimized storage paths. Few have proper active and passive health checks in place, so it’s hard to guarantee that GPUs are functioning efficiently at any given time.

The result is uneven performance. Uptime varies. Latency spikes. Bandwidth becomes a constraint. And training jobs can fail without warning or clear diagnostics.
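To illustrate what a passive health check could look like, here is a minimal sketch that marks a node unhealthy after a run of consecutive failed jobs. The class name, the threshold, and the node IDs are hypothetical. "Passive" here means the check only observes real job outcomes; an active check would also send synthetic probe jobs on a schedule.

```python
from collections import defaultdict

class PassiveHealthCheck:
    """Mark a node unhealthy after `max_fails` consecutive failed jobs."""

    def __init__(self, max_fails: int = 3) -> None:
        self.max_fails = max_fails
        # node_id -> current streak of consecutive failures
        self.consecutive_fails: dict[str, int] = defaultdict(int)

    def record(self, node_id: str, ok: bool) -> None:
        # A success resets the streak; a failure extends it.
        if ok:
            self.consecutive_fails[node_id] = 0
        else:
            self.consecutive_fails[node_id] += 1

    def is_healthy(self, node_id: str) -> bool:
        return self.consecutive_fails[node_id] < self.max_fails

check = PassiveHealthCheck(max_fails=3)
for ok in [True, False, False, False]:  # node-a fails three jobs in a row
    check.record("node-a", ok)
check.record("node-b", True)

print(check.is_healthy("node-a"), check.is_healthy("node-b"))
```

A scheduler could use a signal like this to stop routing jobs to a flaky node before customers notice, which is the kind of guardrail managed clouds provide by default.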

Integrity remains a live question. If you send data to a third-party node, how do you know the result is valid? How do you prove the compute was actually performed?

There are a few paths being tested. Trusted execution environments can encrypt the workload itself. Reputation and staking models try to align incentives and punish bad behavior.
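Another simple approach, in the spirit of the workload replication mentioned earlier, is redundant execution: send the same job to several nodes and accept the result most of them agree on. The sketch below assumes deterministic jobs and uses hypothetical stand-in node functions. It illustrates the idea only and is not how any network named here verifies work.

```python
import hashlib
from collections import Counter
from typing import Callable, Optional

# Hypothetical model of a node: a function from job input to job output.
Node = Callable[[bytes], bytes]

def digest(output: bytes) -> str:
    """Fingerprint an output so results can be compared cheaply."""
    return hashlib.sha256(output).hexdigest()

def verify_by_replication(job: bytes, nodes: list[Node], quorum: int) -> Optional[bytes]:
    """Run the job on every node and return the output that at least
    `quorum` nodes agree on, or None if no output reaches the quorum."""
    if not nodes:
        return None
    outputs = [node(job) for node in nodes]
    counts = Counter(digest(o) for o in outputs)
    top_digest, votes = counts.most_common(1)[0]
    if votes < quorum:
        return None
    return next(o for o in outputs if digest(o) == top_digest)

def honest(job: bytes) -> bytes:   # stand-in for a node that does the work
    return job.upper()

def faulty(job: bytes) -> bytes:   # stand-in for a node that returns junk
    return b"garbage"

result = verify_by_replication(b"hello", [honest, honest, faulty], quorum=2)
print(result)
```

The trade-off is cost: every replica is paid compute, so replication eats directly into the price advantage. That is one reason hardware-based and incentive-based approaches are being explored alongside it.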

3. Developer Experience

DCNs take a different approach from traditional clouds, but they still need to be simple to use.

The mental model should be: spin up, serve, scale. That’s how AWS works. Most decentralized platforms aren’t there yet.

Tooling is fragmented, on-chain payments confuse new users, and migrating data from centralized clouds can rack up hidden costs. None of this helps adoption.

Real usability means:

Instant compatibility with PyTorch, TensorFlow, and Hugging Face

Clean, intuitive interfaces

API access and compatibility with OpenAI APIs

Without this, the cost savings stay theoretical for most developers.

This is improving. Io.net and Spheron now offer more polished developer dashboards. Atoma and Inference.net expose OpenAI-compatible APIs so developers can swap in decentralized inference with minimal code changes.
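In practice, "OpenAI-compatible" means the request shape stays the same and only the base URL and API key change. Here is a minimal sketch using only the Python standard library. The base URL, API key, and model name are placeholders, not real endpoints of any network mentioned here, and the request is built but never sent.

```python
import json
import urllib.request

def build_chat_request(base_url: str, api_key: str, model: str, prompt: str) -> urllib.request.Request:
    """Build a chat-completions request in the OpenAI wire format."""
    body = {
        "model": model,
        "messages": [{"role": "user", "content": prompt}],
    }
    return urllib.request.Request(
        url=f"{base_url.rstrip('/')}/chat/completions",
        data=json.dumps(body).encode("utf-8"),
        headers={
            "Authorization": f"Bearer {api_key}",
            "Content-Type": "application/json",
        },
        method="POST",
    )

# Swapping providers is a configuration change: point base_url somewhere else.
req = build_chat_request(
    base_url="https://dcn.example.invalid/v1",  # placeholder endpoint
    api_key="YOUR_API_KEY",                     # placeholder key
    model="example-open-model",                 # placeholder model name
    prompt="Hello",
)
print(req.get_method(), req.full_url)
```

Because the application code does not change, a team can trial a decentralized provider on a slice of traffic and fall back to its existing provider if latency or reliability disappoints.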

4. Trust and Compliance

Larger organizations want formal Service-level Agreements (SLAs) with 99% uptime, legal accountability, and enterprise-grade customer support.

Data compliance adds another layer. If a network can’t offer clear policies around data handling, especially GDPR or HIPAA in affected regions, enterprises won’t take the risk.

Some networks are trying to adapt. Aethir, for example, is building infrastructure with provider KYC and audit trails to make compliance possible. That helps build credibility, but it also chips away at decentralization. This is the core tension. Networks will have to pick a lane, or at least make that tradeoff legible to users.

5. Economic Sustainability

Right now, the engine for DCNs is token incentives. Networks subsidize participation with points or tokens to grow fast. It works…until it doesn’t.

When token prices fall or emissions dry up, supply vanishes. That fragility won’t support real workloads. Durable networks need demand that persists without incentives, providers who stay online for real cash flow, and a token model that doesn’t destabilize the entire system every six months.

Some protocols are starting to rework incentives. Most haven’t figured it out yet.

The Silver Lining

Decentralized compute doesn’t need to out-engineer AWS. It just needs to be good enough: cheap, usable, and reliable within a margin.

Today, DCNs are best suited for hosting open-source models, where flexibility and price matter more than tight integration.

For teams running inference or batch jobs, price is often the limiter. If a platform delivers stable performance and easy integration at lower cost, it sticks. Once it’s in the workflow, switching becomes inertia-bound.

So the immediate goal for DCNs is simple:

Be usable. Be reliable. Be cheaper.

With the right momentum, decentralized compute could scale quickly. Two shifts will push adoption forward.

First, new use cases. If DCNs can support multi-node, decentralized training with minimal overhead, that unlocks one of the hardest problems outside centralized clouds. Autonomous AI agents will also need constant access to compute. DCNs are a natural fit.

Second, inference demand is already outpacing training. As AI shows up in more apps, inference volume keeps climbing. Centralized infrastructure may not keep pace. That opens the door for cheaper, more flexible options, where decentralized compute has the edge.

Glimpsing the Future Ahead

Jensen Huang has said AI demand could rise by a factor of a “billion”. That’s not hyperbole. It’s a directional bet for his entire company.

The next phase of AI will demand more compute than any single provider can supply. Even with NVIDIA scaling production, the need for GPUs will likely continue to outpace availability, driven by AI’s expansion across every sector.

This isn’t new. Every compute cycle—mainframes, personal computers, mobile—hit the wall on centralized infrastructure. The response has always been the same: distribute it. Build new incentives. Let markets coordinate.

DCNs are in position to absorb some of that overflow. Not all of it, and not everywhere. But they can serve the margins: workloads hyperscalers can’t support, or won’t price competitively.

A hybrid future seems likely. A few decentralized platforms find product-market fit for specific jobs. They compete, or integrate, with traditional cloud providers.

Will the market stay fragmented? Or will a meta-platform emerge to abstract multiple networks into a single interface? History suggests aggregation wins eventually. But right now, the field is still open.

🍎 What to watch as Compute becomes the next primitive:

Are networks showing verifiable on-chain revenue, not just listing idle GPUs?

Who’s publishing NCCL benchmarks or outlining SOC 2 timelines? That signals real attention to performance and security.

Is the developer experience getting better with clean UIs and better orchestration?

Are they starting to win enterprise trust with SLAs and uptime guarantees?

Ultimately, most developers care about two things: cost and reliability. Everything else is friction. The networks that can hide the complexity will win.

One thing hasn’t changed: supply is easy. Demand is the hard part.

Cheers,

Teng Yan


Bonus: 10 Decentralized Compute Networks we’re keeping a close eye on

Spheron offers programmable GPU clusters that can spin up containers for LLMs or agent back-ends in minutes, charging in USDC while rewarding node operators in its native SPE token. The protocol positions itself as a cheaper alternative to hyperscalers for autonomous-agent compute pipelines.

→ We wrote a deep dive on Spheron here

Chutes is a Bittensor subnet focused on serverless AI compute and high-throughput inference, letting builders deploy open-source models without managing infrastructure. Its operators, managed by Rayon Labs, regularly rank among the top TAO-earning miners across all Bittensor subnets.

Hyperbolic is building an “open-access AI cloud” by federating idle GPUs and exposing them through a serverless inference layer. Users can launch jobs or resell capacity, while the platform handles scheduling, payments, and reputation scoring.

Atoma is building a decentralized hyperscaler for open-source AI on the Sui blockchain. It offers private, verifiable inference using Trusted Execution Environments, with OpenAI-compatible APIs and SDKs for integrating scalable, privacy-preserving compute into Web3 apps.

→ We wrote a deep dive on Atoma here

Bless Network turns idle consumer devices into a globally shared edge computer, rewarding users for donating GPU cycles to AI and data-processing jobs. It’s a newer entrant, with a lightweight browser extension that makes sharing compute almost frictionless.

Akash Network runs a permissionless marketplace where cloud providers auction surplus CPU / GPU capacity and developers deploy Docker containers at prices often below AWS spot rates. The chain’s on-chain bidding and settlement flow lets workloads migrate automatically to the lowest-cost node while providers earn AKT for uptime and performance.

io.net aggregates idle GPUs into a Solana-based “Internet of GPUs” that AI teams can rent through an API resembling traditional cloud SDKs. Recent funding by Hack VC and Solana Labs backs its plan to scale toward tens of thousands of nodes for large-scale training and inference.

Aethir provides a distributed GPU cloud aimed at real-time workloads like AAA cloud gaming and generative-AI inference, routing jobs to the nearest edge node for lower latency. Its architecture pools enterprise-grade NVIDIA cards from data-center partners and community hosts under a single pay-as-you-go interface.

Inference.net (previously Kuzco) operates a distributed GPU cluster on Solana where contributors earn KZO points for serving LLM inference workloads via an OpenAI-compatible API. The network reports thousands of active nodes and has doubled daily payouts since mid-2024 as more GPUs come online.

Nosana connects AI teams to thousands of GPUs worldwide through a Solana-based marketplace that auto-matches jobs with the cheapest available node. Its roadmap includes inference-specific optimizations and on-chain telemetry so users can audit performance and reliability scores before renting.

Thanks to Prashant from Spheron for reviewing and sharing his feedback.

This essay is intended solely for educational purposes and does not constitute financial advice. It is not an endorsement to buy or sell assets or make financial decisions. Always conduct your own research and exercise caution when making investments.