The Chain of Thought Decentralized AI Canon is my running attempt to chart where AI and crypto collide, overlap, and fuse into something new.

Each Canon zooms in on a different layer of this economy (trust, data, cognition, coordination, robotics) and then pans out to show how they connect into a single system of intelligence and incentive.

The Canons shift as the terrain does. New essays, data, and debates reshape the map every time we learn something worth revising.

Subscribe if you want to follow this live experiment in mapping the intelligent economy.

Let’s begin with our view:

Decentralized training is the most ambitious moonshot in AI x Crypto right now.

It challenges the assumption that only a few well-funded labs can build and control large models.

If it works, it weaves cryptography and blockchains directly into the foundation of the AI stack. At that point, the rest of the world has to pay attention.

We will explore two core ideas here:

How large AI models can be trained across decentralized networks, and why that matters

Tokenization of AI models

We’re moving into a phase where you don’t just use an AI model. You can help train it. You can own a piece of it. On-chain, with others.

The chart above shows several decentralized training runs, each using different datasets and with different goals. It’s not a direct comparison (different model types and setups), but the overall trend is clear.

Model size is scaling steadily, and the curve is moving up and to the right.

Small note: Most of them still rely on whitelisted contributors, so they aren’t fully open or permissionless yet.

Part I: Decentralized training

The core idea is simple: Building frontier-scale models without relying on centralized infrastructure.

Instead of routing everything through a single, trusted compute cluster, training is distributed across a permissionless network, where coordination, communication, and trust become first-class problems.

Sam Lehman from Symbolic Capital makes the distinction clearly in his article on decentralized training:

“Truly decentralized training is training that can be done by non-trusting parties.”

So… “non-trusting parties” is the really important and complicated bit.

In true decentralized training, any node can join the training run. It doesn’t matter whether it’s a rack in a data center or a single GPU in your home basement.

Recently, a surge of new research and builder energy has been pushing the limits of what decentralized training can achieve.

What Has Happened in the Past 3 Months

Nous Research pre-trained a 15B parameter model in a distributed fashion and is now training a 40B model.

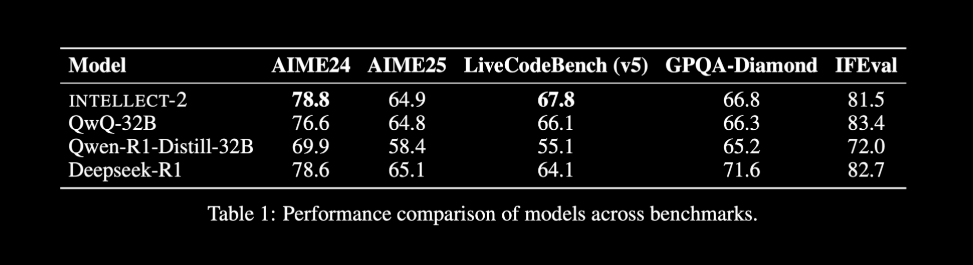

Prime Intellect fine-tuned a 32B Qwen base model over a distributed mesh, outperforming its Qwen baseline on math and code.

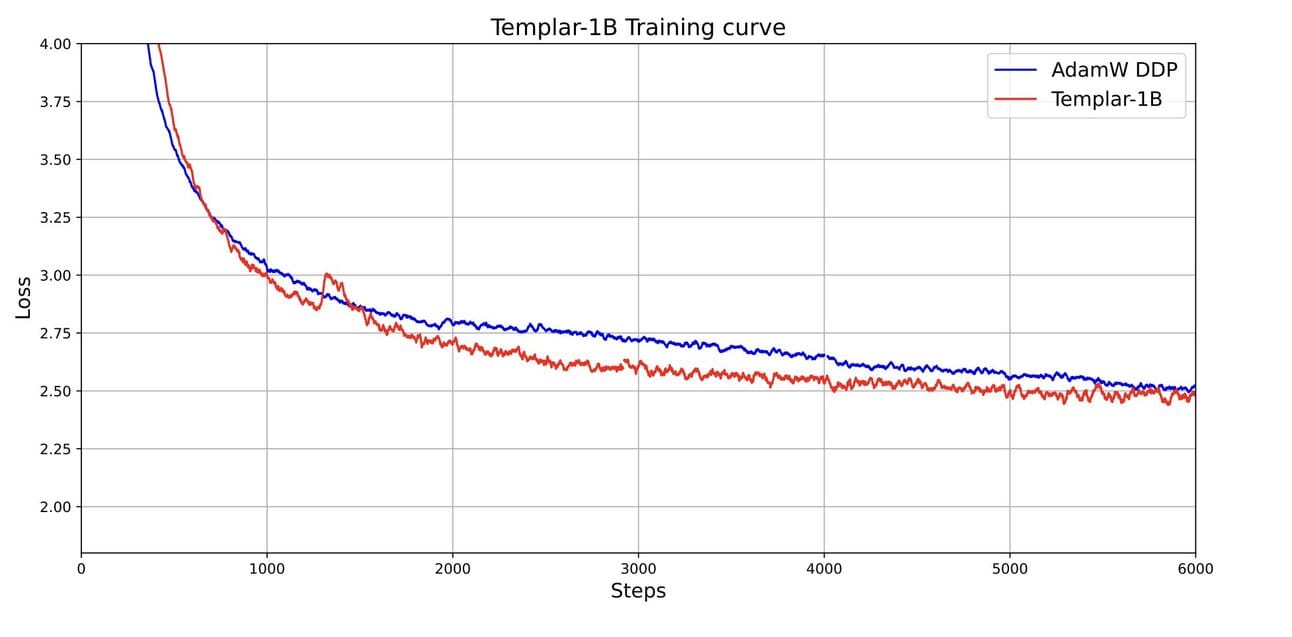

Templar trained a 1.2B model from scratch using token rewards. Early-stage loss was consistently lower than centralized baselines.

Pluralis showed that low-bandwidth, model-parallel training (once thought impossible) is actually quite feasible.

These wins remind us that decentralized training is no longer a thought experiment.

So far, progress has clustered in the 10 to 40 billion parameter range, which suggests we are approaching the limits of what data parallelism can efficiently achieve over open, decentralized networks.

Scaling beyond this range, toward 100B or 1T+ parameter models trained from scratch, will likely depend on model parallelism, which brings challenges that are an order of magnitude harder.

To understand what’s holding back larger runs, we need to unpack the three main constraints and how parallelism is used.

The Holy Trinity of Decentralized Training

To scale, decentralized networks need to solve what we call the “holy trinity” of design constraints:

1. Coordination

Who decides what each node trains, where, and when? How do you handle nodes dropping in and out?

In centralized training, coordination is simple. A scheduler decides what gets trained, on which machine, and when. If a node crashes, the system either restarts or reroutes. Everything assumes reliability and control.

Decentralized training doesn’t have that luxury. Nodes are scattered across networks, running on different hardware, and prone to dropping in and out at any time. This is one of the core challenges decentralized training protocols are solving.

Prime Intellect’s Orchestrator (https://www.primeintellect.ai/blog/intellect-2-release)

Prime Intellect’s orchestrator tackles this by assigning tasks across a global mesh, tracking participation, and recovering from node failures without restarting the entire system. It works alongside PCCL, their communication layer that keeps machines in sync even when some go offline. New nodes can join in mid-training, fetch the latest state, and start contributing without slowing down the rest of the network.

Nous Psyche follows a similar logic. The coordinator acts as an authority for the state of the training run and lives on-chain in a smart contract. Nodes join and leave between epochs, and the system reshapes itself around what’s available in real time.

The degree of decentralization of the coordinator itself can vary and remains a key design choice. Relying on a central coordinator opens the door to critiques that the system isn’t truly decentralized, and not every project does so.

Gensyn takes a peer-to-peer approach. With their RL swarm, nodes train their own base models while still influencing one another. They can learn from other models and shape other models’ learning as well. Eventually, the best ones can be selected or distilled. In future iterations, they may enable direct exchange of gradients.

In all cases, the core challenge is the same: how to keep training coherent when the system is inherently unstable. Every solution balances structure and flexibility in a different way.
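To make the epoch-boundary pattern concrete, here is a minimal sketch. It is not Psyche’s or Prime Intellect’s actual code; `Coordinator`, `request_join`, `request_leave`, and `start_epoch` are hypothetical names. Membership changes are queued during an epoch and applied only between epochs, after which data shards are re-dealt across whoever is present.

```python
from dataclasses import dataclass, field


@dataclass
class Coordinator:
    """Toy coordinator: queues joins/leaves and applies them between epochs."""
    num_shards: int
    active: list = field(default_factory=list)
    pending_join: list = field(default_factory=list)
    pending_leave: set = field(default_factory=set)
    epoch: int = 0

    def request_join(self, node_id):
        self.pending_join.append(node_id)

    def request_leave(self, node_id):
        self.pending_leave.add(node_id)

    def start_epoch(self):
        """Apply queued membership changes, then deal shards round-robin."""
        self.active = [n for n in self.active if n not in self.pending_leave]
        self.active.extend(n for n in self.pending_join if n not in self.active)
        self.pending_join.clear()
        self.pending_leave.clear()
        if not self.active:
            raise RuntimeError("no active nodes to train on")
        self.epoch += 1
        assignment = {n: [] for n in self.active}
        for shard in range(self.num_shards):
            assignment[self.active[shard % len(self.active)]].append(shard)
        return assignment


coord = Coordinator(num_shards=6)
for node in ("a", "b", "c"):
    coord.request_join(node)
first = coord.start_epoch()   # three nodes, two shards each

coord.request_leave("b")      # "b" drops out and "d" shows up mid-epoch
coord.request_join("d")
second = coord.start_epoch()  # shards are re-dealt across a, c, d
```

A real coordinator also has to detect silent failures (a node that vanishes without calling anything) and hand late joiners the current model state. This sketch leaves both out.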

2. Verifiability

How do you know a node did the work correctly and is not malicious?

A core assumption in decentralized training is that nodes are untrusted.

Any computer can join, and some might behave maliciously: submitting fake updates, injecting poisoned data, or simply idling while pretending to contribute. The system needs a way to verify that every participant is doing valid work, without requiring a central authority to watch over them.

The most basic approach is redundant computation. Assign the same task to multiple nodes and compare the outputs. If they match, assume correctness. This works, but it burns unnecessary compute and scales poorly.
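A minimal sketch of that redundancy check, assuming each node reports a hashable digest of its output. The function name and the `quorum` parameter are illustrative, not taken from any of the projects discussed here.

```python
from collections import Counter


def verify_by_redundancy(results, quorum=2):
    """Accept the output reported by at least `quorum` replicas, else None.

    `results` maps node id -> reported output (must be hashable).
    Returns (accepted_output, set_of_dissenting_nodes).
    """
    if not results:
        return None, set()
    counts = Counter(results.values())
    output, votes = counts.most_common(1)[0]
    if votes < quorum:
        return None, set(results)
    dissenters = {node for node, out in results.items() if out != output}
    return output, dissenters


reports = {"node-1": "0xabc", "node-2": "0xabc", "node-3": "0xdef"}
accepted, suspects = verify_by_redundancy(reports)
```

The cost is plain to see: three nodes did the work of one, which is why this approach scales poorly.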

More advanced methods attempt to use lightweight, layered verification schemes.

Prime Intellect’s TOPLOC is one example. It uses locality-sensitive hashing (LSH) to generate a compact fingerprint of each model output. Instead of re-running the full model to check the result, peers can recompute and verify this fingerprint. TOPLOC tracks only the most informative values from the model’s hidden states, making verification up to 100 times faster than a full forward pass.

Gensyn uses a system called Verde for refereed delegation. A verifier checks the output of a node and can challenge it if something looks off. If the two disagree, the system isolates the exact step in training where their results diverge. Only that small step is re-executed by a neutral third party, such as a smart contract or another trusted peer. This minimizes overhead while maintaining integrity.
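The “isolate the exact step” part can be pictured as a binary search over per-step checkpoint hashes. The sketch below shows that general bisection idea only. It is not Verde’s implementation, and `first_divergent_step` is a hypothetical name.

```python
def first_divergent_step(trainer_hashes, verifier_hashes):
    """Binary-search for the first step whose checkpoint hashes differ.

    Both lists hold one hash per training step, computed from the same
    starting state. Assumes that once the runs diverge they stay diverged,
    which holds when each step's state depends on the previous one.
    Returns the step index, or None if the runs agree throughout.
    """
    if len(trainer_hashes) != len(verifier_hashes):
        raise ValueError("both parties must commit to the same number of steps")
    if not trainer_hashes or trainer_hashes[-1] == verifier_hashes[-1]:
        return None
    lo, hi = 0, len(trainer_hashes) - 1  # invariant: hashes differ at hi
    while lo < hi:
        mid = (lo + hi) // 2
        if trainer_hashes[mid] == verifier_hashes[mid]:
            lo = mid + 1   # still in agreement, divergence is later
        else:
            hi = mid       # already diverged, look earlier
    return lo


honest = [f"h{i}" for i in range(8)]
cheater = honest[:5] + [f"x{i}" for i in range(5, 8)]
disputed_step = first_divergent_step(cheater, honest)
```

Only `disputed_step` needs to be re-executed by the referee, and finding it takes a logarithmic number of hash comparisons rather than a replay of the whole run.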

There’s also a hardware-level complication. Training on different devices—say, an A100 versus an H100—can produce slight numerical differences even when running the same code. Many verification systems fail in these cases because they expect identical outputs. Verde solves this with RepOps, a low-level library that forces bit-for-bit consistency across hardware types. It ensures all nodes run the same math and return identical results, regardless of the underlying architecture.
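The root of the problem is that floating-point addition is not associative, so two devices that sum the same numbers in a different order can disagree in the last bits. The snippet below is a generic illustration in plain Python, not RepOps itself.

```python
import struct

a, b, c = 0.1, 0.2, 0.3

left_to_right = (a + b) + c   # one possible reduction order
right_to_left = a + (b + c)   # another order, mathematically identical


def bits(x):
    """Raw IEEE-754 bit pattern of a Python float, as hex."""
    return struct.pack(">d", x).hex()


same_bits = bits(left_to_right) == bits(right_to_left)          # False
within_tolerance = abs(left_to_right - right_to_left) < 1e-12   # True
```

A verifier that compares hashes of outputs sees these two results as different, even though both are “correct”. That leaves two options: tolerate small differences, or force every node to use the same operation order.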

There is early research into proof-of-learning and proof-of-training-data, where nodes publish cryptographic commitments to intermediate weight states or data samples. These could eventually provide lightweight, trust-minimized guarantees that training steps were performed on valid data.

While systems like TOPLOC and Verde provide promising foundational ideas, they currently operate more like proof-of-concept scaffolds than fully hardened security architectures. The core issues are structural:

It’s cheap to submit junk.

It’s expensive to validate, prove, and escalate challenges.

Coordination among defenders is difficult in open networks.

3. Performance over the open internet

How do you maintain speed and reliability outside data centers?

Training large models depends on fast communication and tight coordination. In centralized settings, that’s handled with high-speed interconnects like NVLink and InfiniBand, which can reach up to 900 GB/s and 200 GB/s, respectively. Hardware is co-located, and thermals are controlled. Everything is designed to stay in sync.

None of that applies in decentralized environments.

Nodes are scattered across continents, running on residential Wi-Fi or variable cloud infrastructure. Internet bandwidth may be as low as 50-200 MB/s (1000x slower). Latency is unpredictable. Hardware is inconsistent. Failures are common.
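A back-of-the-envelope calculation shows what that gap means for a single synchronization. It uses the bandwidth figures quoted above. The 10B model size and 16-bit gradients are illustrative assumptions, and latency and all-reduce topology are ignored.

```python
def sync_seconds(num_params, bytes_per_param, bandwidth_bytes_per_s):
    """Time to ship one full copy of the gradients over a single link."""
    return num_params * bytes_per_param / bandwidth_bytes_per_s


GB = 10**9
MB = 10**6
params = 10 * 10**9   # a 10B-parameter model (illustrative)
payload_bytes = 2     # assuming 16-bit gradients

datacenter = sync_seconds(params, payload_bytes, 200 * GB)  # about 0.1 s
home_link = sync_seconds(params, payload_bytes, 200 * MB)   # about 100 s
slowdown = home_link / datacenter                           # about 1000x
```

At roughly 100 seconds per sync, exchanging full gradients every step is a non-starter, which is why the techniques below focus on sending less, less often.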

This creates a fundamental bottleneck. Training is inherently iterative. Gradients must be shared, and timing kept consistent. A single slow or unstable node can stall the process or take the whole run down.

As Alexander Long (Pluralis) puts it:

“Is decentralized model-parallel solved → NO. The communication bandwidth is so much worse compared to a datacenter, that even 90% is not enough. We need to get to around 300x compression to hit parity with centralised training. There remains a huge question if this is even possible - you are destroying so much of the training signal by doing this.”

This is where most of the recent research effort has gone: making communication efficient enough to work over the open internet.

There are a few ways we can get there:

1. Train more before syncing. Instead of syncing updates every step, nodes train locally for 16, 32, or more steps, then sync. That gives them more time to send data, so they don’t need to compress it as much. OpenDiLoCo from Prime Intellect reports a 500x reduction in communication overhead with no impact on model convergence.

2. Send only the important data. You don’t need to send all the training updates. Most updates are close to zero. With algorithms like DiLoCo or DeMo, you just send the top 0.1–1% of values (the ones that matter most).

3. Use mathematical compression tricks. Some systems use transforms (like converting numbers into frequencies) and only send the most important parts. Others use low-rank approximations. These reduce how much you need to send while keeping the results accurate.
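The first idea, training locally and syncing only occasionally, can be sketched on a toy problem. This is plain periodic weight averaging under made-up data, not DiLoCo or OpenDiLoCo themselves. The function name and parameters are hypothetical.

```python
def train(targets, total_steps, sync_every, lr=0.1):
    """Local SGD on a toy problem: worker i minimizes 0.5 * (w - targets[i])**2.

    Workers step independently and average their weights every `sync_every`
    steps. Returns (final_weight, number_of_sync_rounds).
    """
    weights = [0.0] * len(targets)
    syncs = 0
    for step in range(1, total_steps + 1):
        # Local update: the gradient of 0.5 * (w - t)**2 is (w - t).
        weights = [w - lr * (w - t) for w, t in zip(weights, targets)]
        if step % sync_every == 0:
            mean = sum(weights) / len(weights)
            weights = [mean] * len(weights)   # the only communication step
            syncs += 1
    return sum(weights) / len(weights), syncs


targets = [1.0, 2.0, 3.0, 6.0]   # per-worker data, mean is 3.0
w_every_step, syncs_every_step = train(targets, 320, sync_every=1)
w_local, syncs_local = train(targets, 320, sync_every=32)
```

Both runs land on the same answer, but the second communicates 10 times instead of 320. On this linear toy problem infrequent averaging costs nothing. On real networks the local copies drift apart between syncs, and handling that drift is the hard part.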

Let’s illustrate (2) and (3) using DeMo as the example. DeMo is an optimizer:

Each GPU keeps a “momentum”, like a running average of past updates.

Instead of sending the full momentum (which is huge), the GPU runs a Discrete Cosine Transform (DCT) on it. This converts it to the frequency domain.

Then, it picks the top-k most important components.

These few numbers are sent to the other GPUs.

Each peer then uses this small set of numbers to reconstruct an approximation of the original momentum.

In some experiments, this cuts bandwidth usage by up to 10,000 times compared to naïve approaches.
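The transform, select, and reconstruct steps can be sketched with a hand-rolled DCT in plain Python. This is an illustration of the idea under simplifying assumptions, not the DeMo optimizer. The momentum vector is made up to be highly compressible, and details of the real method such as chunking are left out.

```python
import math


def dct(x):
    """Orthonormal DCT-II of a list of floats."""
    n = len(x)
    out = []
    for k in range(n):
        scale = math.sqrt((1 if k == 0 else 2) / n)
        out.append(scale * sum(v * math.cos(math.pi * (i + 0.5) * k / n)
                               for i, v in enumerate(x)))
    return out


def idct(coeffs, n):
    """Inverse of `dct`, given a sparse {index: coefficient} dict."""
    out = []
    for i in range(n):
        out.append(sum(math.sqrt((1 if k == 0 else 2) / n) * c
                       * math.cos(math.pi * (i + 0.5) * k / n)
                       for k, c in coeffs.items()))
    return out


def compress(momentum, k):
    """Keep only the k largest-magnitude frequency components."""
    coeffs = dct(momentum)
    top = sorted(range(len(coeffs)), key=lambda j: abs(coeffs[j]), reverse=True)[:k]
    return {j: coeffs[j] for j in top}


# A smooth, made-up "momentum" vector: its energy sits in two frequencies.
n = 64
momentum = [math.cos(math.pi * (i + 0.5) * 3 / n) + 0.5 for i in range(n)]

payload = compress(momentum, k=2)   # 2 numbers (plus indices) instead of 64
approx = idct(payload, n)           # what a peer reconstructs
max_error = max(abs(a - b) for a, b in zip(momentum, approx))
```

Here two coefficients reproduce all 64 values almost exactly because the input was built that way. Real momentum tensors are noisier, so the choice of k trades bandwidth against how much training signal survives.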

So far, most communication-efficient techniques have focused on data parallelism, where the main challenge is sharing weight gradients.

Model parallelism is much harder. It requires even more aggressive compression because it must also transmit activations (intermediate outputs passed between layers) on every forward and backward pass. These signals are more sensitive to degradation and need to be sent in real-time, which makes the bandwidth challenge even more severe.

Parallelism

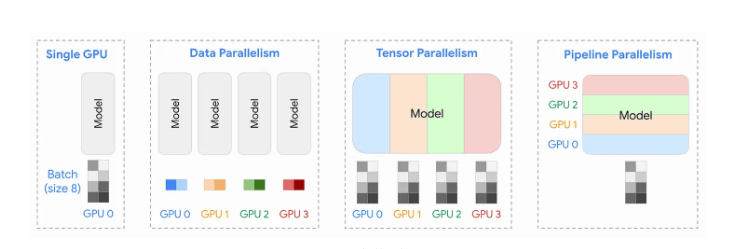

Let’s spend a moment on parallelism, since it’s key to understanding what’s happening under the hood.

Most decentralized training systems use a blend of parallelism strategies, each with trade-offs around memory, communication, and efficiency.

Data Parallelism

This replicates the full model across all nodes, with each node training on a different batch of data. It is memory-intensive and requires enterprise-grade GPUs, since every node must store the entire model. Communication is relatively light because updates are only synced after each training step.
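In code, one synchronous data-parallel step looks roughly like this. It is a toy sketch with a one-parameter model and made-up data. In a real system the averaging line is a network all-reduce across machines.

```python
def local_gradient(w, batch):
    """Gradient of mean squared error for the 1-D model y = w * x."""
    return sum(2 * (w * x - y) * x for x, y in batch) / len(batch)


def data_parallel_step(w, batches, lr=0.05):
    """One synchronous step: every replica holds `w`, each sees its own batch."""
    grads = [local_gradient(w, batch) for batch in batches]  # done in parallel
    avg_grad = sum(grads) / len(grads)                       # the all-reduce
    return w - lr * avg_grad                                 # same update everywhere


# Data generated from y = 3x, split across three replicas.
batches = [[(1.0, 3.0), (2.0, 6.0)], [(3.0, 9.0)], [(0.5, 1.5), (1.5, 4.5)]]
w = 0.0
for _ in range(200):
    w = data_parallel_step(w, batches)
```

Every replica applies the identical averaged update, so all copies of the model stay in lockstep. The price is that every replica must hold the whole model.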

Scalability becomes a challenge as models grow. Training a 100B+ parameter model this way would probably require very large nodes, perhaps 100xH100s. This naturally excludes many individuals from participating.

Data parallelism has another drawback. Each node holds the full model, which makes privacy and model protection difficult.

Model Parallelism

Here, the model is instead split up among the nodes, and no single node holds all the model weights.

Tensor Parallelism vertically splits the computation within a layer across nodes. One matrix multiplication might span several GPUs, which means they need to exchange partial results constantly. This allows for training larger models, but it introduces heavy, latency-sensitive communication demands.

Pipeline Parallelism assigns different layers to different nodes. Inputs pass forward through the model, then gradients move backward. This spreads memory load and reduces communication requirements, but creates idle time as each stage waits its turn in the sequence.
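The idle time can be estimated with the standard “bubble” formula for a simple fill-and-drain pipeline schedule. The sketch assumes equal-cost stages and ignores network latency, both of which flatter a decentralized setup.

```python
from fractions import Fraction


def bubble_fraction(stages, microbatches):
    """Idle share of a simple fill-and-drain pipeline schedule.

    With `stages` sequential stages and `microbatches` equal-cost
    microbatches, each pass takes (microbatches + stages - 1) time slots,
    of which (stages - 1) are spent filling or draining.
    """
    return Fraction(stages - 1, microbatches + stages - 1)


few = bubble_fraction(stages=8, microbatches=4)     # 7/11, about 64% idle
many = bubble_fraction(stages=8, microbatches=64)   # 7/71, about 10% idle
```

More microbatches shrink the bubble, but each one is another activation transfer between nodes, so over slow links the two costs pull against each other.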

Techniques like Gensyn’s SkipPipe allow stages of the model to be skipped temporarily if they’re overloaded or down, helping the pipeline continue without waiting. In tests, SkipPipe made pipeline training 55 percent faster and allowed training to continue at node failure rates of up to 50 percent.

To summarize:

Training at scale depends on tight coordination. Without fast, reliable communication, the process starts to break down.

This is where decentralized networks struggle. They lack the fiber interconnects, custom hardware, and uniform conditions of hyperscale data centers. Instead, they run on a patchwork of consumer GPUs and unstable connections spread across the globe.

Incentive Design

Even if you solve for coordination, verification, and performance, decentralized training won’t work without a reason for people to show up. Compute is expensive. Without strong incentives, most won’t bother.

So far, teams like Prime Intellect and Nous Research have leaned on small, aligned communities. Volunteer contributors either support the mission philosophically or are hoping for future tokens. That’s fine for early experiments, but it doesn’t scale to an open, sustainable network.

Templar takes a different approach. It’s a Bittensor subnet focused on pretraining large language models, and by nature, treats incentive design as a first-class problem.

Miners in Templar train on local data, compress their updates into pseudo-gradients, and submit them to the network. Their goal is to improve the model and earn TAO tokens.

Templar uses DeMo (Decoupled Momentum), an optimizer built for compression. It reduces communication overhead enough to make training feasible across slower, unreliable connections.

The core incentive system is called the Gauntlet. It’s a scoring mechanism run by validators. Each submission is evaluated on:

How much it improves the model (loss reduction)

Whether it’s original (not copied or replayed)

Whether it was submitted on time and in sync with the current model state

Payouts depend on performance, not just pass/fail checks. The system measures contribution by how much an update improves the model, and how that ranks against other miners. It’s competitive by design, and that drives quality.

Fraud prevention is just as critical. Each contributor is assigned unique data. Validators compare performance on that data and on noise. If an update performs well on random inputs but not the assigned task, it likely wasn’t computed honestly. The system can run fast heuristics or slower, deeper checks depending on the risk.
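A toy version of that assigned-versus-random comparison is sketched below. It is not Templar’s actual Gauntlet scoring. The `score_update` function, the one-parameter model, and the data are all hypothetical, and the real system also ranks miners against each other and checks timing.

```python
def mse(w, batch):
    """Mean squared error of the 1-D model y = w * x on a batch."""
    return sum((w * x - y) ** 2 for x, y in batch) / len(batch)


def score_update(w, delta, assigned_batch, random_batch):
    """Reward improvement on the miner's assigned data over improvement on
    unrelated data. A positive score suggests the update really came from
    the assigned batch; zero or negative is a red flag.
    """
    gain_assigned = mse(w, assigned_batch) - mse(w + delta, assigned_batch)
    gain_random = mse(w, random_batch) - mse(w + delta, random_batch)
    return gain_assigned - gain_random


assigned = [(1.0, 3.0), (2.0, 6.0)]     # the miner's unique data (y = 3x)
unrelated = [(1.0, -1.0), (2.0, -2.0)]  # data the miner was not assigned

honest_score = score_update(0.0, +0.5, assigned, unrelated)   # 10.0
copied_score = score_update(0.0, -0.5, assigned, unrelated)   # -10.0
```

An update that was actually computed on the assigned batch helps there more than anywhere else. One that was copied or computed on other data does not, and its score drops accordingly.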

That said, gaming loss is real. Well-resourced adversaries will find weaknesses in optimistic or purely loss-based incentives. Overfitting, gradient-crafting attacks, and other edge cases can sneak past naive checks. Preventing this will likely require a layered approach: better validation, token staking and slashing, and eventually cryptographic tools like ZK proofs.

In May 2025, Templar trained a 1.2 billion parameter model that matched centralized baselines and converged faster during early training, especially between steps 1000 and 4000. It consistently held lower loss, hinting at better sample efficiency, possibly driven by the incentive structure, which rewards miners for meaningful improvements.

If these results hold, Templar could scale up to larger models and open the system to organizations that want to train models collaboratively.

The Decentralized Training Pipeline

To show how it all fits together, here’s what decentralized training looks like at a high level in practice:

A model initiator submits a training request to the network. This could involve uploading a dataset and model architecture, or defining a more complex setup, such as a reinforcement learning loop.

The coordination layer/scheduler splits the job into smaller tasks. These tasks are usually microbatches, rollouts, or parameter blocks optimized for real-world communication limits. The tasks are then assigned to available worker nodes.

As training progresses, validators verify the outputs. This doesn’t mean re-running every step. Instead, most systems use optimistic validation: outputs are accepted unless someone challenges them.

Model parameters are merged continuously across iterations. When training is complete, the finished model is delivered back to the requester or made available for deployment.

Each part of the process is incentivized with token rewards or micropayments. Correctness is enforced through cryptoeconomic mechanisms that penalize bad actors and reward honest work.
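The optimistic-validation and penalty steps can be sketched as a small state machine. This is a toy model under stated assumptions: time is an integer clock, the stake is a flat number, and `OptimisticLedger` and its methods are hypothetical names rather than any protocol’s API.

```python
from dataclasses import dataclass


@dataclass
class Submission:
    worker: str
    claimed_output: int
    submitted_at: int


class OptimisticLedger:
    """Toy optimistic validation: accept after a quiet challenge window."""

    def __init__(self, challenge_window, stake):
        self.challenge_window = challenge_window
        self.default_stake = stake
        self.stakes = {}   # worker -> bonded stake

    def submit(self, worker, claimed_output, now):
        self.stakes.setdefault(worker, self.default_stake)
        return Submission(worker, claimed_output, now)

    def challenge(self, sub, recompute, now):
        """Anyone may re-run the task inside the window; a mismatch slashes."""
        if now - sub.submitted_at > self.challenge_window:
            return False   # too late, the result is already final
        if recompute() != sub.claimed_output:
            self.stakes[sub.worker] = 0
            return True
        return False

    def is_final(self, sub, now):
        expired = now - sub.submitted_at > self.challenge_window
        return expired and self.stakes[sub.worker] > 0


ledger = OptimisticLedger(challenge_window=10, stake=100)


def task():
    return sum(range(5))   # the "work"; evaluates to 10


honest = ledger.submit("alice", 10, now=0)
cheat = ledger.submit("bob", 999, now=0)
slashed = ledger.challenge(cheat, task, now=3)   # bob loses his stake
```

Nothing is re-executed unless someone challenges, which keeps the common case cheap. The security of the scheme rests on at least one honest party bothering to check within the window.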

Still a Long Way from GPT-4

We’re not close to training something like GPT-4 on decentralized infrastructure. It will take more breakthroughs, perhaps years.

Techniques like DeMo and DiLoCo reduce bandwidth needs by shrinking the size of updates. But that shifts the burden to local compute. These optimizers don’t solve for latency, trust, fault recovery, or global consistency. And they haven’t been tested at frontier scale.

To put things in context, models like GPT-4 or Claude 3.7 are thought to be trained with roughly:

10 to 100 trillion+ tokens

10 million+ H100 hours

Dozens of expert models or layers totaling 1–5T parameters

Thousands of synchronized nodes running tensor and pipeline parallelism

Matching that with open, decentralized networks means solving more than bandwidth. You need to overcome coordination ceilings—jittery latency, unreliable hardware, untrusted actors, stale updates. The system must recover from failures and continue moving forward without collapsing.

What’s really needed is a new stack:

Architectures that allow for looser coupling across nodes

Protocols that align incentives and prove work

Compute topologies built around bandwidth as the bottleneck

Economic systems that remain stable even when some participants act adversarially

That said, the baseline has already moved. Models in the 10 to 40 billion parameter range are being trained across distributed networks today.

That alone would have sounded far-fetched just a year or two ago.

Surging Interest in Decentralized Training

Why does decentralized training matter? Because it changes who has the power to build the most capable models.

Right now, training foundational models is confined to a few major labs. OpenAI, Anthropic, Meta, and xAI have the budget, infrastructure, and talent to run massive training pipelines. Everyone else depends on their models. That includes startups, academic researchers, and entire national programs.

Demand for “Open Foundation” Models

Developers and open-source communities are looking for alternatives. Unstoppable models that can’t be throttled by rate limits or shut down by policy changes.

Decentralized training offers a path toward models that no single entity can control. There is no central API, and no single company has a kill switch. Anyone with the right hardware can participate. This also creates space for new kinds of economic models. Systems reward contributors with tokens based on how much they improve the network’s collective performance.

The fundamental idea is that lowering the barriers to model training lets more people take part, and progress comes much faster as a result.

Centralized Compute Is Hitting Physical Limits

Training ever-larger models inside centralized clusters is starting to hit physical constraints. Power, cooling, and land are no longer easy to scale. Supply chains for high-end chips are strained. There’s a ceiling, and we’re getting close to it.

Decentralized protocols are not bound by national borders or a single physical site, so they can in principle support very large, efficient markets for compute and intelligence. They can harness underused compute from all over the world. Even NVIDIA’s Jensen Huang has pointed to asynchronous, distributed training as a key direction for the future.

The Stack Is Maturing

A few years ago, decentralized training was still theoretical. That’s changed.

Nous has successfully trained a 15-billion parameter model across distributed hardware. Prime Intellect trained INTELLECT-2, a 32-billion parameter model spread across several continents. These are live, working systems.

Each successful training run raises the ceiling. It proves what’s possible and makes people believe. This draws in new developers and pulls more capital into the space. The infrastructure is still early, but it’s starting to hold.

Part II: Tokenization = Owning Your Own AI

“we collectively own the model we train.”

Open weights ≠ free production.

The models that are “free” today were trained at significant cost, by well-capitalized labs like Meta, Mistral, or DeepSeek who have strategic incentives to release them.

The pipelines behind them are anything but open. If major labs stop releasing checkpoints—whether due to regulation, safety concerns, or shifting incentives—the open model ecosystem could stall. There would be no fallback, and no independent path forward.

Decentralized training offers a way to build models without relying on corporate backing, and to fund that work (which is highly capital-intensive) through shared incentives.

These trained models can be represented as on-chain tokens. Participants earn a share by contributing compute, supplying data, or verifying results. If the model is later monetized, revenues are distributed to contributors based on their stake.

This changes things:

Contributors provide compute, data, or expertise

They get ownership of the model.

If the model succeeds, they share in the upside

Monetization Approaches

Tokenization introduces new ways to fund and govern AI. A model can become a digital asset with tradable ownership. Developers can license access through tokens, researchers can monetize trained models without giving up control, and global participants can invest in the infrastructure itself.

This sets the stage for programmable markets around AI usage:

Usage- or subscription-based access. A model is deployed on a decentralized inference network. Users pay per request or via a monthly plan. Revenue is automatically split between compute providers and original contributors.

Pay-per-fine-tune. Organizations can customize a base model for specific needs by paying a fine-tuning fee. That payment is shared with those who helped train the original version.

Sponsored public models. Some networks may choose to release models for free, funded through token inflation, grants, or community-led treasuries, similar to how open-source software is maintained today.
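The automatic revenue split in these schemes comes down to pro-rata arithmetic over token holdings. Below is a minimal off-chain sketch. The holder names and share counts are invented, and a real deployment would live in a smart contract with its own rounding rules.

```python
def split_revenue(amount_cents, shares):
    """Split `amount_cents` pro rata by integer token holdings.

    Uses integer math so no cents are created or lost; leftover cents from
    rounding down go to the largest holders first.
    """
    total = sum(shares.values())
    if total <= 0:
        raise ValueError("no outstanding shares")
    payout = {h: amount_cents * s // total for h, s in shares.items()}
    leftover = amount_cents - sum(payout.values())
    for holder in sorted(shares, key=shares.get, reverse=True)[:leftover]:
        payout[holder] += 1
    return payout


holders = {"compute-provider": 50, "data-provider": 30, "validator": 20}
payout = split_revenue(1001, holders)
```

Integer arithmetic matters here: with floats, repeated splits slowly leak or mint fractions of a cent, which is exactly the kind of discrepancy token holders will notice.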

In this framework, owning a model means more than having access to its weights. It means holding a financial and governance stake in how that model is used.

Governance could be handled by a DAO. Token holders would vote on key decisions:

Should the model be fully open or access-controlled?

What rules should govern its use?

How should future training or fine-tuning resources be allocated?

But Aren’t Models Just Commodities?

This comes up often in my personal conversations: if high-quality open models are already free, what’s the point of tokenizing a new one?

Meta, Mistral, DeepSeek, and Qwen continue to release strong checkpoints. Once weights are public, anyone with a GPU can fine-tune or quantize them in a weekend. That makes replication cheap.

So for a model token to hold real value, access alone isn’t enough. The model needs something others can’t easily reproduce. That usually comes from:

Proprietary datasets, such as legal or medical corpora that are not publicly available

Ongoing reinforcement, where the model keeps improving through active community contributions. The live model becomes harder to mirror over time.

Without those moats, models behave like commodities. They can be forked, reskinned, and rehosted. Their market value drifts towards the cost of inference.

This is why tokenization makes the most sense in specialized domains. Models trained on hard-to-source data (pharma, finance, law) are harder to replicate and more defensible. In those cases, the model becomes an actual asset.

But even with a unique model, there’s a deeper problem: keeping the model scarce after release.

If someone can piece together all the weights during training or inference, they can leak or rehost the full model. At that point, the token is just a wrapper. Scarcity is gone.

For token value to hold over time, two things need to be true:

1. No single participant can see the full, usable weights.

2. Inference and updates must stay tied to the network.

Pure data-parallel training violates (1), because every node has a copy of the full model. That’s why Pluralis is working on Protocol Learning, a model-parallel approach that shards weights across nodes. No single actor can reconstruct the entire model, which makes tokenized ownership more viable.

There’s also an economic layer. If the model keeps improving on-network, any leaked snapshot quickly becomes outdated. Only contributors who stay connected benefit from ongoing progress. This dynamic creates a kind of soft moat around the live model.

If these technical moats don’t hold, then the value shifts. A “tokenized model” might still be worth something, but not for the model itself. Its value could come from:

Governance rights over the protocol that trains/improves models.

Access rights to the continuous improvement process for the model.

Speculative value

No one has yet built a fully functioning economic system around a tokenized model. Key questions remain: who captures value, how models are upgraded, and whether rights can be enforced in practice.

But mark our words, the experiments are coming. Probably sooner than we expect.

Our Viewpoints

Smaller Specialized Models Are the Sweet Spot

We believe the more immediate opportunity for decentralized training lies in specialized, domain-specific models.

Training a frontier-scale model still demands hundreds of millions of dollars, massive compute clusters, and tightly optimized infrastructure. As long as decentralized systems remain even slightly less efficient than hyperscaler setups, reproducing these models will likely stay out of reach for now.

Mid-sized models—those in the 5B to 30B parameter range, and potentially 50B to 100B—are a different story. These can be trained on decentralized infrastructure quite soon.

They also come with strategic advantages:

Targeted datasets reduce the need for massive generalization and allow for more focused fine-tuning.

Moderate hardware is sufficient, which aligns with the types of machines available across decentralized networks.

Business models are clearer. These models can serve specific, high-value domains where performance translates directly into utility.

Imagine a decentralized network training a language model specialized in biomedical research. Contributors provide compute/data, receive tokenized ownership, and earn from future usage. That could take the form of API calls, subscriptions, or licensing deals, all without requiring traditional venture capital.

(Side note: if you’re building AI models in healthcare, DM me)

One example of this approach is Bagel, a team building infrastructure for collaborative training of domain-specific, monetizable models. Bagel is not trying to compete with frontier AI labs. Nor is it limited to lightweight fine-tuning. It operates in the middle: training models that are large enough to matter but focused enough to remain feasible.

To do this, Bagel is researching model architectures (beyond transformers) that embed domain-specific reasoning during training. The goal is to teach models not just what to know, but how to think within a specialized context. This is more than adapting outputs. It involves shaping the learning process itself, which opens the door to more reliable and defensible performance.

In short, mid-tier models offer a practical path forward. These models are more accessible to train, easier to deploy, and most importantly, easier to monetize.

There are always tradeoffs

Decentralized training forces a balance. You trade speed and predictability for openness and resilience.

Even if communication becomes more efficient and systems scale, cost remains an open question. These networks carry real overhead: slow synchronization, repeated validation, and unreliable hardware. Centralized clusters are optimized down to the wire. Decentralized setups are still figuring that out.

Security adds friction. Open networks invite attack. The surface is wider, the defenses more complex. You need guardrails against poisoned updates, sybil attacks, and freeloaders. Zero-knowledge proofs, optimistic checks, and staking help, but they introduce complexity. Some slow things down. Others shift costs to incentives or coordination. None are plug-and-play.

To make decentralized training viable, you have to solve for the full stack. That means:

Compressing gradients without killing the signal

Coordinating across unpredictable networks

Building trust mechanisms that work without a central authority

Progress will depend on better verification layers and model architectures that tolerate noise and drift across nodes.

We’ve painted a pretty heavy picture of the challenges ahead, but ultimately, decentralized training tackles problems that centralized systems just can’t:

Ownership, censorship-resistance, global reach, and open participation

The tradeoff is clear. The question is whether it’s worth it.

🚦 Quick Note on AI Safety and Ethics

Decentralized training brings us a dilemma: if anyone can help build powerful models, who makes sure they’re safe?

Some argue that openness makes systems easier to audit. When training runs are public, code is transparent, and checkpoints are on-chain, there is no hiding the process. Anyone can inspect what was done and how. There are no secret weights, no private patches, no closed-door decisions.

Others worry the opposite. Without a central authority to enforce policy or review outputs, critical safety steps like red-teaming or human oversight could disappear. Once powerful models are trained, there may be no one left to stop misuse.

This is the core tension.

Conclusion

Are we on the edge of something transformative with decentralized training, or still chasing a moonshot? Probably both.

We are realists, and well aware it’s not enough to argue from ideology. If decentralized AI is going to matter, it has to prove it can train models that are

cheaper,

faster,

and more adaptable.

Otherwise, it won’t matter in the long run.

The technical gap is still real. Public networks are slower, less predictable, and more fragile. Validation costs time. Trust comes with a price.

But the progress is real. Just a year ago, training multi-billion-parameter models across the public internet looked impossible. Now we’ve seen mid-size AI models trained with competitive results. The baseline keeps moving.

As models grow larger and centralized infrastructure hits its limits, the case for decentralized training gets stronger. It might start small. It might stay niche for a while. But when the cost curve bends, systems that look experimental can become standard faster than expected.

That is what makes this moment worth paying attention to.

Cheers,

Teng Yan

Team Summaries & Latest Progress

A focused group of teams is actively advancing decentralized training, each exploring different combinations of decentralization, compression methods and incentive design. Key players so far include Pluralis, Gensyn, Prime Intellect, Nous Research, Bagel, Templar, and Macrocosmos.

Of these, only Templar and Macrocosmos’ pretraining subnet currently offer liquid exposure through tradable Bittensor subnet tokens. The rest are early-stage ventures, accessible only via private investment.

Pluralis Research

Led by Alexander Long, Pluralis is a small team of ML PhDs focused on decentralized, open-source AI through a method called Protocol Learning.

They focus on low-bandwidth model-parallel training, where weights are sharded across nodes so no one sees the full model. This enables closed-weight models that can be owned and monetized, while keeping the benefits of open-source development.

In June 2025, Pluralis published new research on model parallelism, introducing a compression technique that makes decentralized training more communication-efficient. The method achieves:

Up to 100× communication reduction

Lossless reconstruction of both activations and gradients

No degradation in convergence, even at billion-parameter scale

Model training progress: No models are currently being trained publicly. In an experimental setting, Pluralis trained an 8B Llama model using a pipeline-parallel setup across 64 GPUs spread over 4 geographic regions.

Funding: $7.6M, led by USV and CoinFund

Gensyn

Gensyn aims to turn global idle compute into a massive open AI cluster. Their system supports both data-parallel and pipeline-parallel training. Their architecture is guided by six principles (GHOSTLY), ensuring low overhead, trustlessness, and support for heterogeneous hardware.

Some of Gensyn’s key innovations include:

Verde: efficiently verifies a node’s training work without re-running the entire computation

RL Swarm: a peer-to-peer system where small models train via reinforcement learning and learn from each other. Each participant runs their own copy of the base model. Through interaction, they improve in fewer steps, much like a study group, without heavy coordination overhead.

SkipPipe: faster pipeline training with built-in fault tolerance
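To give a feel for how training work can be checked without redoing all of it, here is a generic sketch of dispute resolution by bisection. It is an illustration of the general idea only, not a description of Verde’s actual protocol. Everything in it is an assumption: the toy `step` function stands in for a real training step, and `run` and `first_divergence` are hypothetical helpers. Two nodes publish a hash of their state after every step, and a referee binary-searches for the first step where the hashes disagree, then only needs to recompute that one step.

```python
import hashlib


def step(state, i):
    """Toy deterministic 'training step' (assumption: stands in for real compute)."""
    return (state * 31 + i) % 1_000_003


def run(steps, cheat_at=None):
    """Return the hash of the state after every step; optionally corrupt one step."""
    state, trace = 7, []
    for i in range(steps):
        state = step(state, i)
        if i == cheat_at:
            state += 1  # injected fault that propagates to all later steps
        trace.append(hashlib.sha256(str(state).encode()).hexdigest())
    return trace


def first_divergence(a, b):
    """Binary search for the first index where two traces disagree.

    Assumes the traces agree up to some step and disagree from then on,
    and that they do disagree at the final step.
    """
    lo, hi = 0, len(a) - 1
    while lo < hi:
        mid = (lo + hi) // 2
        if a[mid] == b[mid]:
            lo = mid + 1  # still in agreement here, the fault is later
        else:
            hi = mid      # already diverged, the fault is here or earlier
    return lo


honest = run(1000)
cheater = run(1000, cheat_at=613)
disputed_step = first_divergence(honest, cheater)
```

Locating the disputed step takes about 10 hash comparisons for 1,000 steps instead of 1,000 recomputed steps. The cost of that saving is that training has to be reproducible enough for two honest nodes to produce identical hashes.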

Model training progress: Gensyn’s RL Swarm is currently in its testnet phase, running permissionless post-training of models ranging from 0.5B to 72B parameters with reinforcement learning, coordinated on a custom Ethereum rollup.

Funding: $43M Series A, led by a16z crypto

Prime Intellect

Prime Intellect is a full-stack decentralized AI platform led by Vincent Weisser and Johannes Hagemann, with $20M+ in funding from Founders Fund and Andrej Karpathy. It is building on Ethereum (Base).

Key components include a Compute Exchange, PRIME and PRIME-RL (training frameworks), GENESYS (synthetic data), TOPLOC (verifiable compute), and PCCL (helps computers communicate even in messy environments where machines drop in and out).

Model training progress: INTELLECT-2, a 32B parameter model, was recently fine-tuned (not trained from scratch) on top of QwQ-32B, a Qwen model, using globally distributed reinforcement learning. The fine-tuning dataset, INTELLECT-2-RL, focused on math and coding tasks. The result showed clear gains over the base model, especially in those domains.

Funding: $20M total, including Founders Fund and Menlo Ventures

Nous Research

Nous is a community-rooted AI R&D collective focused on making distributed training viable on consumer hardware. Their training architecture includes DisTrO and DeMo optimizers, reducing communication overhead by up to 10,000x. In 2024, they trained a 15B-parameter model using this approach.

Model training progress: Nous is building Psyche, a decentralized training network on Solana. The first run commenced in mid-May 2025: pretraining a new 40B parameter base model on 22T tokens, using a dense version of DeepSeek’s model architecture. You can watch its progress here.

Funding: $70M total, led by Paradigm and including Delphi Digital, Distributed Global, North Island Ventures

Templar (Subnet 3)

Templar is a Bittensor subnet focused on pretraining large language models. Its core idea is simple: prioritize incentive design over architecture. The system runs on a decentralized network of independent nodes, split into miners and validators.

Miners train locally, compress their updates into pseudo-gradients, and submit them to the network. Validators score each submission through the Gauntlet, a mechanism that rewards updates based on actual model improvement. Since rewards are tied to performance, miners are free to experiment and optimize their own training methods.
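The idea of paying for actual model improvement can be sketched in a few lines. This is a toy illustration under stated assumptions, not Templar’s Gauntlet: the model is a single weight `w`, the validator’s held-out data and the miner names are invented, and `score_update` and `rewards` are hypothetical helpers. The validator measures loss before and after applying each miner’s update and shares rewards in proportion to the positive improvements.

```python
def mse(w, data):
    """Mean squared error of the one-parameter model y = w * x."""
    return sum((w * x - y) ** 2 for x, y in data) / len(data)


def score_update(w, update, data):
    """Loss before minus loss after applying the update (higher is better)."""
    return mse(w, data) - mse(w - update, data)


def rewards(scores):
    """Share rewards in proportion to positive scores only."""
    positive = {m: max(s, 0.0) for m, s in scores.items()}
    total = sum(positive.values())
    return {m: (p / total if total else 0.0) for m, p in positive.items()}


held_out = [(1.0, 2.0), (2.0, 4.0), (3.0, 6.0)]  # validator's samples of y = 2x
w = 0.0                                          # current shared weight
updates = {"honest": -1.0, "idle": 0.0, "junk": 1.0}

scores = {m: score_update(w, u, held_out) for m, u in updates.items()}
payout = rewards(scores)
```

Here the honest miner moves `w` toward 2 and lowers the loss, the idle miner changes nothing, and the junk update makes the loss worse, so the whole payout goes to the honest miner. The validator never needs to know how a miner produced its update, which is why miners are free to experiment.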

Model training progress: In May 2025, Templar completed a 1.2B-parameter fresh base model trained on the FineWebEdu corpus, a 2T token dataset positioned as a cleaner alternative to the Common Crawl. The model outperforms others at a similar scale, although it still lags behind much larger models in overall capability. Full paper here.

It is now training an 8B parameter model.

Funding: No VC funding, funded on Bittensor

Macrocosmos’ Pretraining (Subnet 9)

Just last week, Macrocosmos announced IOTA, short for Incentivized Orchestrated Training Architecture. It’s their decentralized training system for scaling large models across a distributed network of miners.

IOTA is an incentivized pipeline-parallelism setup. Instead of each node holding the full model, miners are assigned one or more sequential layers. This sidesteps the usual memory bottlenecks of data parallelism. Model size scales with the number of participants, not the VRAM per node. It also makes training possible on hobbyist-grade GPUs. If a node goes down, SWARM routing steps in and dynamically reroutes around it.
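A rough sketch of the two mechanics described above, layer assignment and rerouting around a dead node, might look like this. It is a simplified illustration, not IOTA’s or SWARM’s actual code: `assign_layers` and `route` are hypothetical helpers, the miner IDs are invented, and real routing would weigh latency and throughput rather than take the first live peer.

```python
def assign_layers(num_layers, miners):
    """Split the model into contiguous stages, one stage per miner."""
    base, extra = divmod(num_layers, len(miners))
    assignment, start = {}, 0
    for i, miner in enumerate(miners):
        size = base + (1 if i < extra else 0)  # spread any remainder over the first miners
        assignment[miner] = list(range(start, start + size))
        start += size
    return assignment


def route(stage_peers, alive):
    """Pick one live miner per stage; fail only if a whole stage is offline."""
    path = []
    for stage, peers in enumerate(stage_peers):
        live = [p for p in peers if p in alive]
        if not live:
            raise RuntimeError(f"no live miner for stage {stage}")
        path.append(live[0])
    return path


# A 10-layer model across 3 miners: each holds 3 or 4 layers, never the full model.
assignment = assign_layers(10, ["m1", "m2", "m3"])

# Two candidate miners per stage; m1 has dropped out, so stage 0 falls back to m2.
path = route([["m1", "m2"], ["m3", "m4"]], alive={"m2", "m3", "m4"})
```

Adding miners shrinks the slice each one holds, which is why memory per node stops being the ceiling on model size. Keeping more than one miner per stage is what makes rerouting possible.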

A few novel pieces stood out:

A custom bottleneck transformer block, adapted from LLaMA 3, compresses activations and gradients by up to 128×. It uses partial residuals to keep gradients flowing, even under heavy compression.

Synchronization runs through a modified Butterfly All-Reduce. Communication per miner is O(1) instead of O(N): each miner sends and receives a fixed amount of data, regardless of how many miners are in the network. The scheme also lets miners cross-check each other’s shards.

Incentives are handled by CLASP, a Shapley-inspired scoring system that looks at how much each miner’s output actually changes the loss. If a miner is free-riding or submitting junk, CLASP surfaces it.
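For readers unfamiliar with Shapley values, here is the textbook version on a toy example. This is not CLASP itself, which is only described as Shapley-inspired. The miner names and the `loss_reduction` table are invented, and exact Shapley computation like this only works for a handful of players because it enumerates every ordering. Each miner’s score is its average marginal contribution to loss reduction across all orders in which miners could have joined.

```python
from itertools import permutations


def shapley(players, value):
    """Exact Shapley values: average marginal contribution over all join orders."""
    totals = {p: 0.0 for p in players}
    orders = list(permutations(players))
    for order in orders:
        coalition = frozenset()
        for p in order:
            joined = coalition | {p}
            totals[p] += value(joined) - value(coalition)
            coalition = joined
    return {p: t / len(orders) for p, t in totals.items()}


def loss_reduction(coalition):
    """Toy value function (assumption): a and b do partly redundant work,
    and the free rider contributes nothing."""
    workers = len(coalition & {"a", "b"})
    return {0: 0.0, 1: 4.0, 2: 6.0}[workers]


scores = shapley(["a", "b", "free_rider"], loss_reduction)
```

The two working miners split the total loss reduction of 6.0 evenly at 3.0 each, and the free rider scores exactly zero because adding it never changes the loss. That is the property an incentive layer needs: credit follows measured contribution, not mere participation.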

Model training progress: Macrocosmos is currently training a 15B model using IOTA. The run started on 2 Jun 2025, and there are no production results yet.

Funding: No VC funding, funded on Bittensor

This essay is intended solely for educational purposes and does not constitute financial advice. It is not an endorsement to buy or sell assets or make financial decisions. Always conduct your own research and exercise caution when making investments.