We’re sharing the mental frameworks that guide how we think about AI and crypto. It’s a messy space, and we’ve found these models useful for cutting through the noise. Hopefully, they give you a clearer lens too.

If this sparks something, share it on X or forward this on to a friend.

We’re early.

Not just in terms of market cap or developer adoption, but in how Crypto and AI actually understand and relate to each other.

Crypto spent the last decade building trustless systems that don’t rely on central coordination. AI, meanwhile, is absorbing data, learning patterns, and increasingly making decisions that used to belong to people. On their own, each is disruptive.

Together, their collision introduces second-order effects: emergent behaviors, new coordination models, and also a fair amount of chaos.

New categories emerge. Old assumptions break.

To stay oriented, we’ve been using a few simple mental models. Not to predict the future, but to track what’s working, what’s noise, and where the strongest signals are emerging.

We wanted to share them with you, in case they help you do the same.

Framework #1: Crypto ←→ AI

At the intersection of AI and crypto, two primary forces emerge:

AI makes crypto easier to use: Intelligent agents and ChatGPT-style interfaces remove friction from on-chain interactions. Users no longer need to understand wallets, seed phrases, or on-chain tooling to participate.

Crypto strengthens AI’s integrity: It anchors decisions in transparent systems. Verifiable data, public infrastructure, and open coordination mechanisms create boundaries for otherwise opaque models.

Most startups tilt toward solving a problem in one category.

AI Improving Crypto

Crypto has always had a UX problem. AI is now actively solving it. We’re seeing early momentum in three areas:

Trading agents

The volatility and fragmented nature of crypto markets make them fertile ground for AI-driven strategies. Agents can ingest real-time data, adapt to changing conditions, and uncover patterns at a scale that humans can’t match.

Security and Threat Detection

Agents can monitor blockchain activity in real time, flagging anomalies like phishing attacks and smart contract exploits. This adds a real-time defensive layer that evolves as new threats emerge.

Developer co-pilots

Smart contract tooling is getting faster and more reliable. Language models can now write, audit, and optimize Solidity code. The effect is compounding: lower bug rates, faster deployments, higher developer velocity.

| Archetype | Primary value delivered | Revenue model |
|---|---|---|
| Trading agents | Capture price inefficiencies | PnL on own capital; fees in managed setups |
| Security monitors | Reduce loss events and downtime for protocols | Subscription or usage-based API pricing |
| Developer co-pilots | Improve smart contract quality and velocity | SaaS licenses or token-based pricing |

On the user side, AI assistants like Wayfinder, Giza, Fungi, and Orbit help users swap tokens, find optimal yields, or automate on-chain decisions. These tools lower the barrier to entry and make crypto usable for broader audiences.

The pattern is familiar. Complexity gets abstracted away. Power users benefit first. Then the rest of the market follows.

Zoom out far enough and we’ll start to see autonomous agents interacting with smart contracts. Value flowing machine-to-machine. Markets clearing without human input.

The direction is clear. AI is rapidly becoming foundational to crypto’s next phase.

Crypto Improving AI

AI is accelerating quickly. However, as models become more powerful and increasingly autonomous, core questions that once felt theoretical are coming into focus.

Who owns the data? Can we trust the outputs? What happens when no one’s in the loop?

Crypto offers a set of primitives built to answer those questions:

AI verification and model provenance

One of the biggest open problems in AI is proving that a model gave the right output for the right reason. This becomes even harder in systems where there is no central operator to enforce trust.

Crypto-native approaches are starting to fill that gap. Zero-knowledge circuits can verify that a model ran on specific inputs without revealing the data. Attestation systems compare outputs across multiple nodes to confirm integrity.
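To make the attestation idea concrete, here is a minimal sketch of comparing outputs across nodes. It is an illustration only, not how any specific protocol works: the node names, the two-thirds quorum, and the `attest` function are all hypothetical, and it assumes inference is deterministic so that honest nodes produce byte-identical outputs. It also does not cover the zero-knowledge approach, which requires proving systems well beyond a short example.

```python
import hashlib
from collections import Counter


def output_digest(output: str) -> str:
    """Hash a model output so nodes can compare results by digest."""
    return hashlib.sha256(output.encode("utf-8")).hexdigest()


def attest(node_outputs: dict[str, str], quorum: float = 2 / 3) -> tuple[bool, list[str]]:
    """Return (accepted, dissenting_nodes) for one inference job.

    `accepted` is True when at least `quorum` of the nodes report the
    same digest. The quorum value is an assumption for illustration.
    """
    if not node_outputs:
        raise ValueError("need at least one node output")
    digests = {node: output_digest(out) for node, out in node_outputs.items()}
    # Find the most common digest and how many nodes reported it.
    winner, votes = Counter(digests.values()).most_common(1)[0]
    accepted = votes / len(digests) >= quorum
    dissenting = sorted(node for node, d in digests.items() if d != winner)
    return accepted, dissenting


# Hypothetical job: two nodes agree, one disagrees.
result = attest({"node-a": "42", "node-b": "42", "node-c": "41"})
print(result)
```

In this toy run the job is accepted and `node-c` is flagged as the dissenter. A real network would also need to handle non-deterministic outputs and decide what penalty, if any, a dissenting node faces.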

Privacy-Preserving AI

Protocols like Nillion and Atoma enable computation on encrypted data, keeping sensitive data protected during training and inference. This allows models to run on private data without ever exposing it.

Decentralized AI Networks and Marketplaces

Instead of relying on centralized labs to build and control models, new protocols coordinate training across networks of independent contributors. Data providers, compute operators, and model trainers are all compensated on-chain. Ownership and control become shared.

This is more than a design choice. As models grow and training costs spike, tapping into idle GPUs from small data centers or individual contributors becomes a practical requirement.

The Bigger Bet: Crypto as AI Infrastructure

🧠 Our Core Idea

We believe the larger and more durable opportunity is where crypto becomes essential infrastructure for AI itself.

With the AI market projected to reach $1.8 trillion by 2030, even a 5% share would represent a $90 billion opportunity. That’s enough room for entirely new product categories—verifiable inference networks, decentralized model registries, tokenized data exchanges.
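The sizing here is simple arithmetic, and it is sensitive to the share you assume. A minimal sketch, taking the $1.8 trillion projection as given and treating the share percentages as illustrative assumptions rather than forecasts:

```python
AI_MARKET_2030_USD = 1.8e12  # projection quoted in the text


def share_value(share_pct: float, market_usd: float = AI_MARKET_2030_USD) -> float:
    """Dollar value of a given percentage share of the projected market."""
    return market_usd * share_pct / 100


# Illustrative share assumptions; none of these is a prediction.
for pct in (1, 3, 5):
    print(f"{pct}% share -> ${share_value(pct) / 1e9:,.0f}B")
```

At these inputs, a 1% share is about $18 billion, 3% is about $54 billion, and 5% is about $90 billion.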

Investors are starting to lean in. By mid-2024, crypto AI startups had attracted serious capital. One eye-popping example: Sentient alone raised $85 million in a seed round led by Founders Fund & Pantera, underscoring the growing belief that blockchain can help address some of AI’s hardest infrastructure challenges.

As AI systems grow more powerful and autonomous, questions around trust, provenance, and control are becoming unavoidable. Blockchain offers a foundation built for exactly that.

Even Binance’s CZ agrees with us. So... we’re probably on to something.

Framework #2: Tokens are the Superpower

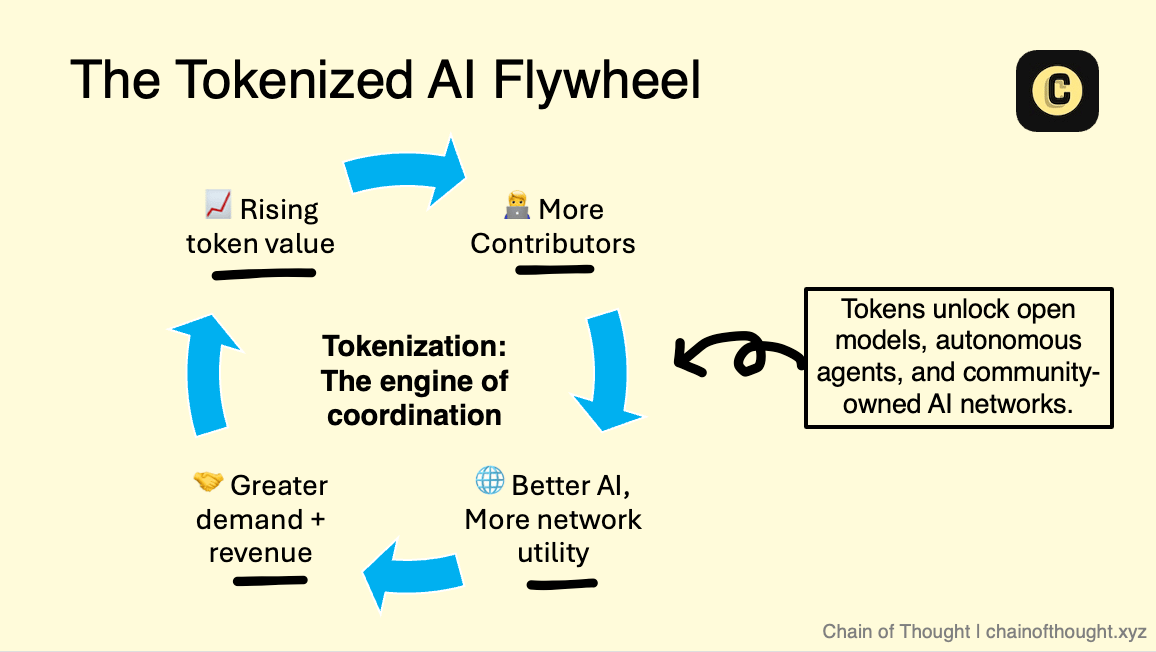

When token design is done well, the flywheel spins fast:

It bootstraps adoption by rewarding early contributors (data and compute providers, model builders) with tokens that grow in value as the network scales.

It aligns incentives. Users, developers, and investors all benefit from network expansion. No single party extracts at the expense of the others.

It sustains long-term participation by embedding governance directly into the protocol. Contributors steer the future.

Why Tokenization Matters for AI

🧠 Our Core Idea

Teams building in Crypto AI should lean heavily into tokens as a core innovation, and use them as a native engine for growth and distribution of their products.

Token design sits at the heart of any credible Crypto AI product. A well-structured token can fund development and coordinate open participation in ways that no traditional model can match.

Think about decentralized GPU networks pulling idle compute from around the world. Training large models becomes cheaper and more accessible. These networks can issue tokens that grant access to compute and bootstrap participation from day one.

Or take OpenLedger as an example. Data providers contribute datasets. Users train the models. Developers pay to deploy them in apps. Instead of value being captured by a single platform, it’s shared across the network. Contributors benefit directly from the system they help build.

As participation grows, so does the network’s value. Every new contributor adds strength, scale, and resilience.

Get it wrong, though, and the outcome is very different. Poorly designed tokens open the door to regulatory risk and attract short-term speculation that extracts value instead of creating it.

New Business Models

The real opportunity lies in new business models that weren’t feasible before.

With tokenization: Data, compute, and human contributions become on-chain assets.

→ Rapid Capital Formation

Tokenized AI projects can raise capital directly from their communities. They don’t need to depend on venture capital. This allows them to build and launch in edge areas that VCs cannot fund.

→ Machine-to-Machine Economies

Tokens allow AI agents to transact without human input. Inference, storage, and data access can all be priced and settled automatically. This unlocks microtransactions at scale between machines.
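As a rough sketch of what priced and automatically settled services could look like, the toy ledger below lets one agent pay another per unit of service. Everything here is hypothetical: the `Ledger` class, the agent names, and the price table are made up for illustration, and a real system would settle on-chain rather than in an in-memory dictionary.

```python
from dataclasses import dataclass, field


@dataclass
class Ledger:
    """In-memory stand-in for on-chain balances, in smallest token units."""
    balances: dict[str, int] = field(default_factory=dict)

    def transfer(self, payer: str, payee: str, amount: int) -> None:
        if amount <= 0:
            raise ValueError("amount must be positive")
        if self.balances.get(payer, 0) < amount:
            raise ValueError("insufficient balance")
        self.balances[payer] -= amount
        self.balances[payee] = self.balances.get(payee, 0) + amount


# Hypothetical per-unit prices for the three services named in the text.
PRICES = {"inference": 5, "storage": 1, "data_access": 2}


def pay_for_service(ledger: Ledger, buyer: str, seller: str, service: str, units: int) -> int:
    """Price a request and settle it immediately, with no human approval step."""
    cost = PRICES[service] * units
    ledger.transfer(buyer, seller, cost)
    return cost


ledger = Ledger({"agent-a": 100, "agent-b": 0})
pay_for_service(ledger, "agent-a", "agent-b", "inference", 3)
pay_for_service(ledger, "agent-a", "agent-b", "data_access", 10)
print(ledger.balances)
```

After the two calls, `agent-a` has paid 35 units in total and holds 65. Using integer units avoids rounding drift, which matters when payments are very small and very frequent.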

→ Tokenized AI Models and Intellectual Property

As open-source AI matures, tokenization gives communities a way to fund, govern, and evolve models together. Contributions are tracked. Updates are voted on. Value is shared by those who make the system better.

This is a structural shift. Traditional AI runs on closed endpoints and corporate APIs. Tokenized AI runs on open infrastructure, shaped by the people who use it.

Systems that distribute ownership from the start are harder to kill, easier to improve, and better aligned with the people they serve.

Framework #3: The Utility–Attention–Trust Triangle

Every Crypto AI network lives or dies by three core forces: utility, attention, and trust. You can think of them as the sides of a triangle.

They are interdependent. Remove one, and the system loses coherence. Keep all three in sync, and momentum compounds.

Utility is the Foundation

Without utility, everything else is temporary. It means the product is solving a real problem and delivering value that stands on its own.

In practice, this can look like:

Paid API calls or inference jobs

GPU hours rented on a decentralized compute network

Queries handled in a data marketplace

Real integrations into working apps

You know utility is working when usage continues without rewards and/or when revenue grows steadily.

Attention is the Accelerator

When something works, it gets noticed. Attention brings new users, developers, and capital.

Healthy attention shows up in:

Rising search interest and organic mentions

Active developer engagement

Exchange liquidity driven by actual demand

Credible voices discussing the project for what it’s doing, not just where the price is going

But attention without substance is dangerous. We all know of projects that spike on social media before they’ve shipped anything meaningful. Token prices pump for a while, and then it all eventually collapses.

The inverse is a quieter failure. When the product is working, but no one hears about it, adoption stalls. That’s especially dangerous in tokenized systems, where attention is part of the mechanism that drives demand, liquidity, and feedback.

A product that can’t tell its story risks never compounding at all.

Trust is the Glue

Trust holds the system together. It turns first-time users into long-term contributors. It gives developers a reason to build, node operators a reason to stay online, and capital a reason to stick around when markets cool off.

In Crypto AI, trust carries extra weight because the systems are open and there are financial stakes involved. Without trust, nothing scales.

This tends to fall into three categories:

Technical trust: open-source code, clear documentation, public audits

Economic trust: sustainable token design, visible treasury, transparent team funding

Social trust: a team that communicates clearly and ships consistently

Even with strong utility and attention, a trust gap slows everything down. Communities get anxious. Developers leave. The feedback loop weakens.

But when trust holds, it reinforces the rest. Utility creates value. That value draws attention. Attention brings scrutiny. Scrutiny demands transparency. Transparency builds trust. And trust brings more contributors who improve the product.

This loop is what makes networks compound.

When the triangle slips out of balance, it will be obvious to anyone watching it.

🧠 Our Core Idea

The most resilient Crypto AI projects earn their place by keeping the triangle in balance over time.

If utility is weak, improve the product. If attention is fading, share real progress and tell great stories about why your product is awesome. If trust is in question, clarify governance and show transparency. Find the weak side and reinforce it.

Framework #4: The Best Opportunity No One’s Paying Attention To

The biggest shifts in tech rarely come from isolated breakthroughs.

They start when two forces converge, each compounding the other’s impact.

Crypto AI sits at one of those intersections. On one side, infrastructure for trustless coordination. On the other, increasingly autonomous systems that need structure, access, and governance.

Together, they unlock new coordination models, new distribution paths, and entirely new product surfaces.

But convergence alone doesn’t create lasting value. Scale is what turns a narrow wedge into lasting infrastructure.

Amazon understood this. What began as an “online bookstore” became something else entirely when it leaned into infrastructure and built AWS. Now AWS is Amazon’s most profitable segment and accounts for the majority of its operating income.

Crypto AI is at a similar moment. The core primitives exist. The market is wide open. But there’s still not much weight behind it. A handful of builders. Some early protocols.

So far, most of the capital has come from crypto-native VCs, liquid funds, and retail participants. Web2 investors have largely stayed out.

That is beginning to shift:

Union Square Ventures backed Pluralis Research in March 2025 ($7.6M seed round)

Founders Fund and Menlo Ventures entered the space via Prime Intellect ($15M round)

As general AI markets grow more crowded, top-tier VCs are beginning to look for edge. Crypto AI is becoming a natural frontier.

Crypto AI startups raised $213M in Q3 2024. That’s almost double year-over-year. Still, it’s just a fraction of the $100 billion that flowed into general AI during the same window.

The gap is real. And it won’t stay open for long.

Timing the Crypto AI Wave

Most new technologies follow a familiar pattern: breakout innovation sparks excitement, hype surges, expectations overshoot reality, and eventually, after a reset, real-world adoption emerges. The Gartner Hype Cycle remains a popular way to understand this curve.

The best entry points for founders and investors tend to fall in two places:

At the innovation trigger, before mainstream hype sets in

Or during the trough of disillusionment, when projects are quietly building beneath the noise

So, taking reference from Gartner, where are we in this cycle for Crypto AI?

Some believe the cycle already peaked. We don’t.

Back in 2024, we argued that Crypto AI hadn’t entered a true mania phase.

That still holds. The spike between November 2024 and January 2025, driven by AI agents and memecoin-style trading, was just one of many mini-cycles that will play out across the sector.

Each Crypto AI subsector will have its own arc of hype, disillusionment, and rediscovery, on its own timeline:

Decentralized training of AI models

Robotics

AI-native L1s

Verification infrastructure

Tokenized data networks

Some are in early testnet stages. Others haven’t launched tokens yet. What they share is unfinished infrastructure and undervalued surface area.

This lag is important. Skepticism remains high, even among experienced investors. Most outside of crypto (and even many within) struggle to explain what Bittensor actually does, let alone why it matters. That disconnect is the signal. It shows how early this still is.

The real inflection point will come when crypto-native primitives become standard tools in the broader AI stack. That moment is likely still one to two years away. Your goal should be to position yourself ahead of this.

🧠 Our Core Idea

The market is still underpricing the scale and inevitability of what’s coming.

Investors who stay close to the frontier and track developments as they unfold will be best positioned to capture the upside when it happens.

Why There’s Still Massive Upside

Let’s ground it in numbers. According to CoinGecko:

Crypto AI tokens ($32B) represent just 2.2% of the total altcoin market cap ($1.4T).

During the AI agent mania, the total market cap for AI agents only hit $17B, and it sits at less than half of that today.

For comparison, Dogecoin peaked at $66B and still trades above a $34B market cap today.
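These ratios are easy to recompute yourself. The sketch below uses only the rounded figures quoted above as inputs; the variable names are ours.

```python
# Rounded figures as quoted in the text (USD).
AI_TOKENS_USD = 32e9
ALTCOINS_USD = 1.4e12
AGENT_PEAK_USD = 17e9
DOGE_PEAK_USD = 66e9

share_pct = AI_TOKENS_USD / ALTCOINS_USD * 100
half_agent_peak_b = AGENT_PEAK_USD / 2 / 1e9
doge_multiple = DOGE_PEAK_USD / AGENT_PEAK_USD

print(f"Crypto AI share of altcoin market cap: {share_pct:.1f}%")
print(f"Half of the AI agent peak: ${half_agent_peak_b:.1f}B")
print(f"Dogecoin peak vs AI agent peak: {doge_multiple:.1f}x")
```

With these rounded inputs the share comes out near 2.3%, so the 2.2% quoted presumably reflects unrounded source data. Half of the agent peak is $8.5B, and Dogecoin’s peak was roughly 3.9 times the AI agent peak.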

Even at its high point, Crypto AI barely moved the needle. That’s the opportunity.

If a meme coin with no underlying utility can command tens of billions in value, what happens when an entire category built on working infrastructure, active teams, and useful applications starts to scale?

Worth thinking about.

Conclusion

Crypto AI is not just a narrative. It’s a structural shift.

It’s early. It’s messy. That’s what makes it interesting.

The frameworks we’ve presented here—AI<>Crypto flow, token design, the utility-attention-trust triangle, timing—are not your answers, but your tools. Use them to cut through noise, spot where real traction is building, and position yourself.

This convergence is moving faster than most people realize. Stay close to it.

Cheers,

Teng Yan

Share your take or a quick summary on X. Tag @cot_research (or me) and we’ll repost it.

P.S. Liked this? Hit Subscribe to get the next “Big Idea for 2025” before anyone else.

This essay is intended solely for educational purposes and does not constitute financial advice. It is not an endorsement to buy or sell assets or make financial decisions. Always conduct your own research and exercise caution when making investments.