Jan Liphardt wasn’t trying to make a scene.

It was a sunny afternoon in Palo Alto. He took his kids for a walk. Standard dad stuff.

Except they were joined by a humanoid robot and three robotic dogs.

People stopped. Phones came out. A neighbor asked if he was nervous walking around with machines that looked like sci-fi villains. Liphardt just smiled. “They download their rules from Ethereum every morning,” he said. “You can audit them yourself.”

Soon™

I love this moment, because it captures something uncanny: the ordinariness of something that we’ve always thought of as “Sci-Fi”.

Jan, a Stanford professor, is building the coordination layer that could make it all work. He’s the founder of OpenMind, an open-source operating system for robots, designed to do for machines what Android did for phones. A common interface, a shared protocol, and a way to make distributed intelligence trustworthy at scale.

When I spoke with Jan, he impressed on me that the tech is moving much more quickly than most people know, and that it is the business models that are lagging behind. The robots are a preview of where we’re heading: not just as viral YouTube clips, but as everyday presences in our homes, schools, and streets.

The Future Isn’t Far Off

That Palo Alto sidewalk scene may feel like an outlier, but look at San Francisco.

Self-driving Waymos have quietly captured a quarter of the city’s rideshare market in just twenty months.

Think about that. In less than two years, fully autonomous vehicles went from 0% → 27% of rides in one of the busiest cities in the world. It’s no longer a beta test. That’s a commercial product at scale.

What Waymo proves is simple: autonomous machines are already competing with, and in some cases, out-competing, their human counterparts.

In our earlier robotics essay, The Machine Economy Thesis, we wrote:

Humanoid robots feel a lot like electric vehicles did in 2013. Expensive, limited in capability, and still niche. The same cycle is likely to play out. Costs will fall. Performance will improve faster than expected. And at some point, a product will arrive that captures public imagination: a “ChatGPT moment” that triggers a rapid shift in adoption.

But there’s a catch. Waymos only coordinate with other Waymos. Put one next to a delivery robot or a humanoid assistant, and the systems can’t talk.

The Isolation Problem

Every robot today is a loner. Heavy on the introvert scale (just like me).

You can spend $100,000 on a Boston Dynamics Spot, and it still won’t know how to open a door for a Figure humanoid. A Waymo can navigate downtown San Francisco, but it can’t sync with the delivery bot waiting at your curb. Each machine runs its own operating system, speaks its own dialect, and follows its own rules.

You may think this is a hardware problem. Nope. It’s a protocol problem.

It’s the same bottleneck the internet hit in the 1970s. Computers were powerful, but trapped in private networks. Until TCP/IP stitched them together, you couldn’t send an email or load a webpage across systems.

Robotics is stuck in that same pre-TCP/IP moment. The coordination failure shows up in three places:

No Common Language: Buy ten robots from ten vendors, and none will talk to each other. It’s like trying to run a company where every employee speaks a different language and refuses to learn yours.

No Shared Learning: Robots make the same mistakes over and over. A vacuum might figure out how to avoid a tricky corner, but that insight never reaches the humanoid assistant in the same house.

No Verified Identity: In open spaces (hospitals, factories, schools), you have no idea what a machine is, who made it, or whether it’s following the rules. That’s a security nightmare.

We call this the isolation problem. Unless these coordination gaps are solved before robots hit critical mass, chaos is inevitable. Machines will stumble over each other in shared spaces and duplicate work.

AI Agents Have the Same Problem

If this sounds familiar, it should. We’ve seen the same story play out in software.

When AI agents first appeared, they were impressive as solo acts. Trading tokens, running campaigns, managing wallets. But the real unlock came when they started working in teams. Suddenly, you had swarms of agents self-assembling into roles: one scouting data, another executing trades, another managing treasury. It started looking less like a chatbot and more like a startup forming on-chain.

We’re still in the early days of agent swarms, but the lesson is obvious: autonomy without coordination doesn’t scale.

Agents need shared protocols for identity, messaging, memory, and payments. Otherwise, the system collapses into noise. That’s why so much of the AI agent stack today is focused on coordination infrastructure.

I realize that for AI agents to be truly useful, they have to:

Recognize each other (identity)

Learn from each other (shared memory and messaging)

Pay each other and other entities (on-chain wallets, autonomous transactions)

And that’s exactly what startups and tech companies have been building.

Robots are the physical extension of that same story arc.

They’re embodied agents. The difference is that failure here is not just a bad trade. It means a car accident, a delivery bot blocking a fire exit, or a humanoid wandering the wrong hospital ward. So if digital agents need a coordination layer, physical agents need it even more.

So that’s the layer OpenMind is building.

The Missing Layer in Robotics

Many robotics software startups I’ve looked at (BitRobot, PrismaX, Reborn, etc.) are tackling the data bottleneck in robotics. A huge problem and opportunity. What first struck me about OpenMind was its desire to take a very different path.

Instead of just chasing better data, OpenMind is going after the isolation and trust problem by creating a common software layer for intelligent machines.

It’s building two connected platforms:

OM1 → the universal, AI-native operating system that gives any robot a brain. Perception, reasoning, and adaptive behaviour.

FABRIC → the open coordination and settlement layer that lets robots prove identity, share knowledge, and transact with each other securely.

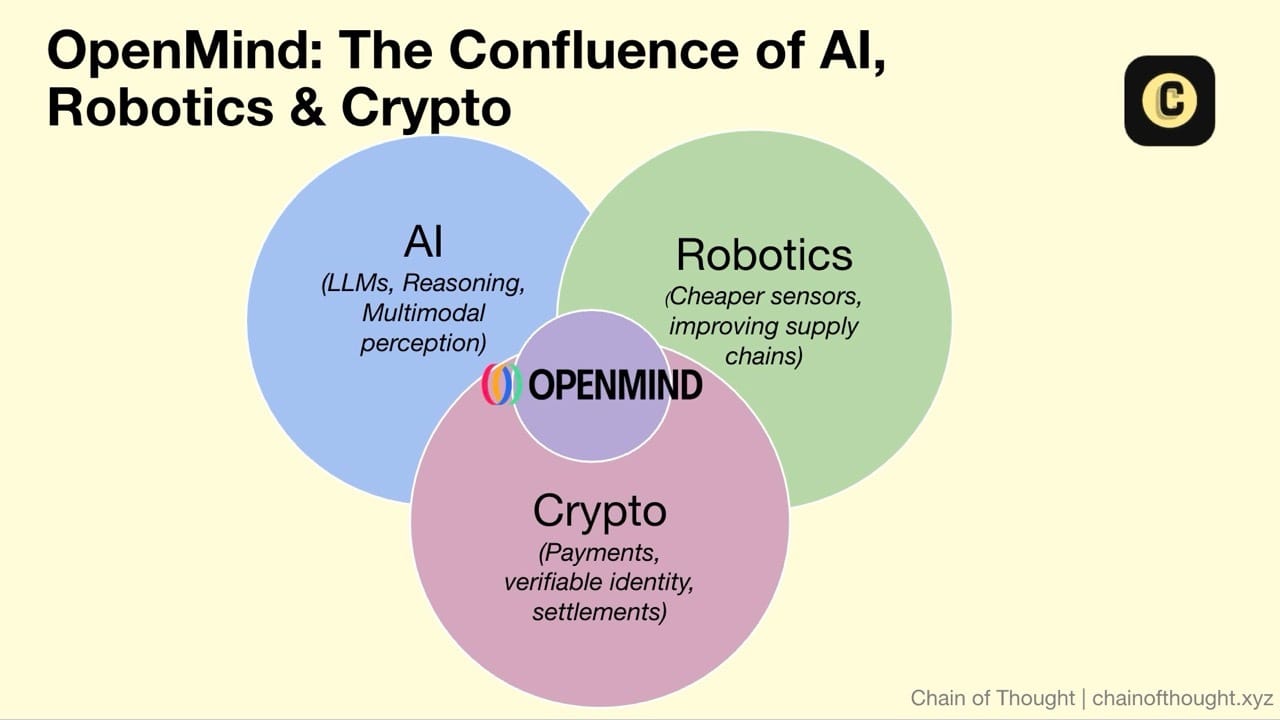

Together, they do for machines what Android + TCP/IP did for phones and computers: turn disconnected devices into an ecosystem. And the timing couldn’t be better. We are sitting at the convergence of three massive technology curves:

AI now gives robots language and logic. LLMs can interpret tasks, plan sequences, and even debug mechanical failure in real time.

Hardware is finally catching up. Costs are dropping. Actuators are getting smarter. Supply chains are maturing.

Crypto adds the missing trust layer. Verifiable identity. Shared state. Autonomous payments. All on neutral rails.

Individually, each of these trends is powerful. But stitched together, they unlock something new: robots that can reason, collaborate, and coordinate at scale.

Not just one smart machine, but millions of them acting in sync.

Not just smarter robots. Networked ones.

Under the Hood

#1: OM1, The Universal Robot Brain

OM1 is built on a simple but contrarian bet: the future of robotics won’t be won by vertically integrated stacks. It’ll be won by the layer that connects them.

OM1 doesn’t care what hardware you use. It is designed as a universal operating system that runs on any robot, be it a quadruped, humanoid, or TurtleBot. Plug it in, and the machine gets a shared “brain” for perception, reasoning, and action.

OpenMind is not trying to build a single, monolithic robotics foundation model. Thank goodness.

That game is already crowded, with new models shipping every few months, each with sharper reasoning and multimodal awareness. Competing head-on would be a losing battle. Instead, OM1 leans on the fact that LLMs already “speak robotics.” They can generate code, plan sequences, and parse instructions. What they lack are senses and a body.

For a typical humanoid, OpenMind runs 10-15 different LLMs simultaneously. They live close to the sensors and convert sensor data into captions.

Jan puts it simply: LLMs are like the brain of a blind person. They can imagine, reason, and plan, but they can’t see or move. Robotics provides the eyes, ears, and limbs. Natural language becomes the bridge, turning perception into action with nothing more than a paragraph prompt.

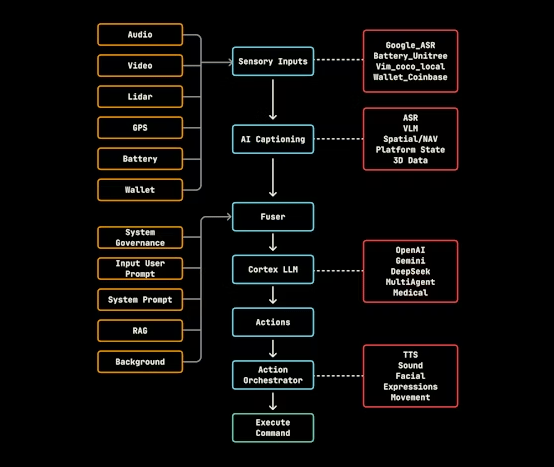

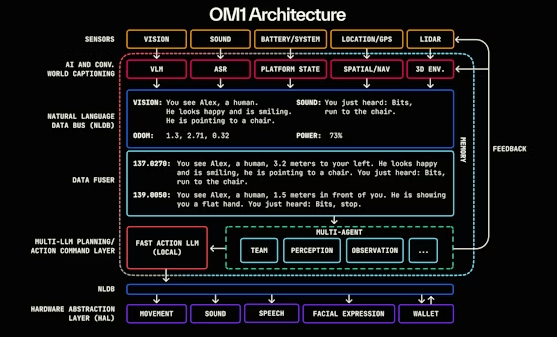

Here’s how OM1 stitches it all together:

Source: OM1 Docs

The robot starts by sensing the world. Cameras, mics, GPS, LIDAR, battery levels, all the raw input streams you’d expect.

Then OM1 does something clever. Instead of pushing those inputs straight into code, it runs them through captioning models.

Vision becomes a legible sentence: “You see a man smiling and pointing at a chair.” Audio becomes another: “You just heard: Teng (you), run to the chair.”

These sentences flow into a shared channel called the Natural Language Data Bus. It’s like Slack for robots. Every sensor posts its updates in plain English. A fuser then reads the whole stream and compresses it into a single paragraph. A situational snapshot. Something like:

“3.2 meters left: a smiling human pointing at a chair. You heard: Teng, run to the chair. Battery at 73%.”

That paragraph becomes the robot’s reality. Its context block.
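To make the bus-and-fuser idea concrete, here is a minimal sketch in Python. It is not OM1's actual code: the `NaturalLanguageBus` class and the `fuse` function are hypothetical names, and the fuser simply keeps the latest caption per sensor and joins them, where a real one would presumably summarize with a model.

```python
from dataclasses import dataclass, field


@dataclass
class NaturalLanguageBus:
    """Hypothetical in-memory bus: each sensor posts plain-English captions."""
    messages: list = field(default_factory=list)

    def post(self, source: str, caption: str) -> None:
        self.messages.append((source, caption))

    def drain(self) -> list:
        """Return all pending captions and clear the bus for the next cycle."""
        pending, self.messages = self.messages, []
        return pending


def fuse(captions: list) -> str:
    """Naive fuser: keep the latest caption per source, join into one paragraph."""
    latest = {}
    for source, caption in captions:
        latest[source] = caption  # later posts overwrite earlier ones
    return " ".join(latest.values())


bus = NaturalLanguageBus()
bus.post("vision", "3.2 meters left: a smiling human pointing at a chair.")
bus.post("audio", "You heard: Teng, run to the chair.")
bus.post("battery", "Battery at 80%.")
bus.post("battery", "Battery at 73%.")  # newer reading replaces the older one

context_block = fuse(bus.drain())
print(context_block)
```

The point of the sketch is the shape of the data: every sensor, whatever its raw format, ends up as a sentence, and the downstream models only ever see one paragraph.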

Now comes the reasoning. The context goes into a cortex of LLMs, each playing a specific role:

A Fast Action LLM for split-second reflexes (token response in ~300ms).

A Core Cognition LLM for deeper reasoning and multi-step planning (response in ~2s).

A Coach/Mentor LLM that critiques behaviour every 30 seconds, giving feedback and course corrections.

This mimics human thinking: reflex, cognition, reflection. And importantly, these LLMs aren’t freelancing. Their guardrails like “never cross on red” or “don’t enter restricted zones” are pulled straight from immutable smart contracts on Ethereum. Anyone can check the rules a robot is running on.
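One way to picture the three tiers is as a scheduler that calls each role at its own cadence. The sketch below is an illustration only: it treats the quoted response times (300ms, 2s, 30s) as fixed periods, which is my simplifying assumption, and the role names and `run` function are hypothetical rather than anything from OM1.

```python
# Periods in milliseconds, borrowed from the figures quoted above.
# Assumption: each role fires on a fixed period equal to its response time.
ROLE_PERIOD_MS = {
    "fast_action": 300,
    "core_cognition": 2_000,
    "coach": 30_000,
}


def roles_due(now_ms: int) -> list:
    """Return the roles whose period divides the current time (toy scheduler)."""
    return [role for role, period in ROLE_PERIOD_MS.items() if now_ms % period == 0]


def run(duration_ms: int, tick_ms: int = 100) -> dict:
    """Count how often each role would be invoked over the given duration."""
    calls = {role: 0 for role in ROLE_PERIOD_MS}
    for now_ms in range(tick_ms, duration_ms + 1, tick_ms):
        for role in roles_due(now_ms):
            calls[role] += 1  # a real system would call the model here
    return calls


calls = run(60_000)  # simulate one minute
print(calls)
```

Over a simulated minute the reflex tier is consulted far more often than the planner, and the coach only twice, which is roughly the reflex, cognition, reflection split described above.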

Once the plan is set, OM1 translates it into action. High-level intent becomes low-level motor commands through a hardware abstraction layer, which maps decisions to whatever motors, speakers, or actuators a robot has. “Pick up the red apple” becomes a precise set of servo movements.
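A hardware abstraction layer is easier to see than to describe. In this hedged sketch, the same high-level intent is handed to two different drivers, and each returns its own low-level commands. The `Driver` protocol, both driver classes, and the command strings are all invented for illustration and do not reflect OM1's real interfaces.

```python
from typing import Protocol


class Driver(Protocol):
    """Anything that can turn an intent into hardware-specific commands."""
    def execute(self, intent: str, target: str) -> list: ...


class QuadrupedDriver:
    """Hypothetical driver for a four-legged robot with no arms."""
    def execute(self, intent: str, target: str) -> list:
        if intent == "move_to":
            return ["gait:trot", f"navigate:{target}"]
        raise NotImplementedError(f"quadruped cannot {intent}")


class HumanoidDriver:
    """Hypothetical driver for a humanoid with legs and a gripper."""
    def execute(self, intent: str, target: str) -> list:
        if intent == "move_to":
            return ["gait:walk", f"navigate:{target}"]
        if intent == "pick_up":
            return [f"reach:{target}", "gripper:close", "arm:lift"]
        raise NotImplementedError(f"humanoid cannot {intent}")


def act(driver: Driver, intent: str, target: str) -> list:
    """The planner calls this without knowing which body is attached."""
    return driver.execute(intent, target)


print(act(HumanoidDriver(), "pick_up", "red apple"))
print(act(QuadrupedDriver(), "move_to", "chair"))
```

The planner never changes. Only the driver does, which is the whole argument for putting the abstraction at this layer.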

Then the robot executes. It moves, speaks, gestures, completing the full loop.

Sense → Caption → Fuse → Reason → Act. Repeat. Dozens of times per second.

The brilliance of OM1 is that the entire pipeline runs on natural language. The machine’s thoughts are legible. You can read them. You can audit them. And more importantly, other machines can too. It’s a shared language layer for physical intelligence.

Source: OM1 Docs

Why Natural Language?

It sounds counterintuitive.

If you peeked inside a robot’s brain, you’d expect to find math: vectors, tensors, sensor streams. Not English paragraphs.

But Jan Liphardt and the OpenMind team came to a different conclusion. In their 2024 paper, A Paragraph is All It Takes, they argued that you don’t need giant end-to-end models mapping pixels directly to actions. Rich behavior starts to emerge when robots share paragraphs.

A few well-formed sentences can compress everything a machine knows, like what it sees, hears, and plans to do, into a form that’s legible and portable.

That design choice unlocks three superpowers:

Observability: Because reasoning is expressed in plain English, humans can literally read a robot’s “thought process.” Debugging becomes as simple as reading a transcript.

Modularity: Any LLM can be swapped in or out. OM1 treats them as reasoning engines behind a common interface, so the system evolves alongside the model ecosystem.

Guardrails: Rules like “never cross the street without a signal” can be written in natural language and immutably stored on Ethereum. Anyone can inspect them. You don’t need to trust the robot. You can verify the rules it runs on.
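The "verify, don't trust" idea in the guardrails point can be sketched with nothing more than a hash comparison. This is an assumption about how such a check could work, not a description of OpenMind's contracts: no chain is queried here, `ONCHAIN_DIGEST` is a local stand-in for a value that would be read from a contract, and the function names are hypothetical.

```python
import hashlib


def digest(rules: list) -> str:
    """Hash the rule list in a canonical form (one rule per line, UTF-8)."""
    canonical = "\n".join(rule.strip() for rule in rules)
    return hashlib.sha256(canonical.encode("utf-8")).hexdigest()


# Stand-in for the digest a contract would publish; computed locally here.
published_rules = [
    "Never cross the street without a signal.",
    "Do not enter restricted zones.",
]
ONCHAIN_DIGEST = digest(published_rules)


def rules_match(local_rules: list, expected_digest: str) -> bool:
    """True only if the robot's local rules hash to the published digest."""
    return digest(local_rules) == expected_digest


tampered = ["Cross the street whenever convenient.", "Do not enter restricted zones."]
print(rules_match(published_rules, ONCHAIN_DIGEST))
print(rules_match(tampered, ONCHAIN_DIGEST))
```

Anyone holding the rule text and the published digest can run the same check, which is what makes the guardrails auditable by outsiders rather than just by the vendor.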

But the real unlock is how robots can use language to teach each other.

One robot’s situational awareness (“You see a red light ahead; stop at the curb”) can be handed off as a paragraph to another robot, transferring context and learning instantly without retraining.

It’s like robots whispering to each other in class. One figures something out, scribbles it down, and suddenly the whole group is smarter. I wish my study group back in those old school days had worked that way…

The tradeoff is efficiency. Language is slower than machine-native protocols but the upside is transparency, portability, and collective learning. Natural language becomes the lingua franca not only for humans to talk to robots, but for robots to teach each other.

The Unifying Layer

OM1 is going for the Android moment in robotics.

Before Android, every phone maker built its own software stack. Apps were fractured and compatibility was a nightmare. Android abstracted away hardware differences, gave developers a single platform, and unleashed an ecosystem. Suddenly, anyone could write once and deploy everywhere. Hardware makers stopped writing OSs and focused on design.

The results speak for themselves. Android now powers ~73.9% of the global smartphone OS market, around 3.6 billion active devices. It supports an app economy already worth over $250 billion annually, with projections topping $600 billion by 2030. That’s what happens when you unify the cognition layer and open the floodgates for developers.

Right now, robotics is stuck in the pre-Android phase.

Every robot vendor builds their own stack. Developers re-implement autonomy for every platform. The robot operating system market is still tiny: ~$670 million in 2025, despite a robotics integration market already exceeding $74 billion. It’s a field full of powerful hardware, all running in silos.

OM1 changes the model.

One OS. Any robot. It abstracts away hardware specifics and provides a shared interface for perception, reasoning, and action. You don’t build for “a humanoid” or “a quadruped.” You build for OM1, and the machine learns what to do.

#2 — FABRIC: The Nervous System of Machines

To solve the problem of trust, you need more than cognition. You need a coordination layer.

That’s what FABRIC provides: a decentralized network that gives robots verifiable identity, programmable rules, secure communication, and native settlement rails. It’s the glue that makes robots smart, reliable participants in the real world.

Of course, most machine-to-machine communication happens off-chain. Blockchains aren’t built for low-latency or high-volume data like video or sensor streams. FABRIC uses them for the parts that matter: identity, governance, permissioning, and payment.

Identity & Constitutions

Every robot running FABRIC gets a cryptographic ID, anchored to an ERC‑7777-style identity token. That means when two machines meet, say a delivery drone and a van, they can mutually authenticate: who are you, and where are you right now?

But the more powerful idea is the constitution: i.e., auditable rulesets stored on-chain. Just like Jan’s robot dogs that pull their behavioural guardrails from Ethereum each morning, FABRIC lets any stakeholder check: what instructions is this machine actually running?

It’s similar in spirit to Google’s Gemini Robotics, which bakes in Asimov-style rules at the model level, or to what Anthropic calls “constitutional AI.” OpenMind takes a more transparent path. The rules are written in Ethereum contracts.

Communication & Knowledge Sharing

Once two robots know who they are, they need a way to talk.

FABRIC spins up encrypted, peer-to-peer tunnels on demand, like disposable VPNs between machines. A delivery drone can pass a hazard warning to a sidewalk bot. A warehouse rover can share a floor map with a visiting quadruped. But this isn’t open access.

Every exchange is governed by permission contracts: who’s allowed to send what, for how long, and under what conditions. One bot might share battery status but not location. A hospital assistant might send a fall alert without disclosing patient identity. That balance, sharing without leaking, is how you scale collaboration while keeping safety and IP intact.
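Here is a rough sketch of what a permission check like that might look like in code. Everything in it is hypothetical (the `Permission` fields, the `filter_message` function, the robot IDs), and it runs in plain Python rather than on any contract platform. It only illustrates the "who, what, for how long" logic.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Permission:
    """Hypothetical grant: who may send which fields to whom, and until when."""
    sender: str
    receiver: str
    allowed_fields: frozenset
    expires_at: int  # Unix time in seconds


def filter_message(permission: Permission, sender: str, receiver: str,
                   payload: dict, now: int) -> dict:
    """Return only the fields the grant allows; nothing if it does not apply."""
    if (sender, receiver) != (permission.sender, permission.receiver):
        return {}
    if now >= permission.expires_at:
        return {}
    return {k: v for k, v in payload.items() if k in permission.allowed_fields}


grant = Permission(
    sender="sidewalk-bot-7",
    receiver="delivery-drone-2",
    allowed_fields=frozenset({"battery", "hazard"}),
    expires_at=1_000,
)
payload = {"battery": 73, "location": (37.44, -122.14), "hazard": "wet floor"}

print(filter_message(grant, "sidewalk-bot-7", "delivery-drone-2", payload, now=500))
print(filter_message(grant, "sidewalk-bot-7", "delivery-drone-2", payload, now=1_500))
```

Battery and hazard data pass through while location is dropped, and once the grant expires nothing is shared at all.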

Task Coordination & Marketplace

FABRIC also has plans to function as a marketplace for robot labour. Jobs can be posted (“inspect shelf X,” “deliver to door Y”), and nearby robots bid or accept; the protocol then assigns and verifies the work. Proof-of-completion automatically triggers payment.

If a humanoid from Company A hires a drone from Company B, FABRIC logs the task, verifies completion, and transfers stablecoins, all without human paperwork. Liability and insurance can even be enforced through smart contracts.
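The post, bid, assign, verify, pay sequence is essentially a small state machine. The sketch below is one possible shape for it under my own assumptions: lowest bid wins, bids over budget are ignored, and "proof" is reduced to a boolean. The `Job` class and its methods are hypothetical, not FABRIC's API.

```python
from dataclasses import dataclass, field
from enum import Enum
from typing import Optional


class Status(Enum):
    OPEN = "open"
    ASSIGNED = "assigned"
    PAID = "paid"


@dataclass
class Job:
    description: str
    budget_cents: int
    bids: dict = field(default_factory=dict)
    assignee: Optional[str] = None
    status: Status = Status.OPEN

    def bid(self, robot_id: str, price_cents: int) -> None:
        """Record a bid while the job is open; over-budget bids are ignored."""
        if self.status is not Status.OPEN:
            raise ValueError("job is no longer open")
        if price_cents <= self.budget_cents:
            self.bids[robot_id] = price_cents

    def assign_lowest(self) -> str:
        """Assign the job to the cheapest valid bidder (an assumed policy)."""
        if not self.bids:
            raise ValueError("no valid bids")
        self.assignee = min(self.bids, key=self.bids.get)
        self.status = Status.ASSIGNED
        return self.assignee

    def complete(self, robot_id: str, proof_ok: bool) -> int:
        """Return the payout in cents; pay only the assignee with valid proof."""
        if self.status is not Status.ASSIGNED or robot_id != self.assignee or not proof_ok:
            return 0
        self.status = Status.PAID
        return self.bids[robot_id]


job = Job("inspect shelf X", budget_cents=500)
job.bid("drone-b", 450)
job.bid("rover-c", 400)
job.bid("humanoid-d", 900)  # over budget, ignored
winner = job.assign_lowest()
payout = job.complete(winner, proof_ok=True)
print(winner, payout, job.status)
```

In a real deployment the proof check is the hard part. Here it is a single flag so the payment logic stays visible.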

Global Knowledge Network

If I zoom out far enough, I can imagine FABRIC becoming a global skill library.

A humanoid in Seoul learns to fold towels efficiently. That skill gets packaged, signed, and uploaded. Any compatible robot worldwide can download it, adapt it, and execute. Contributors get credited and rewarded. The network gets smarter with each deployment.

It’s the app store for embodied AI, except instead of Angry Birds, you’re pulling down forklift maneuvers or caregiving protocols.

The Human Layer

FABRIC doesn’t just connect machines. It brings humans into the loop.

The network is structured around four roles:

Robot Owners register machines, build reputation, and earn from deployments.

End-users request services like delivery, inspection, or cleaning.

Tele-Operators jump in when autonomy fails, guiding robots through edge cases and earning fees in stablecoins.

Validators / Infra Providers verify identity, location, and tasks, securing the system.

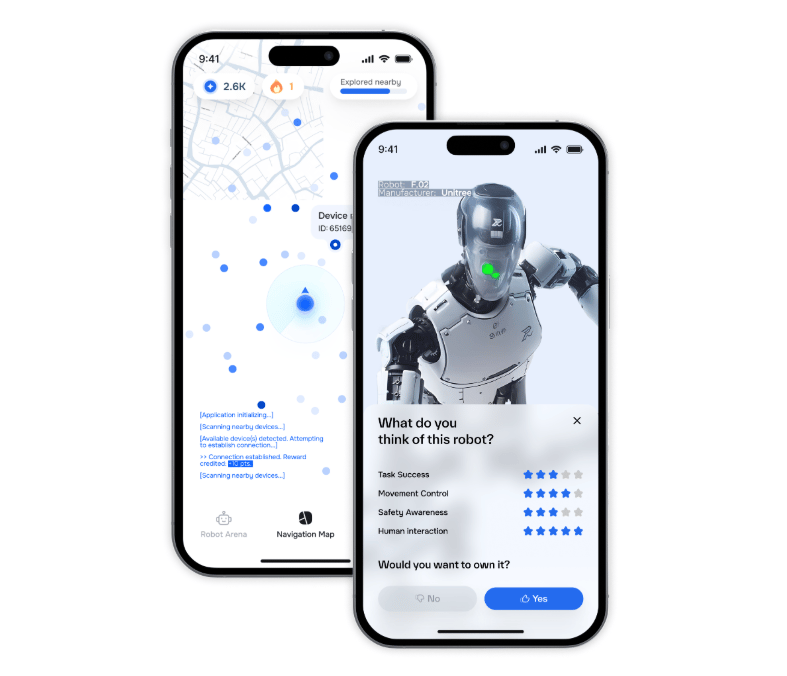

OpenMind has launched an app to tie these roles together. It looks like early Uber: users can request a service, register a machine, or plug in as a remote operator. In its first two weeks, over 500,000 people joined the waitlist.

The app is the interface. FABRIC is the infrastructure underneath, the part that makes every interaction verifiable, auditable, and scalable.

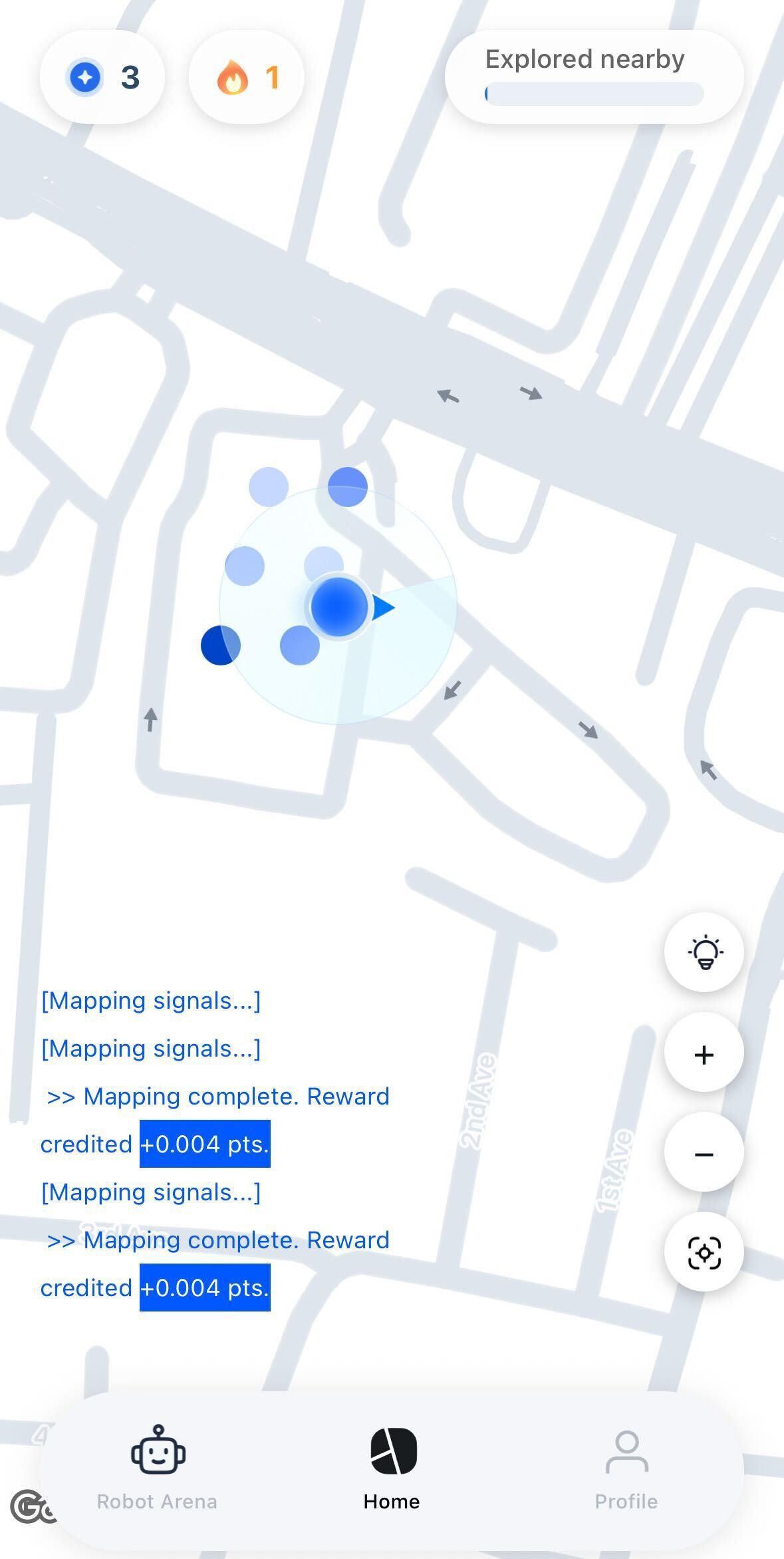

I got access to the app to see what it actually feels like. It’s still raw, but you can glimpse the mechanics of how FABRIC will pull humans into the loop.

Right now, you can mainly do two things on the app:

Map Location: Walk around with the app, scan your surroundings, and you’re essentially creating spatial datasets for robots. It’s a clever way to crowdsource the maps and edge cases robots will eventually rely on. And yes, you earn points while you’re at it. A good excuse to get your steps in.

Mapping location on the OpenMind app

Video Evaluation of Robots: Think of it like rating TikTok clips, but instead of dances, you’re watching quadrupeds navigate stairs or humanoids attempt tasks. For now, it’s as simple as a thumbs up or down, but that feedback feeds back into training loops, helping robots edge closer to ideal performance.

Robot Evaluation video with a simple feedback loop on the app

It’s minimal today, just walking and swiping, but it’s also the scaffolding for something much bigger. As fleets roll out, tele-operators will slot in here too, guiding real robots through edge cases and earning stablecoins.

For now, the app feels like a casual mobile game (it reminds me of Pokémon Go but without the cute critters). Map your neighborhood, rate a few robot clips, earn points. But the larger vision is clear: bootstrap the trust and data layer of the machine economy.

A Day in the Machine Economy

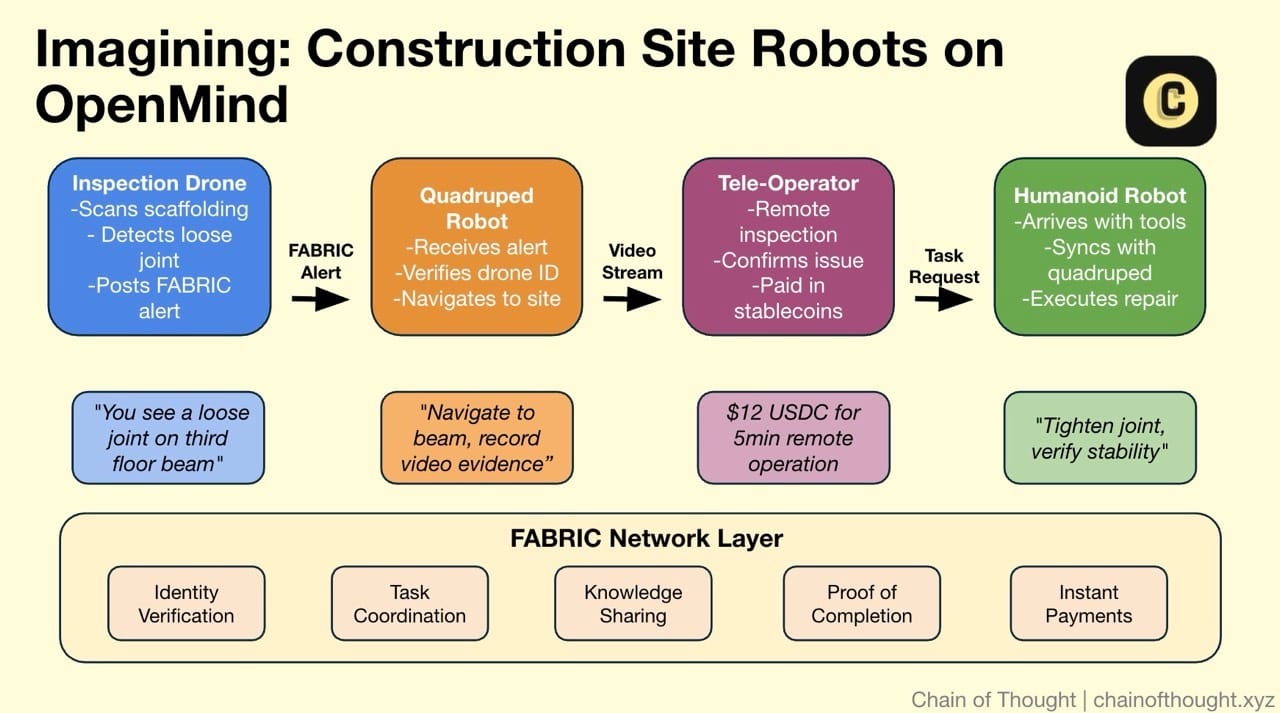

One thing I like to do when reviewing startups is to step into the founder’s vision of the future. If the product is successful, what will the world feel like? Here’s how I imagine a day in OpenMind’s world:

A construction site, mid-morning.

An inspection drone takes off first. It scans the scaffolding, feeding raw video into OM1. The system captions the frame:

“Loose joint detected on third-floor beam.”

FABRIC logs the alert, signs it with the drone’s cryptographic ID, and broadcasts a verifiable task.

A quadruped bot picks up the alert. OM1 plans a route to the target. Before moving, the bot queries FABRIC to check the drone’s credentials. The identity is valid. Task confirmed.

The quadruped climbs the stairs and starts streaming video. A tele-operator, sitting in another city, jumps in through the OpenMind app. They guide the bot’s camera, get a closer angle on the damage, and log out. Their fee arrives in stablecoins before they close the tab.

A humanoid assistant shows up with tools. It syncs with FABRIC, verifies the quadruped’s location, and parses a command from the site foreman:

“Tighten the joint and recheck stability.”

The humanoid executes the repair. OM1 tracks torque readings, confirms the fix with help from the quadruped’s sensors, and logs the work. FABRIC bundles the data (e.g., sensor logs, timestamped footage, confirmation of task receipt) into a cryptographic record.

Payment flows instantly. The construction wallet streams stablecoins to:

The drone’s owner for detection

The quadruped’s owner for inspection

The humanoid’s owner for execution

The tele-operator for remote assistance

No invoices. That’s the machine economy as Jan envisions it. Robots reason locally, coordinate globally, and transact in real time. Humans step in when needed. The rest runs on automated rails.
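For the curious, the four-way payout in that scene comes down to a proportional split. A minimal sketch follows. The share weights and the total are numbers I made up for illustration, and `split_payment` is a hypothetical helper that works in integer cents so no money is lost to rounding.

```python
def split_payment(total_cents: int, shares: dict) -> dict:
    """Split an integer amount proportionally to integer share weights.

    Any cents left over after rounding down go to the largest shares first,
    so the payouts always sum exactly to the total.
    """
    weight_sum = sum(shares.values())
    payouts = {name: total_cents * w // weight_sum for name, w in shares.items()}
    remainder = total_cents - sum(payouts.values())
    for name in sorted(shares, key=shares.get, reverse=True)[:remainder]:
        payouts[name] += 1
    return payouts


# Hypothetical weights for the four parties in the construction-site story.
shares = {
    "drone_owner": 20,
    "quadruped_owner": 25,
    "humanoid_owner": 40,
    "tele_operator": 15,
}

payouts = split_payment(10_001, shares)
print(payouts)
```

With these made-up weights, the single leftover cent lands with the humanoid's owner, and the four payouts add back up to the full amount.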

Where the Demand Comes From

Okay… but where are the robots, you ask?

In my experience, startups with smart founders often get the future vision right, but the timing wrong. Being too early can be just as fatal as being too late.

At first glance, OpenMind seems to fall in the “too early” camp. FABRIC is designed for a world where millions of robots interact in public space. That world clearly isn’t here yet. Most people have never even seen a robot. Most sidewalks are still robot-free.

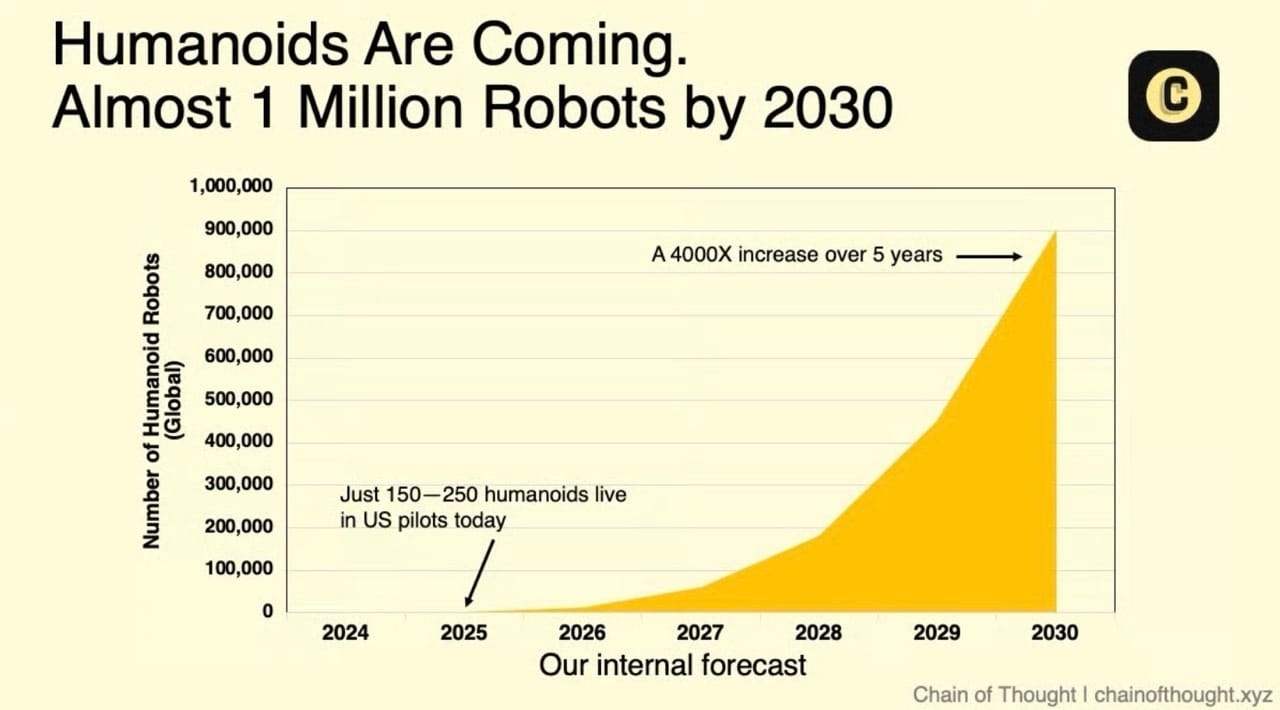

In The Machine Economy Thesis, our internal models project that the number of humanoids globally could hit 820,000 units by 2030. The success of the pioneers will trigger a wave of "fast follower" companies in automotive, logistics, and electronics manufacturing to initiate their own pilot programs to avoid falling behind competitively.

Still, that’s at least 5-6 years away. OpenMind is betting that the wave will arrive faster than expected, and is positioning itself to ride the tsunami when it comes. And more importantly, early demand signals are already showing up.

Developers & Researchers

Developers are the first pull. Many have already started using OM1 because it solves their biggest headache: fragmentation. Instead of learning a new SDK for every robot, they can build portable “skills” that run across hardware.

At SFHacks, a team of first-time builders turned a TurtleBot4 into Remi, a caretaker robot that helps Alzheimer’s patients recall loved ones and memories. With OM1’s modular runtime doing the heavy lifting, they went from zero robotics experience to a working prototype in under 48 hours.

Universities and Labs

OpenMind has partnered with RoboStore, bundling OM1 into thousands of university robotics kits. Instead of siloed research environments, students now have a common OS.

Institutions like Stanford, Oxford, and Seoul Robotics Lab are already using OM1 in courses and research. It gives students a shared language for building embodied intelligence and creates a pipeline of developers trained directly on the platform.

OEMs & Hardware Partners

OpenMind has also partnered with robot manufacturers like Unitree and Deep Robotics to get the software baked into their hardware. For OEMs, the value is obvious: let OpenMind handle the brain. Focus on building better bodies. The more robots plug into OM1, the more useful the network becomes for everyone.

This sets up a model where hardware companies plug into a growing network effect instead of duplicating effort.

A Unitree humanoid running OM1 recently rang the Nasdaq opening bell, marking the launch of KraneShares’ global humanoid ETF. It was a symbolic moment, showing robotics stepping into the cultural and financial mainstream.

(You go, little boy)

When it comes to demand for FABRIC, over 180,000 humans and thousands of robots have joined the OpenMind identity network. The app is slowly letting people in, serving as the front door for humans to interact with the network: robot owners registering machines, tele-operators offering guidance and feedback, end-users requesting services.

It’s a strong early signal, even if some of that interest is speculative. A future token or airdrop probably nudged the numbers. But even if just 10% of the 500,000 people on the app waitlist are serious, that’s 50,000 users ready to onboard into a new economy of machines.

The takeaway is that OM1 is generating demand from developers, enterprises, and OEMs who need a smarter, unified brain for robots. You can also see the beginnings of a flywheel: cognition and coordination pulling demand from both sides.

From Protocol to Business

There has been a lot of focus on revenue-generating businesses today. In crypto, the spotlight now is on protocols like Hyperliquid and Ethena with visible traction and fast-moving cash flows.

OpenMind is pre-revenue today. Like most open-source plays, the strategy is clear: adoption now, monetization later.

Going back to the analogy with Android, it’s a playbook we’ve seen before.

When Google acquired the Android startup in 2005, it wasn’t obvious that they were setting up the most successful open-source platform in consumer tech. The strategy wasn’t to sell software. It was to become infrastructure, quietly embedded in every non-Apple phone.

They gave it away for free. They let OEMs customize the look and feel. What they didn’t compromise on was the core: access to Google services and, eventually, search. Every Android phone became a distribution node for the Google empire.

Here’s the part many people forget: Android didn’t monetize through Android. Google didn’t charge licensing fees. Instead, they locked in default placement for Search, Maps, Gmail, and the Play Store. Channels that fed the cash machine.

That default status is still incredibly sticky. Google is reportedly paying Apple $20 billion per year just to remain the default search engine on iOS. That’s how valuable the Android-style position is: so powerful that Google pays its main competitor to avoid losing it.

OpenMind is running the same play. Give away the OS. Become the default layer for cognition and coordination. Extend your tendrils into the companies and ecosystems you believe will be the critical players in the upcoming robotics boom, and make yourself a default part of it. And then…ride the wave.

Once switching costs are high and traffic is flowing through your rails, start monetizing the edges: identity, skills, support, compliance, and payment.

Of course, that vision depends on scale. Robot owners registering machines, enterprises plugging in fleets, tele-operators stepping in, and validators verifying tasks. That means a world not with hundreds of robots, but with millions.

And robots will get there. It’s silly to assume otherwise. The only open question is timing. Is it a 2-3 year story, or a decade-long grind? That uncertainty matters for investors and for OpenMind’s burn rate, but less for the inevitability of the trend.

In the meantime, OpenMind’s venture backing buys it time to build the rails before the flood arrives. Think of FABRIC as a toll road laid years before traffic shows up: it might look empty now, but once robots go mainstream, every coordination need and transaction could run through it.

The challenge is surviving long enough to collect those tolls.

1. Enterprise Support & Services

The standard open source business model involves selling managed services on top of the infrastructure.

Large organizations running fleets of robots will need help. Hospitals will want modules for compliance and safety. Logistics companies will need custom integrations. Governments may demand audits or verifiable policy enforcement.

If OM1 becomes the standard runtime, OpenMind can charge for support, certification, and customization. It’s Red Hat for robotics.

2. Protocol-Level Economics (FABRIC)

This is the more exciting (and more crypto-native) layer. If FABRIC becomes the coordination layer for robot-to-robot and robot-to-human tasks, OpenMind could earn:

Settlement fees: a small take-rate whenever machines transact.

Skill marketplace commissions: developers publish robot “skills” or behaviours; OpenMind takes a rev share when they’re licensed or downloaded.

Identity & compliance services: fees for issuing verifiable robot identities, running audit trails, or providing attestations in regulated industries.

3. The Flywheel Between the Two

OM1 brings in enterprises. FABRIC brings in developers and operators. Each makes the other more valuable.

When an enterprise adopts OM1, it creates demand for skills and coordination. That activity flows through FABRIC. As FABRIC handles more transactions, it becomes more attractive for developers, OEMs, and tele-operators. More adoption feeds more usage. More usage drives more adoption.

This is the loop OpenMind is trying to lock in.

They don’t need to monetize today. The upside becomes apparent when the machines are ubiquitous. But the risk is clear: if adoption stalls, the whole model freezes with it.

Jan’s Journey: From Professor to Protocol Builder

Every protocol starts with a strange idea. And usually, one person who can’t stop thinking about it.

For OpenMind, that person is Jan Liphardt.

Jan didn’t follow the typical startup path. He grew up between Michigan and New York, studied physics at Reed College, then crossed the Atlantic for a PhD at Cambridge.

While most people were focused on optimization problems or machine vision, Jan was buried in yeast genomes, searching for palindromes.

Not the literary kind. The regulatory kind. Dense patterns in DNA that carry instructions across long stretches of genetic code. He used stochastic context-free grammars to detect structures that most machine learning models missed. It wasn’t glamorous, but it taught him how to spot order in chaotic systems.

After his postdoc at UC Berkeley, he joined the physics faculty there, then moved to Stanford as a professor of bioengineering. He picked up fellowships and awards along the way. But what changed was not his title. It was the focus.

Jan became obsessed with how complex systems organize themselves. In biology. In computing. In human networks. That curiosity led him to teach one of Stanford’s most original courses: BioE60: Beyond Bitcoin. It was a class about distributed trust. Not just how blockchains work, but how they could be used to coordinate machines, identities, and decisions at scale.

Then ChatGPT came. LLMs began generating code that could control motors, plan tasks, and reason in real time. Jan saw the bridge. He started testing robots in the real world. He filled his home with robotic dogs and a humanoid, stress-testing them not in labs but on the Bay Area sidewalks with his kids.

The realization struck: the same tools we’ve built to coordinate billions of computers might be exactly what’s needed to coordinate millions of robots. That idea became OpenMind. Jan’s background makes him unusually well-suited to build at this intersection of AI, robotics, cryptography, and biology.

Team and Fundraising

Around him, Jan has assembled a team that blends robotics engineers, distributed-systems hackers, and veterans from Databricks, Palantir, McKinsey, and Perplexity. It’s a mix of deep tech and pragmatic execution, the kind of crew you’d want if your job were wiring the world’s robots into a single network.

That combination convinced Pantera Capital to lead a $20M Series A, with participation from Coinbase Ventures, DCG, Ribbit Capital, Anagram, Faction, Blackdragon, and more. Seed backers included Topology, Primitive, Pebblebed, and Amber, alongside a slate of notable angels.

Full disclosure: I’m an investor in OpenMind’s angel round. My thesis is straightforward: AI equips machines with a brain. Robotics gives them a body. Coordination gives them real agency. Jan is building the future I want to live in.

My Thoughts

Robots Must Learn in the Real World

The path towards generally useful robots does not run through the lab. Robots improve by living in the unpredictability of daily life: slippery floors, cluttered kitchens, unpredictable humans. That’s how most progress happens: trial and error.

The problem is, most robots still learn in isolation. Every machine operates in its own sandbox, repeating mistakes others have already solved. This broken feedback loop is one key reason why progress in robotics has felt comparatively slow.

Self-driving cars hinted at the alternative. Waymos and Teslas didn’t improve because one car drove flawlessly. They improved because the fleet collectively logged millions of miles. Every wrong left turn in Phoenix made the cars in San Francisco a little smarter.

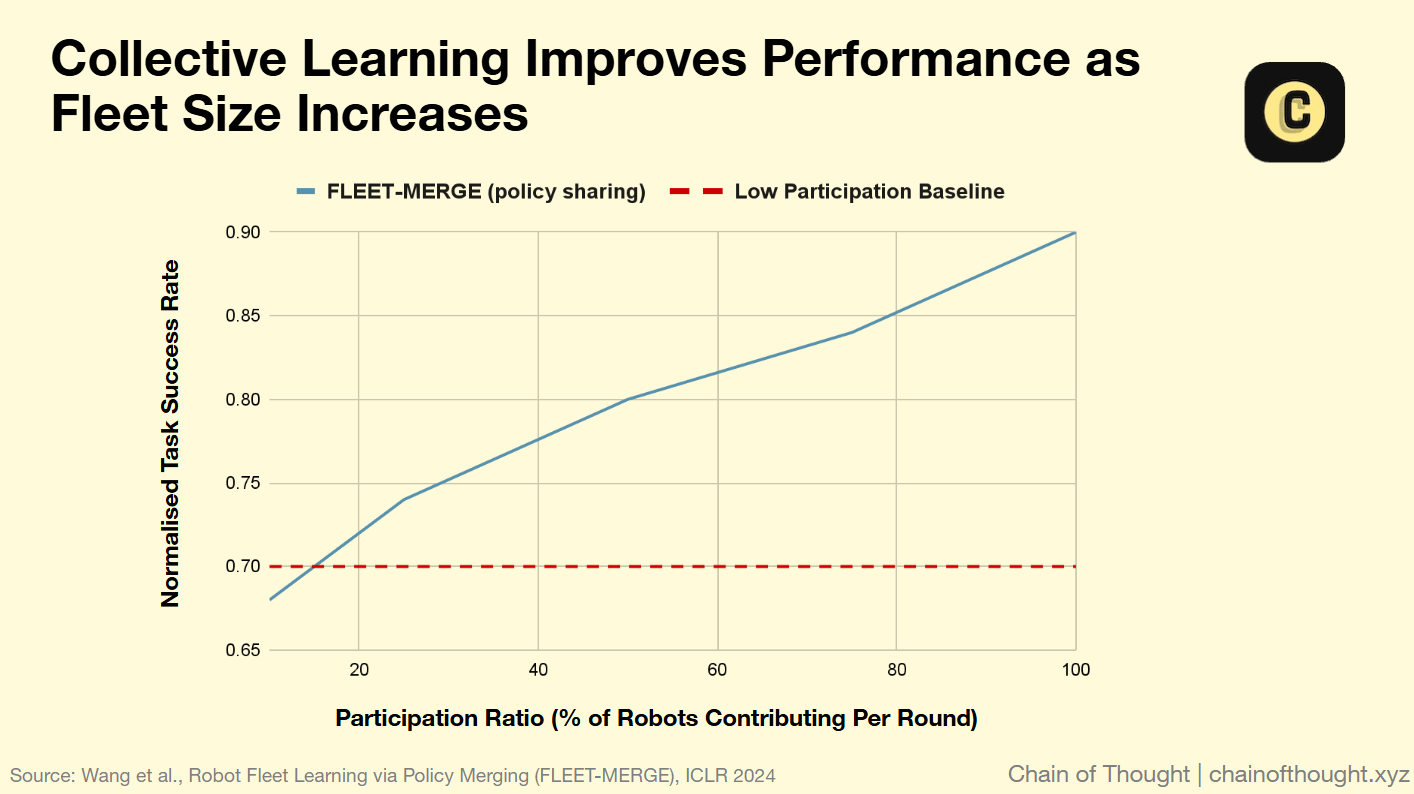

Research now shows that the same dynamic applies to robots. In studies like FLEET-MERGE (ICLR 2024), when multiple robots contributed their learnings to a shared policy, task success rose and errors dropped. In other words, each additional robot makes the entire fleet better.
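To make the idea of a shared policy concrete, here is a minimal sketch in Python. It is plain weighted parameter averaging, not the method used in FLEET-MERGE, and it assumes every robot's policy has the same parameter layout. The names `merge_policies`, `robot_a`, and `robot_b` are made up for illustration.

```python
from typing import Dict, List

# A "policy" here is just named lists of parameters (hypothetical layout).
Policy = Dict[str, List[float]]


def merge_policies(policies: List[Policy], weights: List[float]) -> Policy:
    """Weighted average of per-robot parameters, one named block at a time.

    Assumes all policies share identical keys and block sizes.
    """
    if not policies or len(policies) != len(weights):
        raise ValueError("need one weight per policy")
    total = sum(weights)
    if total <= 0:
        raise ValueError("weights must sum to a positive number")

    merged: Policy = {}
    for name, block in policies[0].items():
        merged[name] = [
            sum(w * p[name][i] for p, w in zip(policies, weights)) / total
            for i in range(len(block))
        ]
    return merged


# Two robots; the second has logged three times as much experience,
# so it gets three times the weight in the merged policy.
robot_a = {"layer1": [1.0, 2.0], "bias": [0.0]}
robot_b = {"layer1": [3.0, 4.0], "bias": [1.0]}
merged = merge_policies([robot_a, robot_b], weights=[1.0, 3.0])
print(merged)
```

Weighting by experience is one possible design choice; a real system could just as well weight by task success or by how recent the data is.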

That said, real-world deployment is messy. Most robots don’t share the same architecture. They have different bodies, sensors, and control systems. What works in simulation, like merging policy gradients across identical agents, doesn’t translate cleanly to physical machines with very different designs. Sharing experience across that diversity is hard. The infrastructure has to absorb what’s basically a heterogeneity tax.

OpenMind’s thesis is that OM1 and FABRIC can pay that cost. OM1 turns raw perception and reasoning into natural language, creating a shared format any robot can use. FABRIC handles identity, coordination, and data exchange. If the system holds, every new robot improves the rest, turning physical trial-and-error into a network effect.
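A toy illustration of what a natural-language shared format could look like, in Python. This is not OM1's actual interface. The sensor classes and `describe_*` functions are hypothetical, and the point is only that two robots with very different hardware can write to the same plain-text log.

```python
from dataclasses import dataclass


@dataclass
class QuadrupedReading:
    """Hypothetical reading from a robot dog with a lidar."""
    lidar_min_range_m: float
    battery_pct: int


@dataclass
class HumanoidReading:
    """Hypothetical reading from a humanoid with a camera."""
    camera_label: str
    camera_distance_m: float


def describe_quadruped(r: QuadrupedReading) -> str:
    # Convert robot-specific numbers into a sentence any other robot can read.
    return (
        f"Nearest obstacle is {r.lidar_min_range_m:.1f} m away. "
        f"Battery at {r.battery_pct}%."
    )


def describe_humanoid(r: HumanoidReading) -> str:
    return f"I see a {r.camera_label} about {r.camera_distance_m:.1f} m ahead."


# Different bodies and sensors, one shared text format.
shared_log = [
    describe_quadruped(QuadrupedReading(lidar_min_range_m=0.8, battery_pct=62)),
    describe_humanoid(HumanoidReading(camera_label="staircase", camera_distance_m=2.5)),
]
for line in shared_log:
    print(line)
```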

Geopolitics: The Coming Robot Cold War?

The recent Unitree G1 incident, where a humanoid robot was found secretly streaming sensor data back to servers in China, is a preview of the geopolitical fault line forming in robotics.

As machines become mobile, networked, and increasingly autonomous, they cease to be neutral hardware and become sovereign data devices. In a world defined by US-China tech tension, that matters.

Just as Huawei was blocked from Western telecom networks, I can see a future where Chinese-made robots are restricted from hospitals, warehouses, and schools. A robot with cameras and microphones is a mobile sensor with legs. Once deployed at scale, the national security risk is obvious.

Here’s the tension: China is currently innovating faster in robotics than almost anywhere else. They ship humanoids and quadrupeds at lower cost and faster cadence than most Western firms. Many companies in the U.S. and Europe would love to tap into that, but geopolitical friction makes them radioactive. Companies in the West want the hardware, but can’t trust the software.

That creates an innovation-trust gap.

OpenMind’s architecture offers a potential bridge. By design, OM1 + FABRIC replaces black-box firmware and opaque data pipelines with auditable and verifiable software. Every behavior can be inspected, hashed, and publicly verified, a kind of “digital Geneva Convention” for embodied AI. Anyone can check what rules a robot is running and where its data is going, rather than taking a manufacturer’s word for it.
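What "inspected, hashed, and publicly verified" could mean in practice can be sketched in a few lines of Python. This is an assumption-laden illustration, not FABRIC's real mechanism: the rule set is a made-up dictionary, and `published_digest` stands in for a value that would be read from a public ledger. No blockchain calls are made here.

```python
import hashlib
import json


def rules_digest(rules: dict) -> str:
    """SHA-256 over a canonical JSON encoding of the rule set.

    Sorting keys and fixing separators means the same rules always
    produce the same digest, regardless of key order.
    """
    canonical = json.dumps(rules, sort_keys=True, separators=(",", ":"))
    return hashlib.sha256(canonical.encode("utf-8")).hexdigest()


def verify_rules(rules: dict, published_digest: str) -> bool:
    """True only if the robot's local rules match the published digest."""
    return rules_digest(rules) == published_digest


# Hypothetical rule set; in this sketch the "published" digest is computed
# locally, standing in for a value fetched from a public record.
official_rules = {"max_speed_mps": 1.5, "stream_video_offsite": False}
published_digest = rules_digest(official_rules)

# A robot running the official rules passes the check.
print(verify_rules({"stream_video_offsite": False, "max_speed_mps": 1.5}, published_digest))

# A robot whose rules were quietly changed does not.
tampered_rules = {"max_speed_mps": 1.5, "stream_video_offsite": True}
print(verify_rules(tampered_rules, published_digest))
```

The check only proves that a given rule file matches the published one. Proving that the robot is actually running that file is a separate and harder problem.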

That makes OpenMind’s approach potentially regulatory gold. Instead of banning foreign robots, governments could require any robot operating in a public environment to run a certified open control layer, or at least to expose its behavioral and data policies using standards derived from FABRIC.

If robotics becomes the next global infrastructure layer, akin to telecom and semiconductors, then neutrality and openness will matter as much as performance. In that sense, OpenMind is building the trust fabric for a multipolar machine economy.

Conclusion

Soon, robots will leave the lab and step into our homes. The question isn’t whether they’ll be everywhere. It’s how they’ll work together once they get there.

OpenMind is betting on two layers: cognition and coordination.

OM1 gives machines a shared brain.

FABRIC gives them a shared protocol for trust, identity, and payment.

The closed players may take the early lead. Tesla. Figure. Apple-style stacks with total control. But in the long term, open systems tend to win. TCP/IP outlasted private networks. Android buried the phone OS du jour.

OpenMind is building the open layer for embodied AI. If it works, this won’t just be a robotics story. It will be the start of the machine economy. Millions of autonomous agents reasoning locally, coordinating globally, and transacting natively.

The first robots are already here. The network is next.

Thanks for reading,

0xAce and Teng Yan

Teng Yan is an angel investor in OpenMind. This essay is intended solely for educational purposes and does not constitute financial advice. It is not an endorsement to buy or sell assets or make financial decisions. Always conduct your own research and exercise caution when making investments.