The Chain of Thought Decentralized AI Canon is my running attempt to chart where AI and crypto collide, overlap, and fuse into something new.

Each Canon zooms in on a different layer of this economy (trust, data, cognition, coordination, robotics) and then pans out to show how they connect into a single system of intelligence and incentive.

The Canons shift as the terrain does. New essays, data, and debates reshape the map every time we learn something worth revising.

Subscribe if you want to follow this live experiment in mapping the intelligent economy.

Most AI models still run on faith.

“We promise it’s GPT-4.5.”

“We promise we trained on the dataset we said we did.”

“We promise the output’s legit.”

But we can’t check. The model is a black box. The server is opaque. There’s no proof of what ran, how it ran, or whether the answer was manipulated along the way.

Verifiable AI is a response to that gap. It’s a growing stack of techniques, including mathematical proofs, crypto-economic incentive design, and secure hardware, all aimed at replacing claims with evidence. Not just “we did this,” but “here’s proof we did.”

If it works, verifiability becomes the baseline for high-stakes deployments: public infrastructure, decentralized finance, anywhere outputs have weight.

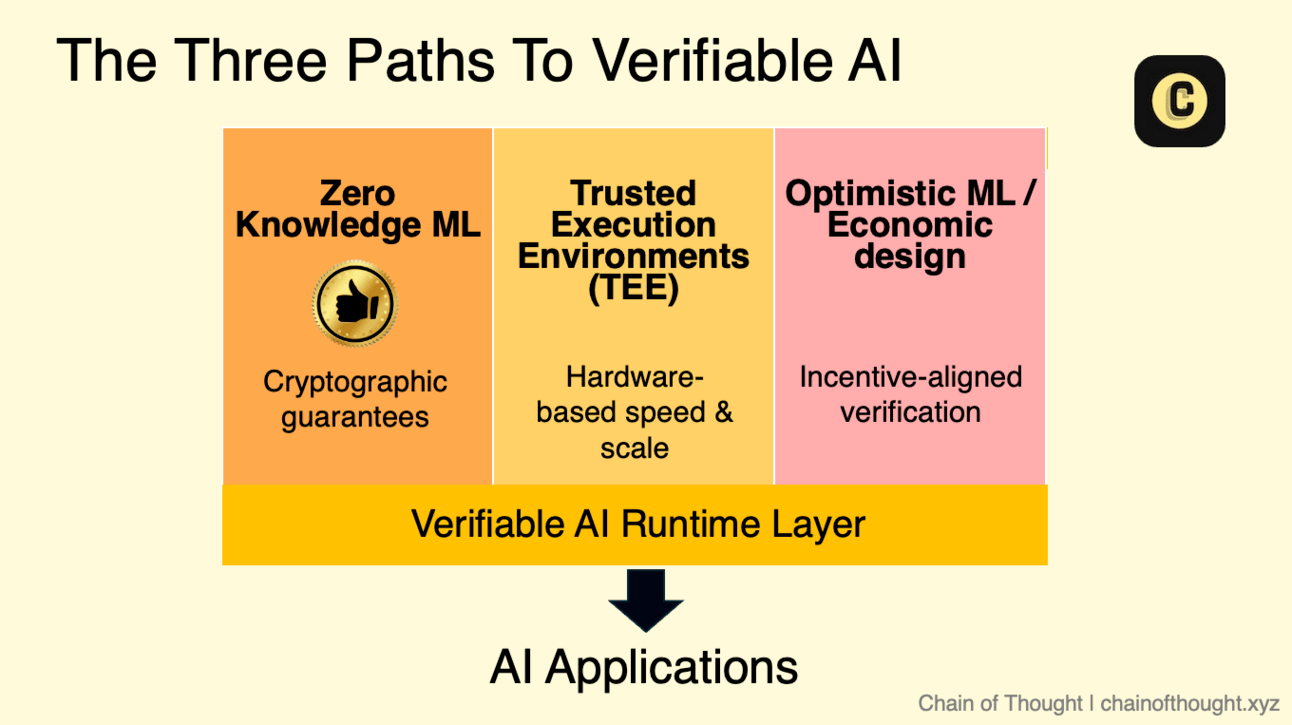

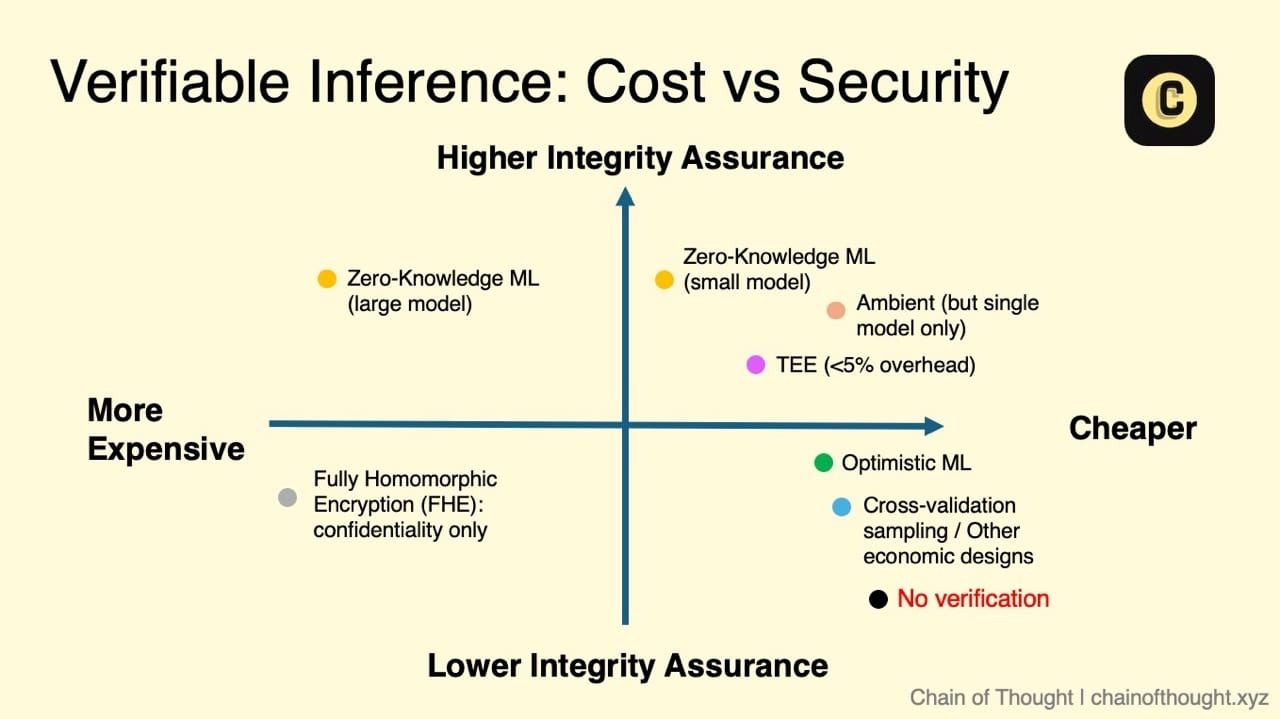

But the path is messy. Builders face a familiar bind, one I’ve started calling the Verification Trilemma. You can optimize for:

Cryptographic rigor with zero-knowledge machine learning (zkML), which offers mathematical guarantees but comes at a steep computational cost.

Economic scale with optimistic methods. Fast and cheap, but only if incentives are tuned and watchers stay sharp.

Practical speed with trusted execution environments (TEEs). Easy to deploy, but you’re betting on the integrity of the chip.

No single solution clears all three corners. Each comes with tradeoffs, each breaks somewhere.

What we’re presenting here is a map of the land. We’ll break down each approach: how it works, where it’s usable today, and what it might unlock if it keeps improving. This is our attempt to trace how verifiable AI might actually land in production.

Why Verification Matters: Moving Beyond “Just Trust Us”

Blockchains and AI are, in many ways, inherently at odds.

One is deterministic, transparent, and built around small, repeatable computations spread across many machines. The other is probabilistic, compute-heavy, and opaque, relying on massive parallelism and GPU acceleration.

Running a 70-billion-parameter LLM on every blockchain node is impossible today. So we compromise. The model runs off-chain, in a centralized environment. Only the final output makes it back on-chain. A black box.

But that black box raises critical questions. A few concrete examples:

The Bait-and-Switch: You pay a premium to access GPT-4.5, but without proof, how can you be sure you’re not being served a cheaper, less capable model like GPT-4?

DeFi Trading Agents: Off-chain trading agents optimize yields and execute trades, but without verifiability, users can’t confirm that risk constraints or strategies are being followed. Could the agent secretly deviate from its stated logic, exposing users to unforeseen risks?

Today, AI services rely on brand reputation, legal contracts, and goodwill. For low-stakes applications, that’s often enough. But crypto offers something AI inherently struggles to provide: trust without centralization.

As AI begins to steer real-world decisions in finance, medicine, and public governance, the cost of blind trust skyrockets. When the stakes rise, the demand for decentralized, auditable AI will explode.

"Don't trust, verify" must be woven into the fabric of the system today, while the systems are still malleable. Once path dependence kicks in, it’ll be too late.

The TAM for Verification

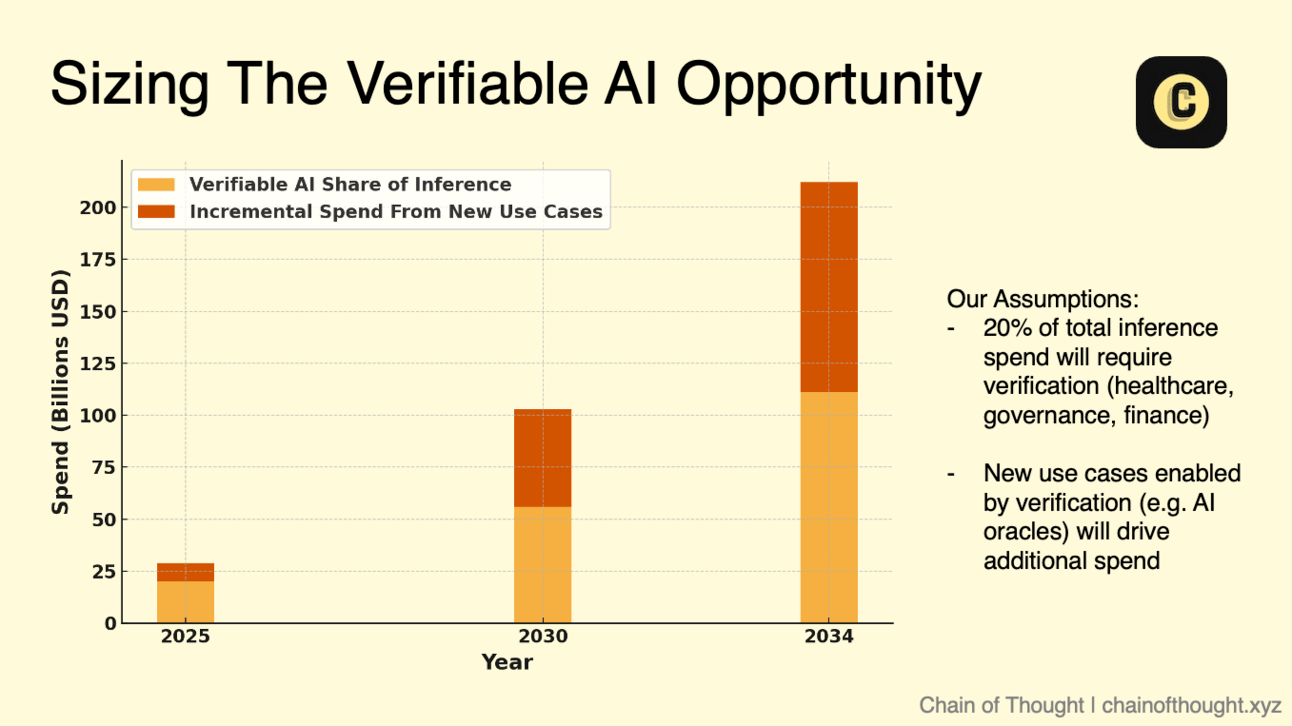

Let’s also get practical. Why should anyone care about verification? Is there real demand for verifiable inference? No one seemed to be modeling it, so we took a first pass.

Short answer: yes. Demand is sizeable.

We estimate that verifiable inference could represent an annual opportunity of $100+ billion by 2030, growing to more than $212 billion by 2034.

Here’s the rough logic:

Global AI inference spend is expected to reach $255 billion annually by 2030. We believe that is a conservative base case.

We assume 20% of that spend will require verifiability, particularly in sectors such as healthcare, government, finance, and regulated enterprise software.

On top of that, new use cases emerge that only make sense once inference is provable. These include AI oracles, content provenance systems, confidential RAG, and other trust-sensitive applications discussed later in this report.
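As a sanity check on the logic above, the arithmetic fits in a few lines of Python. This is our reconstruction, not the report’s model. It assumes the two components simply add, so the 2030 headline figure minus the regulated-spend share gives what new use cases would have to contribute.

```python
# Back-of-envelope reconstruction of the 2030 estimate (our assumption:
# the regulated share and the new use cases simply add up).

INFERENCE_SPEND_2030_BN = 255.0  # projected global AI inference spend, $B/yr
VERIFIABLE_SHARE = 0.20          # share assumed to require verifiability
HEADLINE_2030_BN = 100.0         # headline opportunity ("$100+ billion")


def regulated_component(spend_bn: float, share: float) -> float:
    """Existing inference spend that would need verification, in $B."""
    return spend_bn * share


def implied_new_use_cases(headline_bn: float, regulated_bn: float) -> float:
    """What new use cases must add if the two components simply sum."""
    return max(headline_bn - regulated_bn, 0.0)


regulated = regulated_component(INFERENCE_SPEND_2030_BN, VERIFIABLE_SHARE)
new_cases = implied_new_use_cases(HEADLINE_2030_BN, regulated)
print(f"regulated: ${regulated:.0f}B, implied new use cases: ${new_cases:.0f}B")
# regulated: $51B, implied new use cases: $49B
```

Under this reading, roughly half of the 2030 figure would have to come from applications that do not exist yet.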

Part I: The Verification Trilemma

There’s no silver bullet for verifiable AI. Instead, every design is a compromise, balancing cryptographic rigor, economic viability, and real-world usability. There are three primary approaches.

1. Zero-Knowledge Machine Learning (zkML): The Path of Cryptographic Purity

zkML aims to prove that a model ran correctly, without revealing its internals. Instead of trusting a server to run a model as claimed, zkML lets a prover compute an output, like y = f(x), and attach a proof that y truly came from applying f to x.

A verifier can check this proof in milliseconds, even if the model took minutes to run. The proof itself can keep the input and the model weights private; the verifier learns only that the claimed output is correct.

This is the cleanest form of trust. No hardware assumptions, no trusted third parties. Just math.

That promise makes zkML appealing for high-stakes settings like healthcare, identity, and finance, where correctness and confidentiality are both non-negotiable. It also makes zkML useful wherever AI decisions feed into smart contracts: there, the output alone isn’t enough. You need proof.

But proving is slow and expensive today.

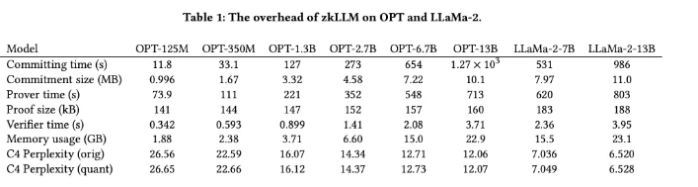

Generating a zero-knowledge proof for a single token of a large AI model can take minutes and cost 1,000 to 10,000x more than raw inference. For a 13-billion-parameter LLM, a single token can take up to 15 minutes to prove (measured on A100s, which are less powerful than current-generation GPUs). Extending that to a typical 200-token response means days of proof generation.
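The jump from minutes to days is simple multiplication. The sketch below assumes proving time scales linearly with tokens, with no batching, parallelism, or recursion. Those are exactly the levers that could shrink it.

```python
# Sequential proving time for one response, assuming time grows
# linearly with tokens (no batching, parallelism, or recursion).

MINUTES_PER_TOKEN = 15  # upper bound quoted for a 13B model on A100s
RESPONSE_TOKENS = 200   # "typical" response length from the text


def proving_hours(tokens: int, minutes_per_token: float) -> float:
    """Total proving time in hours."""
    return tokens * minutes_per_token / 60


hours = proving_hours(RESPONSE_TOKENS, MINUTES_PER_TOKEN)
print(f"{hours:.0f} hours, or about {hours / 24:.1f} days")
# 50 hours, or about 2.1 days
```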

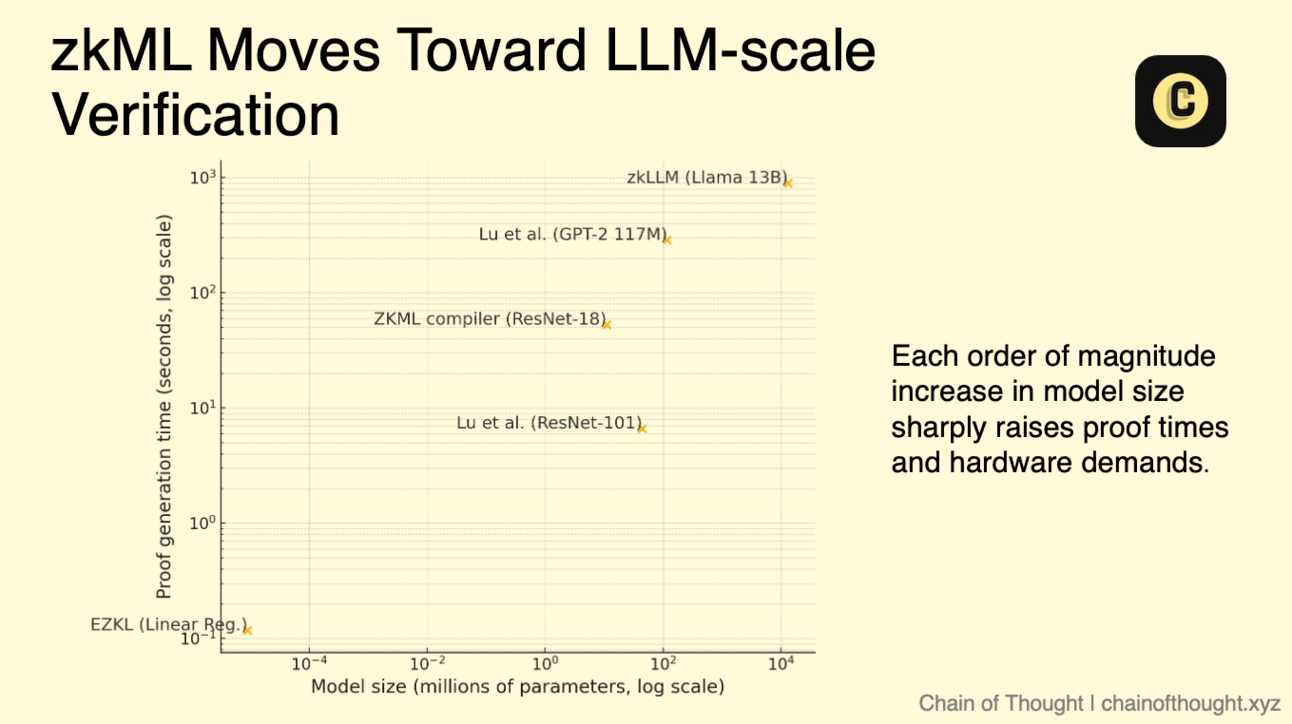

Over the past year, research has pushed zkML toward practical use for models with tens of millions of parameters.

Proofs have been generated for models like ResNet-101 (44M params, ~6.6s), GPT-2 (117M params, ~287s), and VGG-16 (15M params, ~88s). These are progress markers.

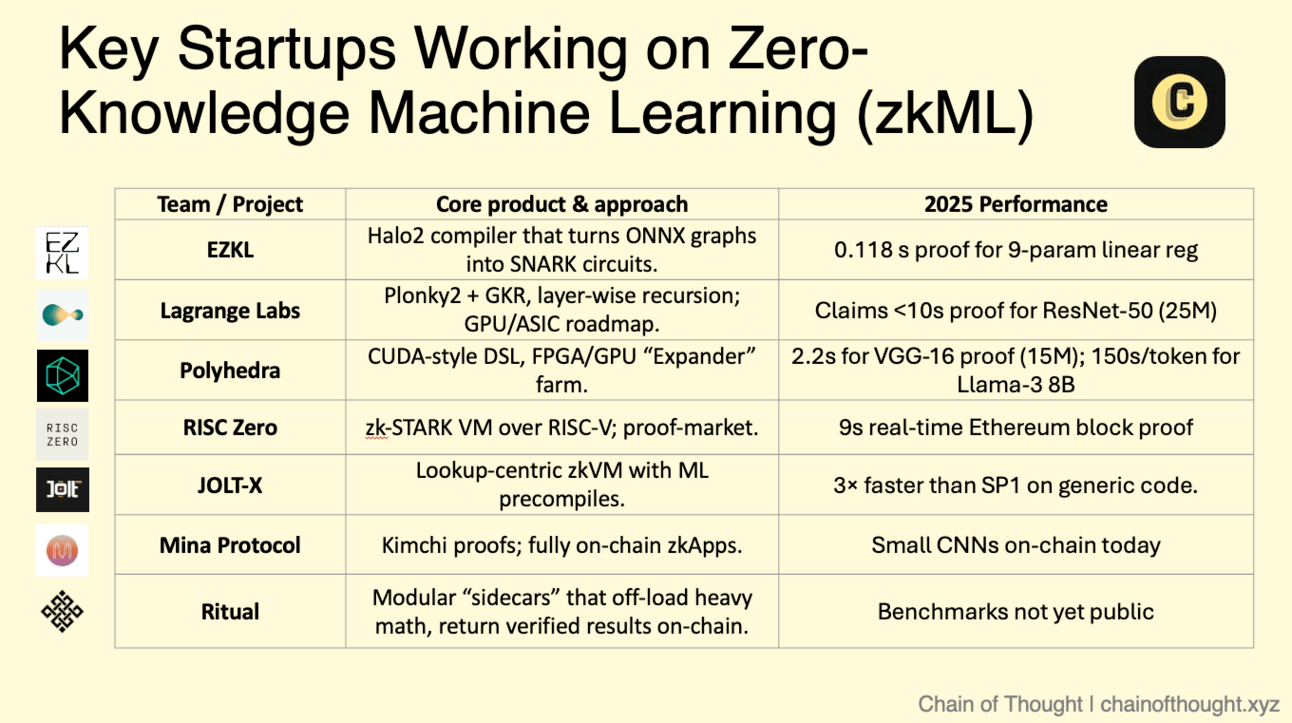

Protocols like EZKL, RISC Zero Bonsai, and JOLT-X aim to make small-to-mid scale zkML practical. Also:

DeepProve, built by Lagrange Labs, claims to support sub-10 second proofs on ResNet-50 (25M params) with ASIC backends.

Mina Protocol’s zkML library has already executed small, provable models fully on-chain.

These tools push mid-sized transformers under 100M parameters into early production territory.

Scaling proofs is still brutal. Each order-of-magnitude jump in model size sharply raises proof times and hardware demands. Circuit design adds its own fragility: a single bug in a handcrafted circuit can yield a valid-looking proof for an incorrect result, a catastrophic failure in on-chain systems. Verifiability, done carelessly, becomes an attack vector.

One common misconception is that zkML provides full privacy. zkML hides inputs and weights, but it usually exposes the model architecture. Circuit construction reveals layer counts, operations, and flow, which can be proprietary IP. Some teams now obfuscate the layout by padding circuits with decoy layers, turning model topology into a cat-and-mouse game.

There’s also the “who proves?” problem. If the model provider generates the proof, they might see sensitive inputs. If the user wants to preserve full input privacy, they may have to do it themselves, a non-starter for most consumer hardware. Either way, universal, lightweight verification remains an aspiration.

There’s promising work on these problems. Tools like ZKPROV introduce circuit-private SNARKs that mask execution structure, though at the cost of more constraints and complex setup. Long-term, the vision of proving massive LLMs in real-time depends on breakthroughs in recursive proofs, better compilers, and specialized hardware.

💾 Field Programmable Gate Arrays

On the hardware front, FPGAs are emerging as the stopgap of choice.

These are reprogrammable chips that can keep pace with fast-evolving proof systems, unlike ASICs, which lock you into a fixed architecture and require multi-year lead times. FPGA-based provers are already delivering 10–1000x speedups over CPUs, with as much as 90% lower energy per proof.

Polyhedra’s GKR engine deployed on FPGAs is one example, cutting proof times by multiple orders of magnitude. ASICs are coming, for sure, but they’ll make sense only once the zkML stack stabilizes.

Proof composition is another lever. Recursive SNARKs like Halo and Nova can compress many intermediate steps into a single, quickly verifiable proof. Instead of verifying every step, you verify the whole computation at once. Combined with batch verification (checking many similar inferences together), these techniques enable hundreds of proofs to be validated in parallel.
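Batch verification is easiest to see on a simpler primitive than a SNARK. The sketch below batches toy Schnorr signature checks using random weights, a random-exponent batching test. Many SNARK verifiers batch their checks with a similar random-linear-combination trick, but this is not how Halo, Nova, or any specific zkML system is implemented. The group parameters are deliberately tiny and insecure.

```python
import hashlib
import secrets

# Toy group: p = 2q + 1 is a safe prime and g = 4 generates the subgroup
# of prime order q. These sizes are for illustration only, not security.
P, Q, G = 2039, 1019, 4


def challenge(r: int, pub: int, msg: bytes) -> int:
    """Fiat-Shamir style challenge c = H(R, X, m) mod q."""
    data = f"{r}|{pub}|".encode() + msg
    return int.from_bytes(hashlib.sha256(data).digest(), "big") % Q


def sign(secret: int, msg: bytes) -> tuple:
    """Schnorr signature (R, s) with R = g^k and s = k + c*x mod q."""
    k = secrets.randbelow(Q - 1) + 1
    r = pow(G, k, P)
    s = (k + challenge(r, pow(G, secret, P), msg) * secret) % Q
    return r, s


def verify_one(pub: int, msg: bytes, sig: tuple) -> bool:
    """Single check: g^s == R * X^c (mod p)."""
    r, s = sig
    return pow(G, s, P) == r * pow(pub, challenge(r, pub, msg), P) % P


def verify_batch(items: list) -> bool:
    """One combined check for many (pub, msg, sig) triples.

    Each equation is raised to a fresh random weight a_i and all of them
    are multiplied together, so invalid items cannot cancel out except
    with small probability.
    """
    lhs_exp, rhs = 0, 1
    for pub, msg, (r, s) in items:
        a = secrets.randbelow(Q - 1) + 1  # random nonzero weight
        c = challenge(r, pub, msg)
        lhs_exp = (lhs_exp + a * s) % Q
        rhs = rhs * pow(r, a, P) * pow(pub, a * c % Q, P) % P
    return pow(G, lhs_exp, P) == rhs


keys = [secrets.randbelow(Q - 1) + 1 for _ in range(8)]
batch = []
for i, x in enumerate(keys):
    msg = f"inference result {i}".encode()
    batch.append((pow(G, x, P), msg, sign(x, msg)))
print(verify_batch(batch))  # True

pub, msg, (r, s) = batch[3]
batch[3] = (pub, msg, (r, (s + 1) % Q))  # corrupt one signature
print(verify_batch(batch))  # False
```

Written naively like this, the batch check is not actually faster. The savings come from multi-exponentiation algorithms and, for pairing-based SNARKs, from sharing the expensive pairing operations across many proofs.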

When paired with hardware acceleration, zkML starts to look economical.

If these lines of progress hold, we may soon be able to bring models with 10M–100M parameters into range for real-world deployment, at verification costs just a few multiples above inference.

We’re already seeing early use cases:

On-chain reputation scoring

Biometric KYC (e.g. with Worldcoin, which we wrote about recently)

AI anti-cheat systems in games

So what?

zkML delivers math-level guarantees without trusting hardware or humans. Today those proofs are slow and costly, so adoption will start with sub-100M-parameter models in high-value niches.

To cap this off, Appendix B profiles some of the key teams working on zkML.

2. Optimistic Machine Learning (OpML): The Path of Economic Stability

Optimistic methods can cut proof cost by 99% at the price of delayed finality. Where zkML aims for mathematical certainty, OpML takes a looser, incentive-driven approach. It borrows from optimistic rollups: assume the result is valid unless challenged.

In a typical setup, a node runs a model, posts the output on-chain, and stakes a bond of tokens. If someone else spots an error or manipulation, they can challenge the result within a predefined dispute window. That triggers an interactive verification game, where both sides walk through the computation step by step to identify any fault. If the output is valid, the challenger loses. If it’s wrong, the bond is slashed.
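The interactive verification game is usually a bisection. Below is a minimal sketch that makes two hypothetical simplifications: both execution traces are held in memory, and each intermediate state is reduced to a single integer. In a real protocol, each party posts one committed midpoint per round on-chain. The search narrows the dispute to a single step, which the chain can then re-execute cheaply.

```python
from typing import Callable, Sequence


def bisect_dispute(challenger: Sequence[int], asserter: Sequence[int]) -> int:
    """Return a step i where the traces agree at i-1 but disagree at i.

    Both traces must agree on the starting state (index 0) and disagree on
    the final output. Each round halves the disputed range.
    """
    lo, hi = 0, len(asserter) - 1
    if challenger[lo] != asserter[lo] or challenger[hi] == asserter[hi]:
        raise ValueError("traces must share a start and differ at the end")
    while hi - lo > 1:
        mid = (lo + hi) // 2
        if challenger[mid] == asserter[mid]:
            lo = mid  # still in agreement: the fault lies later
        else:
            hi = mid  # already diverged: the fault lies here or earlier
    return hi


def resolve(step_fn: Callable[[int], int], challenger: Sequence[int],
            asserter: Sequence[int]) -> str:
    """Re-execute only the disputed step, as the chain would."""
    i = bisect_dispute(challenger, asserter)
    correct = step_fn(asserter[i - 1])  # both sides agree on state i-1
    return "challenger wins" if asserter[i] != correct else "asserter wins"


def step(state: int) -> int:
    """Toy deterministic 'layer' standing in for one step of inference."""
    return (state * 31 + 7) % 1_000_003


trace = [42]
for _ in range(1024):
    trace.append(step(trace[-1]))
cheat = trace[:600] + [x + 1 for x in trace[600:]]  # tampered from step 600

print(bisect_dispute(trace, cheat), resolve(step, trace, cheat))
# 600 challenger wins
```

Because the range halves each round, a trace of a million steps needs only about 20 rounds. That is why the on-chain cost stays small even for long computations.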

With a fixed seed and known parameters, it’s possible to deterministically confirm that a specific input produced a specific result. Provenance is provable.

The promise of OpML is cost and speed. You don’t need to prove every result in advance. You only pay for verification when someone calls foul. That makes OpML attractive for long-running or batch computations. One prototype verified LLaMA-2 13B inference on Ethereum, bringing verification costs down to cents per inference.

But the tradeoffs are real.

You need at least one honest verifier. If no one’s watching, or if the reward for challenging is lower than the effort, invalid outputs can easily slip through. Early optimistic ML pilots have found that challenge tips must exceed AWS spot prices plus opportunity cost; otherwise, nobody verifies.
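That incentive condition can be written as a one-line rule. This rough sketch assumes a risk-neutral watcher who collects `bounty` only when the result turns out to be fraudulent. All names and numbers are hypothetical.

```python
def should_verify(fraud_prob: float, bounty: float,
                  compute_cost: float, opportunity_cost: float) -> bool:
    """A rational watcher re-runs an inference only if the expected bounty
    beats the cost of checking (compute plus capital tied up meanwhile)."""
    return fraud_prob * bounty > compute_cost + opportunity_cost


def break_even_bounty(fraud_prob: float, compute_cost: float,
                      opportunity_cost: float) -> float:
    """Bounty at which checking breaks even on average."""
    return (compute_cost + opportunity_cost) / fraud_prob


# Hypothetical numbers: 1% of results are bad, a check costs $0.60 in total.
print(should_verify(0.01, 50, 0.40, 0.20))   # False: nobody bothers
print(should_verify(0.01, 100, 0.40, 0.20))  # True
print(f"${break_even_bounty(0.01, 0.40, 0.20):.0f}")  # $60
```

This exposes a bind. The better the system deters fraud, the lower `fraud_prob` gets and the larger the bounty must be, which is one reason some designs plant deliberate errors.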

Finality is delayed. Every result must sit in a limbo period before it’s finalized. That’s fine for asynchronous or archival tasks, but ill-suited to applications where decisions must be trusted instantly.

Early-phase vulnerabilities. Smaller, newer systems are at risk of “honeypot” attacks, where provers sneak in bad outputs, assuming no one will challenge in time.

Some designs try to tighten the loop: random spot checks, seeded errors to catch inattentive nodes, and stiffer penalties for misbehavior. The faster the task, the harder it is to verify. Spot-checking a 300-millisecond inference is tricky; building an incentive layer around that is even trickier.
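Random spot checks and seeded errors both rest on the same probability. This sketch assumes independent, uniformly random checks (sampling with replacement). If a fraction f of results are bad, k checks catch at least one with probability 1 - (1 - f)^k. The same formula covers seeded errors, where f is the rate of planted faults and the target is a lazy verifier who rubber-stamps everything.

```python
import math


def detection_probability(bad_fraction: float, checks: int) -> float:
    """Chance that at least one of `checks` independent, uniformly random
    spot checks lands on a bad result."""
    return 1 - (1 - bad_fraction) ** checks


def checks_needed(bad_fraction: float, target: float) -> int:
    """Fewest spot checks that reach `target` detection probability."""
    return math.ceil(math.log(1 - target) / math.log(1 - bad_fraction))


print(checks_needed(0.01, 0.99))                 # 459
print(round(detection_probability(0.05, 20), 3))  # 0.642
```

With 1% of results bad, about 459 checks are needed for 99% confidence of catching at least one.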

OpML has taken a backseat to the other approaches and, for now, still feels like a research experiment in crypto-economic fault tolerance. It’s clever. It might scale. But it’s not yet ready to anchor trust in production-grade AI.

So what?

OpML slashes verification cost by paying only when challenged, but reliability hinges on well-funded watchers and tolerable settlement lags. Good for batch analytics, shaky for real-time control loops.

3. Trusted Execution Environments (TEEs): The Path of Pragmatic Performance

If zkML represents the gold standard of verification, TEEs are the practical tool already in use.

A TEE is a secure enclave inside a processor. Code runs in isolation, shielded even from the host operating system or the cloud provider. When the computation finishes, the enclave signs a message: code X ran on input Y and produced output Z. That attestation can be checked off-chain or written on-chain.
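The attestation flow can be sketched as follows, with one major assumption. Real enclaves sign their reports with an asymmetric, vendor-certified key, so anyone can verify them. This sketch substitutes a shared-secret HMAC so it runs on the Python standard library. The function names and report fields are hypothetical and do not correspond to any vendor’s attestation format.

```python
import hashlib
import hmac
import json

# Stand-in for the enclave's hardware-protected key. Real TEEs sign with an
# asymmetric key whose certificate chains back to the chip vendor; HMAC is
# used here only so the sketch runs on the standard library.
ENCLAVE_KEY = b"hypothetical-device-key"


def digest(data: bytes) -> str:
    return hashlib.sha256(data).hexdigest()


def _sign(report: dict) -> str:
    payload = json.dumps(report, sort_keys=True).encode()
    return hmac.new(ENCLAVE_KEY, payload, hashlib.sha256).hexdigest()


def enclave_attest(code: bytes, inp: bytes, out: bytes) -> dict:
    """Inside the enclave: bind code X, input Y and output Z in one report."""
    report = {"code": digest(code), "input": digest(inp), "output": digest(out)}
    return {**report, "sig": _sign(report)}


def check_attestation(att: dict, expected_code: str, out: bytes) -> bool:
    """Outside the enclave: check signature, code measurement and output."""
    body = {k: v for k, v in att.items() if k != "sig"}
    return (hmac.compare_digest(att["sig"], _sign(body))
            and body["code"] == expected_code
            and body["output"] == digest(out))


model = b"model-v1 weights + inference code"
att = enclave_attest(model, b"prompt", b"answer")
print(check_attestation(att, digest(model), b"answer"))        # True
print(check_attestation(att, digest(model), b"other answer"))  # False
```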

In practice, TEEs let AI feel normal. No need to recompile your model or construct complex circuits. You get fast inference, full attestation, and privacy protections for inputs and weights, without leaving the PyTorch or TensorFlow ecosystem.

Benchmarks show less than 2% overhead for LLM-scale inference in confidential GPU environments. Azure demos show LLaMA-2 7B running in under 300 milliseconds with full attestation. NEAR’s team recently published a paper showing that overheads stay low even with end-to-end attestation.

The hardware support has expanded quickly:

NVIDIA H100 and Blackwell GPUs now offer enclave-style isolation. You can wrap a PyTorch model in a secure enclave in just a few lines of code.

Azure’s confidential GPU instances secure compute, memory, and I/O under a unified attestation scheme.

Intel TDX eliminates SGX’s memory constraints and enables multi-socket support, crucial for large model inference.

But there’s a catch, and it’s a big one. You’re placing faith in the chip itself. The entire TEE model rests on trusting Intel, AMD, or NVIDIA not to make a mistake. When they do, the isolation breaks. History is full of reminders:

Foreshadow and SGX.fail broke Intel’s SGX through side-channel attacks and leaked sensitive data

AMD’s SEV has also faced attacks like CounterSEVeillance, proving that no vendor is immune

While these flaws are eventually patched, the pattern is clear: a single hardware bug can bring the entire security model down. For systems built to minimize trust, that’s a fundamental tension.

And so we see TEEs as a pragmatic foundation, not a final destination.

What could accelerate adoption? First, if AWS, Azure, and GCP offer confidential GPU instances at prices close to standard instances, usage will climb fast. Second, a sustained stretch without new side-channel breaks (say, a year) would help restore confidence in the hardware itself.

In the meantime, two trends are emerging:

Hybrid systems: Projects like RISC Zero’s zkAttest combine TEEs for execution with zk-proofs for aggregate verification. The TEE runs fast, and then a lightweight zk-proof validates batches of results. Together, they bridge performance and auditability.

Open hardware: The field is waiting for its “Linux moment”. Open enclaves on architectures like RISC-V could eventually replace the single-vendor trust bottlenecks that plague today’s TEEs. It’s early, but gaining momentum.

So yes, TEEs are fast. Yes, they integrate cleanly with existing ML workflows. And yes, they’re already deployed in production. But the “T” still means “trusted.” And for adversarial environments, that trust may be too much to ask.

So what?

TEEs can already provide verifiability and privacy for production AI systems with sub-2% overhead, yet every guarantee rests on unbroken silicon. They are the fastest path today, but not the final destination.

Other Verification Designs

Beyond OpML, several crypto-based designs aim to make compute verifiable through economic pressure rather than formal proofs.

One approach relies on sampling-based verification. Multiple nodes independently execute the same task, and a randomly chosen subset checks the results. If the outputs match, the task is accepted. If discrepancies emerge, the protocol can penalize the dishonest party, typically by slashing a staked bond.
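Here is a minimal sketch of sampling-based verification with slashing. It assumes every node has already posted an output hash and a stake. The function name, the strict-majority rule, and the 50% slash are hypothetical choices; this is not Atoma’s design.

```python
import random
from collections import Counter
from typing import Dict, Optional


def sampling_consensus(results: Dict[str, str], stakes: Dict[str, float],
                       sample_size: int, slash_fraction: float,
                       rng: random.Random) -> Optional[str]:
    """Accept the strict-majority output of a random sample of nodes and
    slash sampled nodes that disagree. Returns None without a majority."""
    sampled = rng.sample(sorted(results), sample_size)
    winner, votes = Counter(results[n] for n in sampled).most_common(1)[0]
    if votes * 2 <= sample_size:
        return None  # no clear majority: escalate or re-run
    for node in sampled:
        if results[node] != winner:
            stakes[node] *= 1 - slash_fraction
    return winner


rng = random.Random(7)
results = {f"node{i}": "0xabc" for i in range(9)}
results["node4"] = "0xbad"  # one dishonest node
stakes = {node: 100.0 for node in results}
accepted = sampling_consensus(results, stakes, 5, 0.5, rng)
print(accepted)  # 0xabc: one bad node cannot outvote four honest ones
```

Whether the dishonest node is actually slashed in a given round depends on whether it was sampled. This is the probabilistic guarantee that pushed some teams toward TEEs.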

Atoma explored a model it called “sampling consensus,” which built on these ideas. But like others in the space, the team ultimately shifted toward TEEs, favoring performance and production-readiness over probabilistic guarantees.

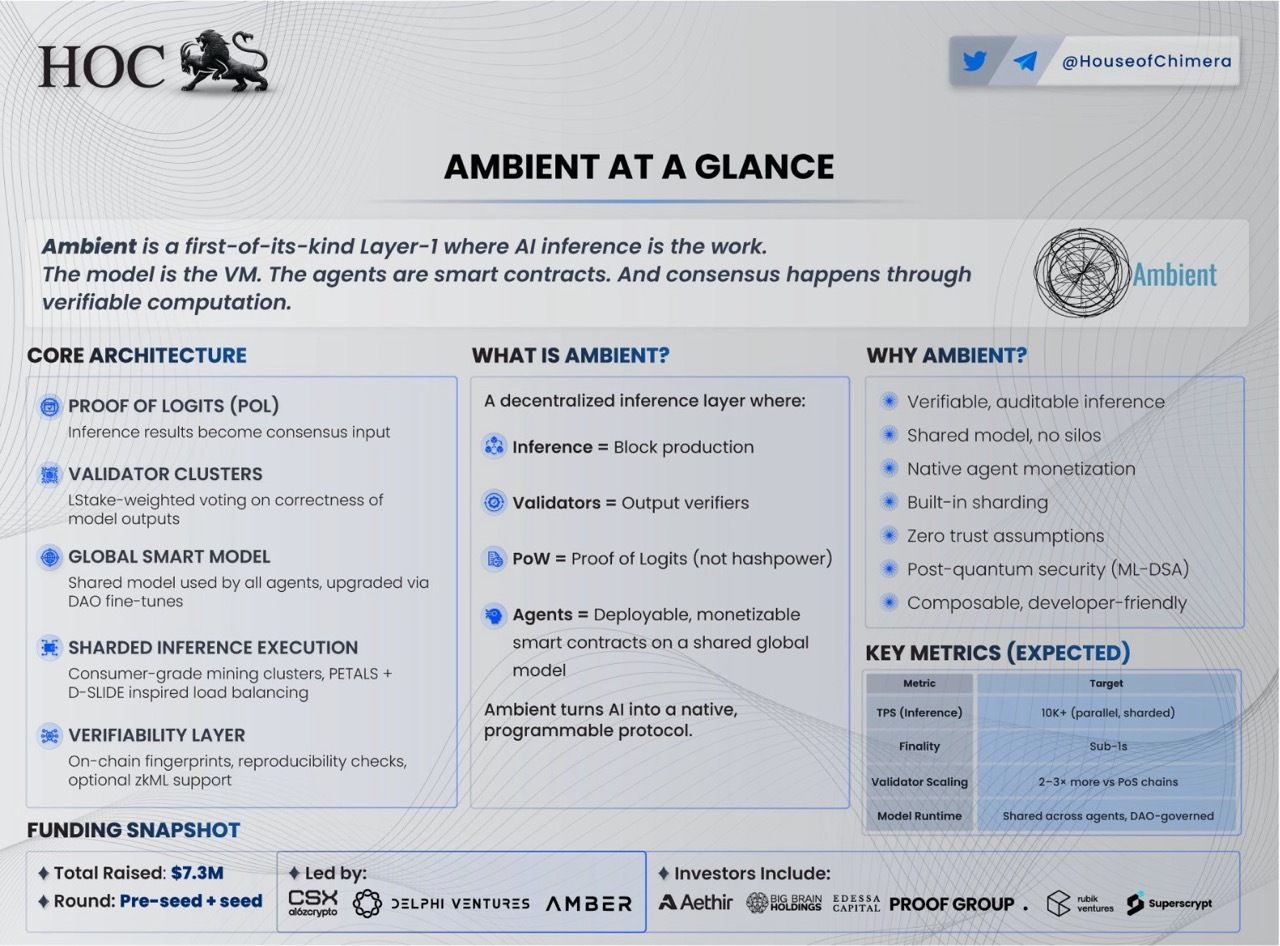

And then there’s Ambient.

Ambient is building a Solana SVM–compatible L1 that runs on a custom proof-of-work scheme: Proof of Logits (PoL). Instead of mining meaningless hashes, workers run a frozen 600B foundation model. They publish hashes of the model’s intermediate logits. Validators can audit those hashes with just 0.1% overhead. Each one acts like a cryptographic fingerprint, binding output to a specific set of weights and inputs.
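Here is a toy version of the idea. To be clear, this is not Ambient’s actual Proof of Logits construction. It only illustrates why hashing logits binds a run to specific weights. The “model” is a hypothetical deterministic integer function, and the worker publishes one hash per token position. A validator then recomputes only a random sample of positions rather than the whole generation. Sampling like this is one plausible route to low audit overhead, but we have not confirmed it is Ambient’s mechanism.

```python
import hashlib
import random
from typing import List


def toy_logits(weights: List[int], context: List[int]) -> List[int]:
    """Deterministic integer 'model' standing in for a frozen LLM.
    Integers avoid the floating-point drift a real system must handle."""
    acc = sum((i + 1) * t for i, t in enumerate(context))
    return [(w * acc + j) % 9973 for j, w in enumerate(weights)]


def logit_hash(weights: List[int], context: List[int]) -> str:
    data = ",".join(map(str, toy_logits(weights, context))).encode()
    return hashlib.sha256(data).hexdigest()


def mine(weights: List[int], tokens: List[int]) -> List[str]:
    """Worker: publish one logit hash per generated position."""
    return [logit_hash(weights, tokens[: i + 1]) for i in range(len(tokens))]


def audit(weights: List[int], tokens: List[int], hashes: List[str],
          rng: random.Random, samples: int = 1) -> bool:
    """Validator: recompute a few random positions, not the whole run."""
    for i in rng.sample(range(len(tokens)), samples):
        if logit_hash(weights, tokens[: i + 1]) != hashes[i]:
            return False
    return True


weights = list(range(3, 19))          # hypothetical canonical weights
tokens = [5, 1, 9, 2, 7, 3, 8, 4]
print(audit(weights, tokens, mine(weights, tokens), random.Random(0), 2))  # True
other = [w + 1 for w in weights]      # worker quietly swaps the weights
print(audit(weights, tokens, mine(other, tokens), random.Random(0), 2))    # False
```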

Ambient positions PoL as a midpoint between the mathematical certainty of zkML and the economic pragmatism of optimistic schemes: the network forfeits generality (only one canonical model) to achieve millisecond-level verification without specialized hardware.

Current Feasibility: Ranking Verification Approaches

Not all verification strategies are equally mature. Over the next few years, we expect the adoption curve to look something like this:

TEEs > zkML (small models) > OpML

TEEs are already in production. They support fast, private, attestable inference without requiring model rewrites or framework switches. In the short term, most systems that need verifiable AI will lean on TEE infrastructure.

zkML has the cleanest trust model, with no need to believe in hardware vendors or centralized platforms. It offers cryptographic proof that a model ran faithfully. But today, it remains slow, expensive, and limited to smaller models.

OpML is elegant in theory but brittle in practice. It relies on economic incentives, honest participation, and timely challenges. Without careful incentive design and active monitoring, the system breaks down. Few current use cases can tolerate that fragility.

We can map these approaches along two dimensions: cost and security guarantees.

In low-risk domains like ad targeting or UX personalization, the marginal utility of verification is small. If the worst-case outcome is a bad recommendation, most teams will rely on logs, not proofs. The added cost and latency aren’t worth it.

But when the stakes rise, the calculus changes.

In healthcare, governance, and adversarial environments like DeFi, a bad inference can’t be brushed off. Misdiagnoses and asset losses carry real consequences. In those environments, verifiability becomes essential.

Two things could accelerate adoption quickly:

Better tooling: When verifiability is a simple checkbox in PyTorch or TensorFlow, more teams will use it. Data scientists can use libraries like Keras or Hugging Face as usual, then snapshot the model for ZK proof generation.

A public failure: A high-profile AI mistake, especially in a critical domain, could shift public expectations overnight. “Verifiable by design” might become a regulatory floor.

Most inference today still runs without verification. But the infrastructure is forming quietly beneath it. Good, because when the demand hits, there won’t be time to build from scratch.

Part II: What Verifiable AI Unlocks

Beyond simply adding verifiability to current AI use cases, we are most excited about the new design space that verification unlocks. Verifiable AI enables a new class of systems: intelligent, autonomous, and composable by default.

1. AI Oracles and Data Feeds

Today’s oracles deliver single facts. They leave the devs to interpret what those facts mean.

Verifiable AI changes that. If you can prove that a model ran on a specific input and produced a specific output, you can start to trust decisions, not just data.

Now we can get oracles that actually reason.

Imagine a DeFi protocol that adjusts loan terms based on a user’s on-chain behavior or credit score. Or a smart contract that triggers based on a volatility forecast.

That unlocks composability. Contracts could call into AI models the same way they query price feeds today. But instead of asking “What’s the ETH/USD price?” the question becomes “Is this address a high-risk borrower?”, “Does this image violate content policy?”, or “Has this DAO proposal been miscategorized?”

Because each inference comes with a proof, other contracts can build on it without trusting whoever ran the model. The trust boundary moves from human operators to machine-checked evidence.

2. Autonomous Agents with Enforced Constraints

The next generation of on-chain agents will be AI-driven systems with policy-level authority: to route trades, propose allocations, and manage risk across complex environments.

That power needs guardrails.

Verifiability turns those limits into enforceable constraints. A trading agent, for instance, could be required to post a proof that its strategy stayed within approved risk parameters. If it deviates, the system rejects the action.

In DAOs, an autonomous treasury agent might propose fund allocations. But it must prove the proposal respects community-defined rules, say, no more than 20% spent on marketing.
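Here is what “prove the proposal respects the rules” means concretely. The rule itself is an ordinary predicate, and the zkML circuit or TEE attestation vouches that it was evaluated on the actual proposal. Below is a minimal sketch of that predicate using the 20% marketing cap from the example. The field names are hypothetical and the proving layer is left out. The same shape would fit the trading agent’s risk limits.

```python
from typing import Dict

MAX_MARKETING_SHARE = 0.20  # community rule from the example above


def allocation_allowed(proposal: Dict[str, float],
                       max_marketing: float = MAX_MARKETING_SHARE) -> bool:
    """The predicate a proof would vouch for: all amounts non-negative,
    a non-empty budget, and marketing at most 20% of the total."""
    if any(amount < 0 for amount in proposal.values()):
        return False
    total = sum(proposal.values())
    if total == 0:
        return False
    return proposal.get("marketing", 0.0) <= max_marketing * total


print(allocation_allowed({"marketing": 150_000, "grants": 500_000,
                          "audits": 350_000}))                     # True
print(allocation_allowed({"marketing": 300_000, "grants": 700_000}))  # False
```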

When agents act on behalf of a system, their reasoning must be checkable. Not just interpretable, but provable. This keeps the agents accountable and the outputs bounded. Constraint enforcement will determine whether autonomous agents become useful infrastructure or… unpredictable liabilities.

3. Decentralized Model Marketplaces

In our earlier piece on decentralized training, we made a simple claim: AI models are becoming commercial assets.

“Decentralized training offers a way to build models without relying on corporate backing, and to fund that work (which is highly capital-intensive) through shared incentives.

These trained models can be represented as on-chain tokens. Participants earn a share by contributing compute, supplying data, or verifying results.”

But if models are assets, trustless infrastructure becomes the bottleneck. Most users don’t want to download raw weights or blindly trust opaque APIs. They want a middle ground: credibility without custody. Outputs they can trust without needing to trust the provider.

That starts with provenance. When you query a model, you should get more than just a string of text. You should get a signed statement: this specific model produced this output, using this input, under these conditions. Systems built with TEEs or zkML make that possible.

That’s the foundation for a new kind of AI marketplace. One where users pay per output, providers can protect their IP, and compute networks earn by proving correct execution. Models, data, and compute all settle on a shared substrate of verifiability.

And there’s a growing set of high-leverage use cases where verifiability adds immediate value for builders:

Biometric & KYC proofs. Regulatory pressure is intensifying. World (formerly Worldcoin) already uses zero-knowledge proofs to confirm uniqueness without revealing identity. ZK-KYC lets users prove properties about themselves, like citizenship and age, without exposing underlying data. In privacy-sensitive sectors, this kind of selective disclosure is likely to become default.

Content authenticity and model provenance. The EU AI Act and the U.S. NIST framework stress explainability and traceability, but stop short of requiring cryptographic verification. That’s beginning to shift. Watermarking tools can embed origin signals in generated content. Verifiable inference proves how it was produced. One tags the output. The other proves the pipeline. Together, they offer a path toward cryptographically grounded provenance.

Confidential analytics and retrieval-augmented generation (RAG). Enterprises want to query sensitive internal data, like healthcare records or financial reports, without exposing the raw data. TEEs with remote attestation are being piloted for this use case, enabling secure search and inference over private vector databases. Money is already flowing here.

The Road Ahead: Some Thoughts

1. Integrate with Workflows, or Bust

For verifiability to matter, it has to be invisible. Exporting a model for verified inference should feel as familiar as saving it to TorchScript or ONNX. We’re not there yet, but the edges are starting to blur.

Tools like ezkl let developers convert ONNX models into zero-knowledge circuits. RISC Zero’s Bonsai offers a cloud runtime where you can execute Rust or TorchScript models and receive a cryptographic proof that the computation was correct. These are early steps, but they hint at what’s coming.

When “verify=True” becomes a one-liner in your code, adoption will follow fast.

2. Economic Gravity

Verification only works if someone’s willing to check and pay for it.

The economics of decentralized AI inference are still unsettled. Proof verification doesn’t run itself, and if it doesn’t pay, the whole system stalls. Early designs mix solver fees, MEV-style tips, and staking mechanisms to keep provers honest and verifiers alert. Aligning incentives is key: without it, correctness is just optional overhead.

One emerging model is the decentralized proof market. Bonsai envisions a job board for proofs: users post inference tasks with bounties, and provers compete to solve them. It’s freelance ZK. There’s even early evidence of MEV-like behavior (bid sniping, latency games, and economic arbitrage).

Another approach borrows from oracles. Dragonfly Research outlines a multi-prover scheme where each party stakes collateral. If the outputs disagree, the dishonest node gets slashed.

They all share a core insight: verification needs to make economic sense.

3. Verifiability ≠ Ethics

“A cryptographic proof that a model did something wrong is still a proof.”

A model can be biased, harmful, or manipulative, and still generate a flawless proof. That’s the uncomfortable truth.

Most verification tools today are built for single-shot inference. They prove that a specific model produced a specific output, given a specific input. But real trust doesn’t live in one output. It lives in behavior over time. Did the trading agent stay within bounds across 10,000 decisions? Did the AI assistant consistently respect user norms?

Getting there will require new primitives. Not just proof-of-output, but proof-of-behavior. Systems that can verify trajectories, policies, and bounded reasoning.

Accuracy is another missing piece. Proofs can show what a model did, but not whether it was right. Startups like Mira are tackling some of the hard challenges here, breaking outputs into smaller claims and using ensemble verification to check each one.
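Mira’s exact pipeline isn’t described here, so what follows is a hedged sketch of the general idea only. It assumes an output has already been split into atomic claims (in practice usually done by a model, a step we skip). Each claim then goes to several independent verifiers and is accepted only if a quorum agrees. The verifier functions and the 2/3 quorum are hypothetical.

```python
from fractions import Fraction
from typing import Callable, Dict, List

Verifier = Callable[[str], bool]  # hypothetical: True if the claim holds


def ensemble_check(claims: List[str], verifiers: List[Verifier],
                   quorum: Fraction = Fraction(2, 3)) -> Dict[str, bool]:
    """Accept each claim only if at least `quorum` of the verifiers agree."""
    verdicts = {}
    for claim in claims:
        yes = sum(1 for verify in verifiers if verify(claim))
        verdicts[claim] = yes >= quorum * len(verifiers)
    return verdicts


FACTS = {"ETH uses proof of stake", "Bitcoin launched in 2009"}


def knows_facts(claim: str) -> bool:
    return claim in FACTS


def rubber_stamp(claim: str) -> bool:  # a careless verifier
    return True


verdicts = ensemble_check(["ETH uses proof of stake", "Bitcoin launched in 2015"],
                          [knows_facts, knows_facts, rubber_stamp])
print(verdicts)
# {'ETH uses proof of stake': True, 'Bitcoin launched in 2015': False}
```

Using `Fraction` keeps the quorum comparison exact, avoiding floating-point ties at the threshold.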

And some tasks will always resist definitive checks. Policy advice, negotiation, and writing are open-ended domains where there’s no canonical “correct” output. Even when the process is authentic, the result may be misleading, manipulative, or just subtly off. The more subjective the task, the less verifiability can say about what should have happened.

Conclusion

The cost of blind trust is becoming harder to justify. AI agents will move money, allocate resources, and shape decisions. Blockchains taught us to verify transactions. Now we need to verify reasoning.

The stack is still early, but the direction is clear. Just as HTTPS made secure communication the norm, verifiable inference will make machine decisions accountable by default.

Once proofs are fast and cheap, the black box finally opens. We will know what model ran, on what input, and under which constraints. That makes AI auditable not just after the fact, but at the moment of action.

The endgame is an AI layer that can explain itself, prove its steps, and stand up to scrutiny. We’re building the accountability layer for the age of AI.

Cheers,

Teng Yan

This essay is intended solely for educational purposes and does not constitute financial advice. It is not an endorsement to buy or sell assets or make financial decisions. Always conduct your own research and exercise caution when making investments.

Teng Yan is an investor in Ambient.

Appendix A: Startups and Teams working on TEEs in Web3

Phala operates one of the largest decentralized TEE clouds. In 2025, Phala launched version 2.0, bringing secure TEE computing directly to Ethereum, and introduced GPU TEE capabilities for hardware-level AI security. It has over 22,000 nodes and integrations in the Polkadot ecosystem.

Atoma targets private, verifiable AI through secure GPU-TEE enclaves that run models without exposing data or weights, while encrypted “Buckets” store state for multi-agent collaboration. Every inference is logged on-chain, with token-based incentives driving the network. The project was active in 2025, with testnet launches and partnerships like Sui for AI integration. It enables applications such as private DeFi analytics and collaborative AI agents.

Arcium builds a decentralized confidential computing network using MPC and TEEs for secure, private executions in Web3. It processes sensitive data for AI agents and dApps without exposure, excelling in DeFi risk assessment and collaborative AI. Arcium verifies off-chain computations on-chain, offering resilience against hardware flaws via its hybrid model.

Secret Network is a Cosmos-SDK chain where every validator operates inside Intel SGX enclaves. It supports “Secret Contracts” that execute with encrypted inputs, state, and outputs, backed by remote attestation for correctness. Live use cases include front-running-proof DEXs, private NFTs, and on-chain ML. Community governance and integrations with Axelar drive adoption.

Oasis separates consensus from execution, with confidential ParaTimes running inside SGX enclaves to support apps like private credit scoring and model hosting. Proofs are relayed to the base layer, and partnerships with io.net extend TEE compute to decentralized AI workloads. Its architecture promotes scalability, making it ideal for privacy in DeFi, healthcare, and collaborative AI.

Automata offers a “TEE Coprocessor,” a multi-prover system integrating with EigenLayer as an AVS. Isolated enclaves manage tasks like MEV-resistant order matching or agent inference, posting hardware-attested proofs to rollups such as Linea, Scroll, and Worldcoin. In 2025, its mainnet AVS launch and Scroll integrations advanced confidential AI.

Inco Network serves as a universal confidentiality layer for EVM chains, combining FHE and TEEs to enable private states in smart contracts. It supports zkApps for verifiable, encrypted executions in DeFi, gaming, and identity management. Inco incentivizes validators through tokenomics to uphold confidential processing.

Super Protocol provides a TEE-based confidential cloud for Web3, integrating with networks like Phala to secure AI and DeFi executions. Developers run LLMs and agents in isolated enclaves, with remote attestation protecting data and models. Features include containerized workloads, token incentives for nodes, and blockchain integration for on-chain proofs.

iExec delivers a confidential AI platform blending blockchain and TEEs, allowing developers to create privacy-preserving AI apps with data ownership and monetization. It supports secure execution on untrusted infrastructure, ideal for Web3 agents and DeFi.

Appendix B: Startups & Teams working on zkML

ezkl is an open-source toolkit transforming ONNX-trained neural networks into Halo2-based SNARK circuits. Developers export models from PyTorch or TensorFlow, generating on-device proofs of inference without revealing inputs or weights, verifiable on-chain. ezkl has been applied in biometric KYC, content moderation, and anti-cheat; its 2025 updates include iOS integrations and other enhancements.

DeepProve from Lagrange Labs is a GPU/ASIC-optimized zkML framework using GKR, delivering proofs up to 1,000× faster than predecessors. It supports scalable verifiable inference for neural networks, with sub-10s proofs on models like ResNet50, suited for DeFi risk engines and AI oracles. In 2025, its v1 release and NVIDIA partnerships advanced its prover network on EigenLayer. Lagrange's ZK Coprocessor enables data-rich Web3 apps, ensuring verifiability without on-chain overhead.

RISC Zero’s Bonsai is a high-performance zkVM for Rust and TorchScript, featuring parallel proving and recursion for STARK-based proofs via a cloud API. It verifies AI-generated game logic, DAO votes, or confidential computations on-chain. 2025's R0VM 2.0 release included benchmarks and integrations like Boundless for ZK execution. Bonsai simplifies zkML deployment, supporting scalable verifiability in Web3.

Mina’s lightweight zkML library facilitates ONNX model inferencing and proof generation entirely on-chain, with proofs under 1MB. It enables privacy-preserving scoring for health screenings or CAPTCHAs, verified in Mina’s SNARK-based ledger. 2025 devnet releases integrated ONNX enhancements and mainnet upgrades. Mina's succinct blockchain design makes zkML ultra-efficient, ideal for resource-constrained environments.

Giza is a zkML platform on Starknet, facilitating verifiable ML workflows by converting ONNX models to ZK circuits for on-chain validation. It preserves privacy in inputs and weights, applicable to DeFi oracles and AI agents. Developer tools support PyTorch exports and recursion, enabling fast proofs for complex models.

Ritual employs zkML to verify models, inputs, and outputs on-chain. Its Infernet coordinates off-chain computations with proofs ensuring integrity, ideal for trustless agents and oracles. Ritual's 2025 mainnet launch includes token incentives for compute providers. It reshapes AI-blockchain fusion, supporting verifiable content and DeFi fraud detection.

Building on the JOLT zkVM, JOLT‑X incorporates lookup table optimizations for ML inference, proving ~70M-parameter transformer models in minutes. Targeting L2 rollups, it enables verifiable moderation and fraud detection. 2025's v1 release added recursion and 2x speed improvements over rivals.

Hardware Acceleration

Cysic creates ZK hardware accelerators for zkML, with ASICs and GPUs optimizing proof generation for ML models. It slashes latency and costs, integrating with Succinct's SP1 and JOLT for L2 rollups and DeFi. Cysic's 2025 chips enable real-time zkML for oracles and agents.

Fabric Cryptography develops custom silicon for ZK proofs, accelerating zkML in Web3 via its Verifiable Processing Unit (VPU). It optimizes SNARK/STARK generation for frameworks like ezkl and RISC Zero, supporting verifiable NFT valuations and DeFi models. Funded in 2025, Fabric targets faster, cheaper proofs for decentralized AI.